This is the eighth in a series of posts on the Sony a7RIII (and a7RII, for comparison) spatial processing that is invoked when you use a shutter speed of longer than 3.2 seconds. The series starts here.

Before I conducted the tests on which I’ll report further down the page, I had thought that long-exposure hot pixels were mostly in the same locations from frame to frame. I was wrong. But first, some old business.

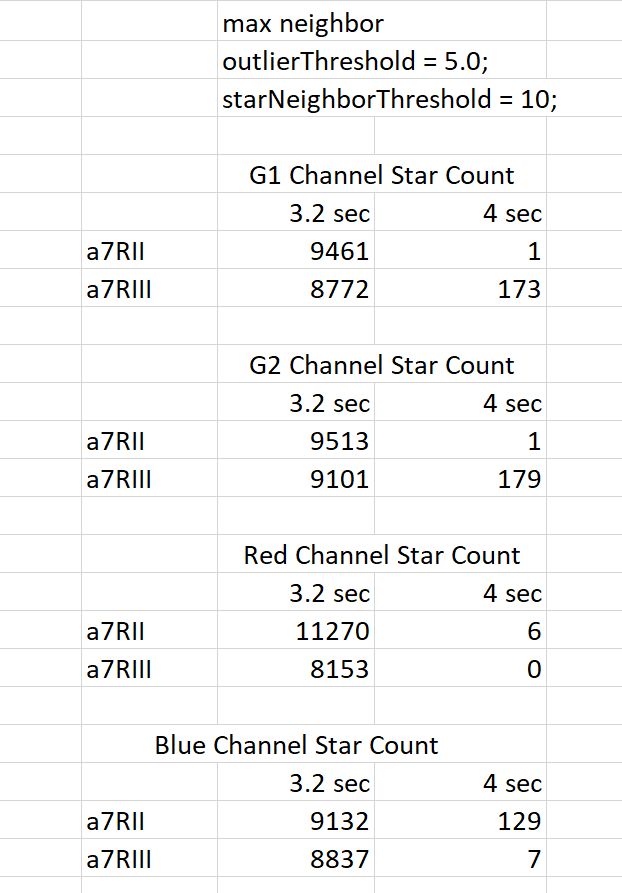

In the previous post, I talked about the differences between 3.2-second and 4-second dark-field exposures with the a7RII and a7RIII when the files were subjected to a test that counted the number of star-like objects. Last night I realized that, because I hadn’t noticed a checkbox setting in RawDigger, I’d processed the images in a space with a gamma of 2.2 instead of the linear space that I meant to use. I added a few lines of code and reran the passes that used the ratio of each outlier’s intensity to that of its brightest neighboring pixel as a criterion for stardom.

The conclusions remain the same, but I am presenting the above in case people are trying to reproduce my results. By the way, I ran the star-counter program against synthetic Gaussian random noise fields of the same dimensions as the 11 MP a7RII raw planes and found no stars.
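For anyone reproducing the rerun, the gamma setting matters mainly for the ratio test: a factor of 10 between two linear values shrinks to about 2.85 (10 to the power 1/2.2) once both are gamma-2.2 encoded. Here is a minimal sketch of undoing such an encoding. It assumes a pure power law with values normalized to a white level of 1.0; the function name `gamma_to_linear` is hypothetical, and this is not the code used for the graphs in this post.

```python
def gamma_to_linear(encoded, gamma=2.2, white=1.0):
    """Undo a pure power-law encoding: linear = white * (encoded / white) ** gamma.

    encoded: list of rows of gamma-encoded pixel values in [0, white].
    Negative inputs are clamped to zero before the power is applied.
    """
    return [
        [white * (max(v, 0.0) / white) ** gamma for v in row]
        for row in encoded
    ]


# A 10:1 linear ratio looks much smaller when measured on encoded values.
ratio_in_gamma_space = 10 ** (1 / 2.2)
```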

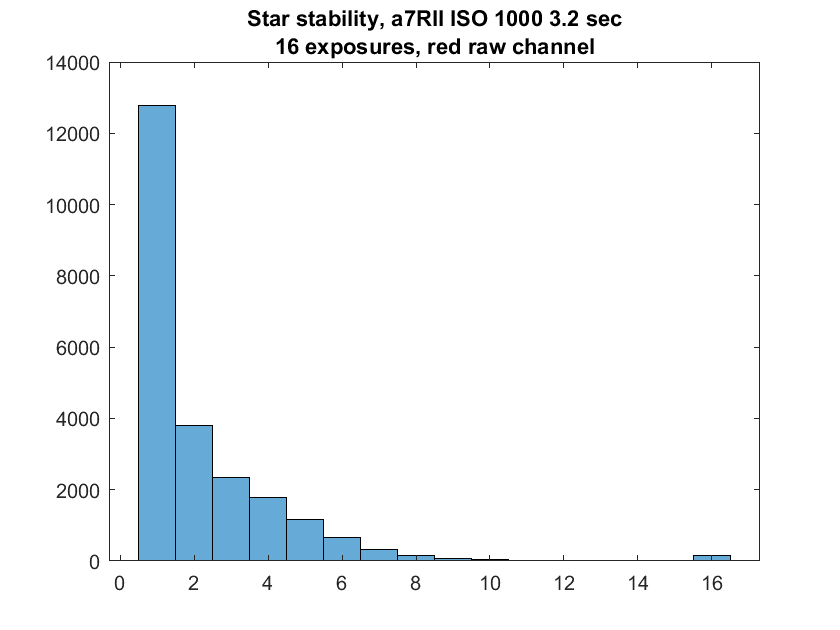

But this is mostly a post about the stability of the “stars” that the program counts in successive exposures. I had thought that most of the hot pixels that crop up in long exposures with the a7RII were in the same place exposure after exposure, but I’d never tested that. Now I had the makings of a good tool to perform such a test. I made 16 exposures of the back of the body cap with an a7RII, with a 3.2-second duration, at ISO 1000. I counted stars with these criteria:

- an outlierThreshold of 5 standard deviations

- a starNeighborThreshold that said that stars must exceed the brightest of their 8 neighbors by a factor of 10.
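The two criteria above can be sketched roughly as follows. This is an illustration under stated assumptions, not the program used for the graphs: it assumes the outlier cutoff is measured from the mean of the whole plane, that pixel values are linear with the black level already removed, and that border pixels are skipped. The function name `find_stars` is hypothetical, and plain Python lists are used only to keep the sketch dependency-free.

```python
import statistics


def find_stars(plane, outlier_threshold=5.0, star_neighbor_threshold=10.0):
    """Return the set of (row, col) positions flagged as star-like.

    plane: list of equal-length rows of linear pixel values from one raw plane.
    Pass star_neighbor_threshold=None to drop the neighbor constraint.
    """
    values = [v for row in plane for v in row]
    mean = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    cutoff = mean + outlier_threshold * sigma  # assumption: measured from the mean

    rows, cols = len(plane), len(plane[0])
    stars = set()
    # Skip the border so every candidate has all 8 neighbors.
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            v = plane[r][c]
            if v <= cutoff:
                continue  # not an outlier
            brightest = max(
                plane[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            if (star_neighbor_threshold is None
                    or v > star_neighbor_threshold * brightest):
                stars.add((r, c))
    return stars
```

Calling `find_stars(plane, star_neighbor_threshold=None)` keeps only the outlier test, so every sufficiently bright pixel counts regardless of its neighbors.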

Then I looked at the number of different times in the 16 exposures where the program found a star in the same place:

I expected the column labeled “16” to be much higher. For a pixel to be counted there, it has to show up as a star in all 16 shots. By far the largest number of stars were found at their location just once.
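The tally behind this kind of graph can be sketched as below, assuming each exposure has already been reduced to a set of (row, col) star positions. The function name `recurrence_histogram` is hypothetical, and this is an illustration rather than the code that produced the graph.

```python
from collections import Counter


def recurrence_histogram(star_sets):
    """Map k -> number of pixel locations flagged as a star in exactly k frames.

    star_sets: one collection of (row, col) positions per exposure.
    """
    hits_per_location = Counter()
    for stars in star_sets:
        # set() guards against a location being listed twice in one frame.
        hits_per_location.update(set(stars))
    histogram = Counter(hits_per_location.values())
    return {k: histogram.get(k, 0) for k in range(1, len(star_sets) + 1)}
```

With 16 exposures, the entry for key 16 is the count of locations that were stars in every shot, and the entry for key 1 is the count of locations that appeared only once.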

Removing the constraint that a hot-pixel has to be much brighter than the brightest of its neighbors:

Now we see more representation at the upper end of the horizontal scale, but still much less than I’d expected.

It has been speculated that the Sony lossy raw compression algorithm can itself interfere with imaging stars. I ran the same test with compressed files:

Roughly half the number of stars. Looks like the speculations were well-founded. The distribution seems unaffected.

A similar, but not quite so great, diminution in hot pixels. Again, the general shape of the distribution is about the same.

The evanescence of hot pixels in the a7RII explains a lot about why the Sony engineers didn’t simply map them out; they aren’t consistent enough. It also explains why long exposure noise reduction (LENR) is not particularly effective on this camera.

If we simulate the frame subtraction that is at the heart of LENR on 8 pairs of captures (the same as the uncompressed ones above), here is what we see:

Note that the number of hot pixels has not actually gone down once we double the numbers in the graph above to account for the fact that there are half as many samples. The little bump on the right side that indicates the same hot pixel showed up in capture after capture has disappeared, as expected. That’s what LENR is supposed to do.
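The frame subtraction itself can be sketched as follows. How the captures were paired and whether negative differences were clipped are not stated here, so pairing consecutive frames and leaving the differences signed are both assumptions. The function names `subtract_dark` and `simulate_lenr` are hypothetical.

```python
def subtract_dark(frame, dark):
    """Pixel-by-pixel difference of two equally sized frames (signed, not clipped)."""
    return [[a - b for a, b in zip(frame_row, dark_row)]
            for frame_row, dark_row in zip(frame, dark)]


def simulate_lenr(frames):
    """Treat frames 0, 2, 4, ... as exposures and 1, 3, 5, ... as their dark frames.

    Returns half as many difference frames as there are input frames.
    """
    if len(frames) % 2:
        raise ValueError("need an even number of frames")
    return [subtract_dark(frames[i], frames[i + 1])
            for i in range(0, len(frames), 2)]
```

A hot pixel with the same value in both frames of a pair cancels to zero, while one that appears in only one frame of the pair survives the subtraction. That is consistent with the right-hand bump disappearing while the overall count stays put.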

More great insights here, Jim. In particular it explains why in DeepSkyStacker I get almost all the gains that can be had simply by stacking a good number of (A7RII) image files, corrected for vignetting with an averaged flat file – I’ve always found that adding dark files generally contributes minimal improvement.

I’ve often puzzled about this, because it’s at odds with the experience of users of other camera brands, who generally seem to find that dark files are essential, which suggests that their background noise is more consistent. I will feel much happier leaving them out from here on, relying instead on median stacking to take out the random noise in each frame…

There are not only hot pixels; there are also hot areas. There are, for example, cameras where one edge of the sensor is hotter than the other due to power being supplied from there. All the pixels near that edge are recorded a tad brighter than the rest of the bunch.