Lensrentals has loaned me a Fuji GFX 100S II for testing. My first test was to measure the precision in various drive modes:

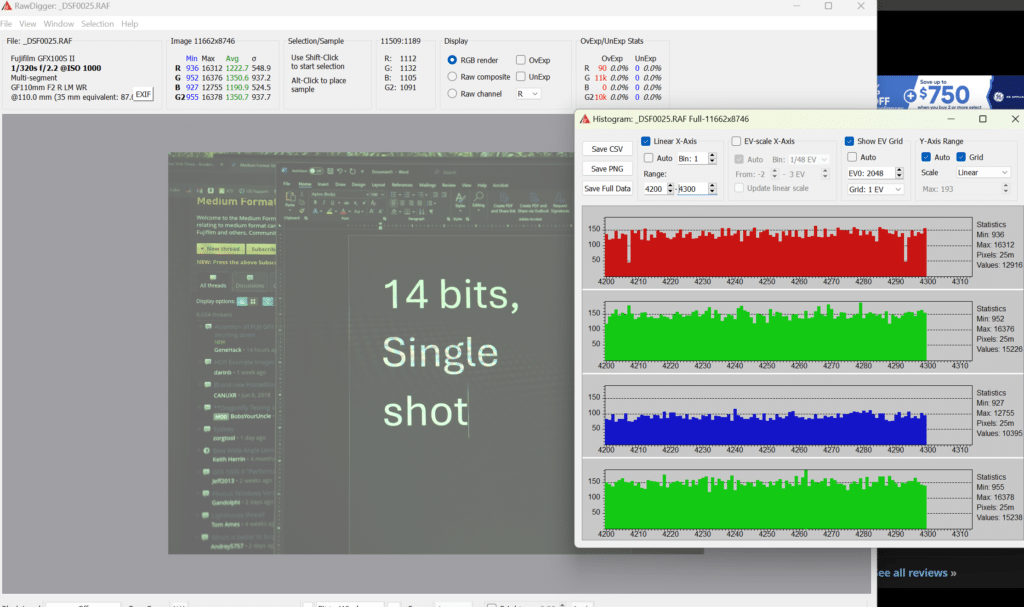

- Single shot, 14 bit

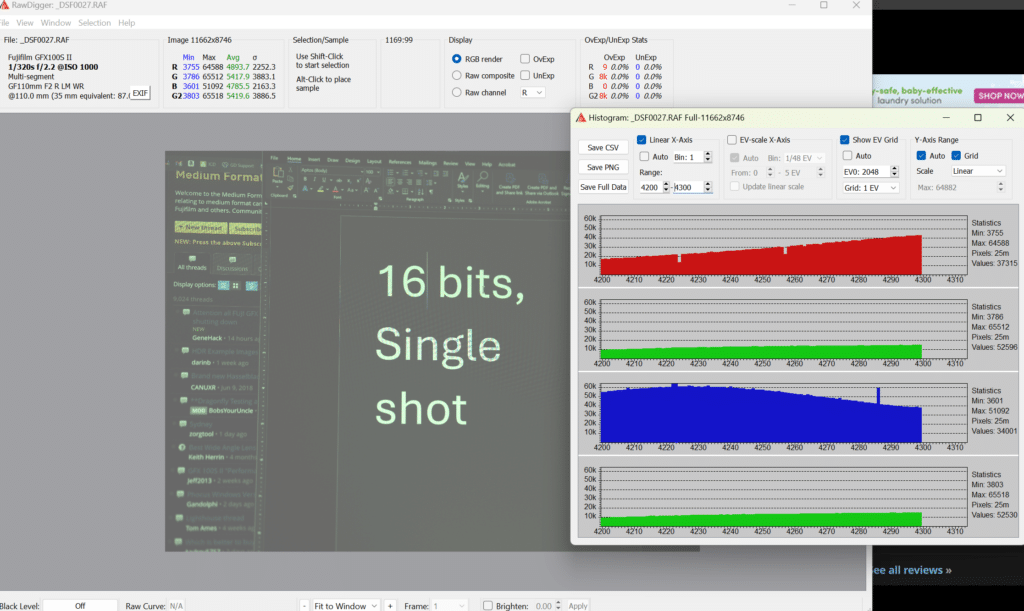

- Single shot, 16 bit

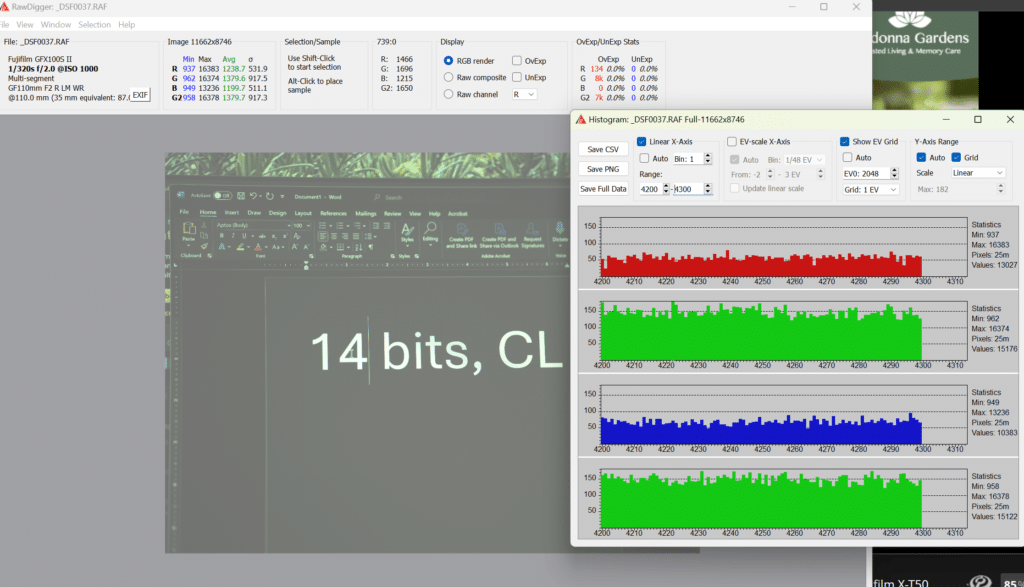

- CL

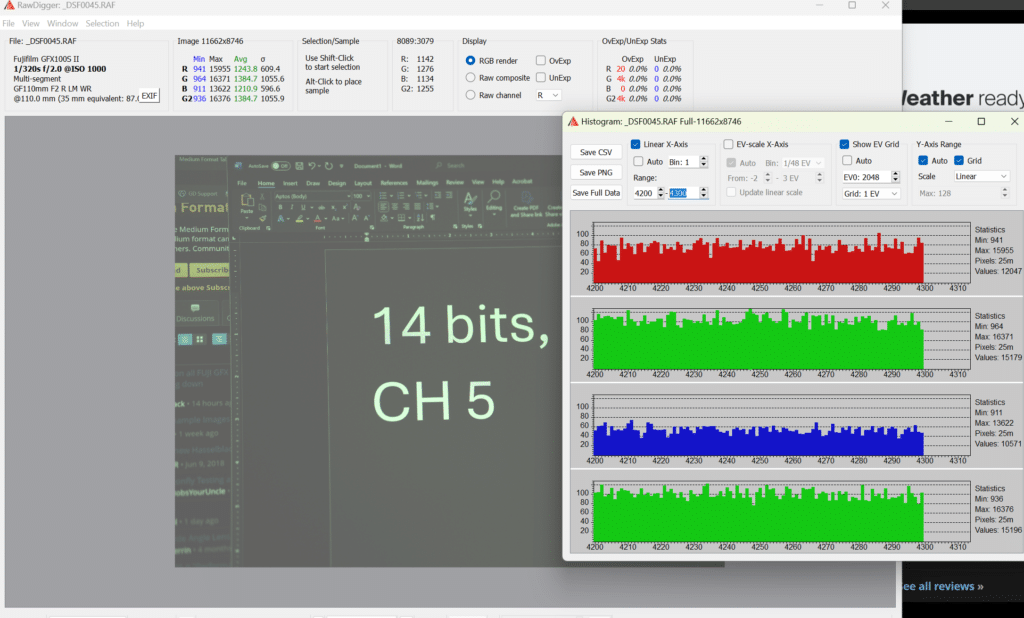

- CH 5 fps

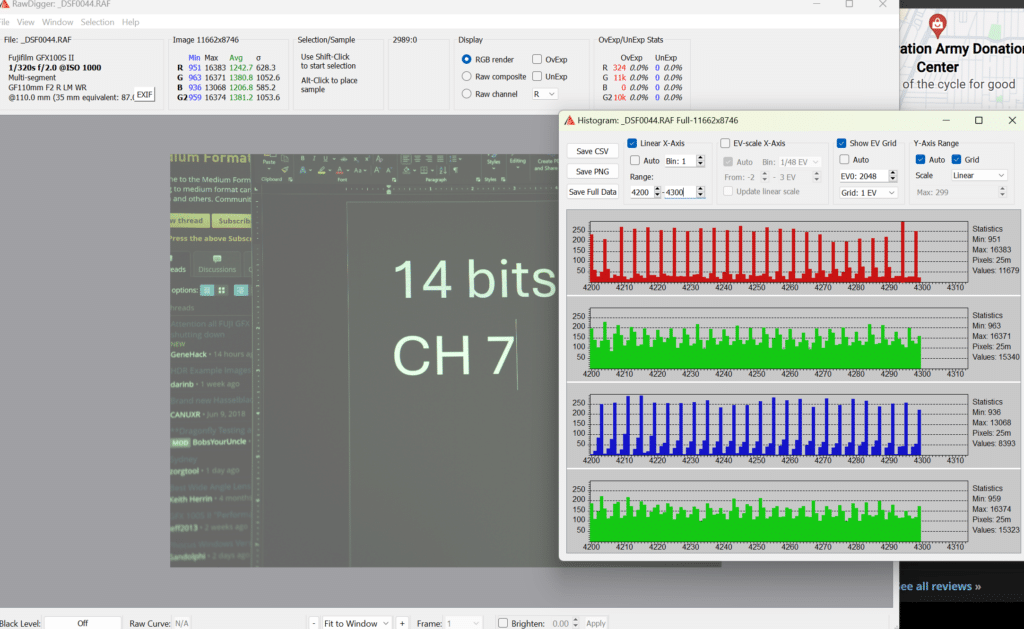

- CH 7 fps

All of the results above were captured with the camera in EFCS mode.

So, the answers are:

- Single shot, 14 bit — 14 bits

- Single shot, 16 bit — 16 bits

- CL — 14 bits

- CH 5 fps — 14 bits

- CH 7 fps — 12 bits

Julian says

Does “precision” mean color accuracy? I’m not sure how to interpret the graphs. I see the curves are much smoother and the y-axis values are orders of magnitude higher for 16-bit, but I'm unsure what that means in the real world without accompanying text. Thanks for looking into this, as it’s a major selling point for the camera system.

JimK says

Precision and accuracy are technically almost orthogonal. Precision in this case means the number of bits that the analog to digital converters are putting out. Look for gaps in the histograms to see values which the ADCs aren’t coding, even if they get slightly filled in with post-ADC processing.
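The gap-hunting idea can be sketched in code. This is a minimal illustration, not the method used to make the histograms above: it assumes the raw samples sit in a container wider than the ADC precision, so uncoded values show up as regularly spaced gaps, and it treats a low-order bit as "not coded" when one residue dominates the samples. The names `estimate_precision` and `simulate`, the 0.9 dominance threshold, and the 5% fill-in fraction are all hypothetical choices, and the data is synthetic.

```python
import numpy as np

def estimate_precision(raw, container_bits=16, dominance=0.9):
    """Estimate how many bits the samples actually exercise.

    For k = 1, 2, ... look at the low k bits of every sample. If one
    residue accounts for at least `dominance` of the samples, those k
    bits are treated as not coded (histogram gaps, possibly slightly
    filled in by later processing).
    """
    raw = np.asarray(raw, dtype=np.int64).ravel()
    missing = 0
    for k in range(1, container_bits):
        counts = np.bincount(raw % (1 << k), minlength=1 << k)
        if counts.max() / raw.size < dominance:
            break
        missing = k
    return container_bits - missing

def simulate(adc_bits, container_bits=16, fill_fraction=0.05,
             n=200_000, seed=0):
    """Synthetic samples: adc_bits of real data in a wider container.

    A small fraction of samples gets random low-order bits to mimic
    post-ADC processing partly filling the gaps (an assumption).
    """
    rng = np.random.default_rng(seed)
    shift = container_bits - adc_bits
    codes = rng.integers(0, 1 << adc_bits, size=n) << shift
    if shift:
        touched = rng.random(n) < fill_fraction
        codes[touched] += rng.integers(0, 1 << shift, size=int(touched.sum()))
    return codes

if __name__ == "__main__":
    for bits in (16, 14, 12):
        print(bits, "simulated ->", estimate_precision(simulate(bits)), "estimated")
```

On real files you would feed in the raw sample array from one channel of a reasonably exposed, smooth-toned image; a frame with very few distinct values would fool this simple test.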

Paolo Madamba says

So does that mean that in continuous shooting, even if I set the bit rate to 16, it still only shoots at 14?

JimK says

“bit rate”? I don’t see a setting for bit rate. You can’t set what Fuji calls “output depth”, by which they mean precision, to 16 bits in either the CH or CL drive modes.

Renjie Zhu says

I used RawDigger to analyze images taken with the GFX 100 II in CH 8 fps, 35mm mode. The precision looks the same as single shot 14-bit; there are no obvious gaps from skipped codes. My guess is that the smaller number of pixels relieves the pressure on writing to the buffer.

For bird photography, choose 35mm mode at CH 8 fps.

For birds in flight, with image compression enabled, shooting can be sustained for 60 seconds.

For large wildlife, full frame at CH 5 fps is a good choice.

JimK says

Thanks for that.