In the beginning, digital photography sensors were monochromatic. If you wanted color, you made three successive exposures through different filters, which were usually mounted on a wheel for rapid sequencing. A variant of this approach was to use a series of prisms and half-silvered mirrors to split the imaging light into three beams which were filtered independently and simultaneously imaged on three monochromatic sensors. This approach is still used in some video cameras.

In the early 1970s, before digital photography even got off the ground, Bryce Bayer invented his color filter array (CFA) pattern, which exposed different sensels through different color filters, all in a single exposure.

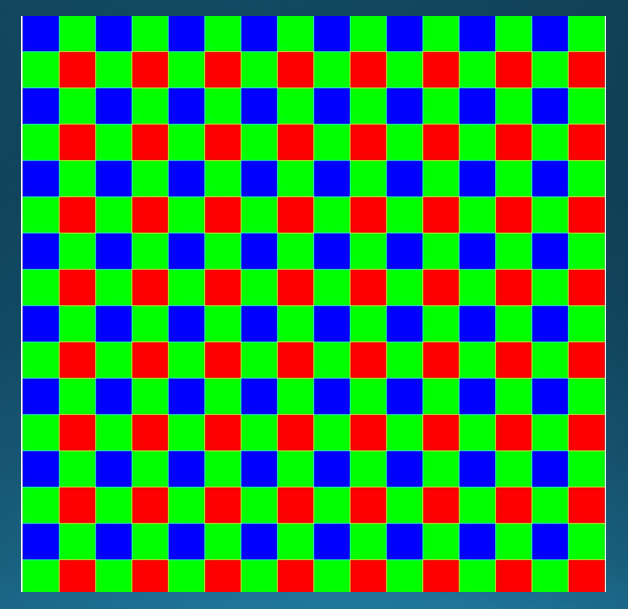

Here’s what a Bayer CFA looks like:

As an aside, I’ve always thought that having some techie thing named after you was neat. To that end, last year I proposed the Kasson pattern, which was, and still is, available for license at no charge, provided my name is displayed prominently on any camera using it.

Amazingly, no one has taken me up on my generous offer.

OK, back to Bayer. His array has been very successful, and the vast majority of contemporary digital cameras use it. There have been variants with different patterns and different colors in the filters, but they haven’t caught on. There is a totally different approach, Foveon, developed at the company Carver Mead founded, that exploits the fact that different wavelengths of light penetrate silicon to different depths. It stacks the sensels for the different wavelength bands vertically (the bands are not red, green, and blue, but more like white, yellow, and red). It remains a niche technology.

So the Bayer array is the big kahuna of photographic capture, making possible the marvelous cameras that we have today. It’s not all good, though. Images captured using a Bayer CFA are missing some information. In the production of a standard RGB image from a raw file from a Bayer CFA camera, half the green pixels, and three-quarters of the red and blue pixels, have to be created in software. The process is called demosaicing, and there are many algorithms. Not surprisingly, with two thirds of the data in the demosaiced image produced by sophisticated guesswork, there are problems in some demosaiced images. They include false colors and weird patterns. It’s amazing that it works as well as it does.
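The arithmetic behind that two-thirds figure can be checked with a small sketch. It assumes the common RGGB tile layout; `TILE`, `measured_fraction`, and `interpolated_fraction` are names made up for this illustration, not part of any raw developer.

```python
from fractions import Fraction

# Assumed 2x2 Bayer tile in RGGB order. Other orders (GRBG, BGGR, ...)
# shift the tile but leave the counts unchanged.
TILE = [["R", "G"],
        ["G", "B"]]


def measured_fraction(channel, tile=TILE):
    """Fraction of sensels that directly measure the given channel."""
    cells = [cell for row in tile for cell in row]
    return Fraction(cells.count(channel), len(cells))


def interpolated_fraction(tile=TILE):
    """Fraction of the values in a full RGB image that must be estimated."""
    missing = sum(1 - measured_fraction(ch, tile) for ch in "RGB")
    return missing / 3  # three channel values per output pixel
```

With this tile, `1 - measured_fraction("G")` is 1/2, the same expression for `"R"` or `"B"` is 3/4, and `interpolated_fraction()` comes out to 2/3.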

It occurs to me that, with cameras like the Pentax 645Z, there is now a practical alternative to demosaicing Bayer-mosaiced files. If the raw developer took each block of two green, one red, and one blue raw pixels and used it to compute one output pixel, say by averaging the greens, passing on the red and blue, and then doing the usual color space conversion, we’d have an image untouched by the hand of demosaicing. With 50 megapixels in the 645Z sensor, we’d have a 12.5 megapixel output file, which is plenty for many purposes. Because two green raw pixels are averaged to produce one processed pixel, we’d have about 0.7 times (1/√2) as much photon luminance noise. That sounds like an advantage until you realize that conventional demosaicing followed by res’ing the result down to 12.5 MP would combine four pixels into each output pixel and give about half the noise.
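Here is a minimal sketch of that block-binning idea in plain Python. It assumes an RGGB layout and a raw mosaic supplied as a list of rows. The function name `bin_rggb` is hypothetical, and black-level subtraction, white balance, and the color space conversion are all left out.

```python
def bin_rggb(raw):
    """Collapse each 2x2 RGGB block of a raw mosaic into one (R, G, B) triple.

    `raw` is a list of equal-length rows of numbers with even height and
    width. The R G / G B layout is an assumption; other Bayer orders would
    need the indices below rearranged.
    """
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("mosaic dimensions must be even")

    out = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            r = raw[y][x]                            # top-left: red, passed on
            g = (raw[y][x + 1] + raw[y + 1][x]) / 2  # average the two greens
            b = raw[y + 1][x + 1]                    # bottom-right: blue, passed on
            row.append((r, g, b))
        out.append(row)
    return out


# A 2x4 mosaic becomes a 1x2 image: a quarter as many pixels.
mosaic = [[10, 20, 30, 40],
          [22,  5, 44,  7]]
print(bin_rggb(mosaic))  # [[(10, 21.0, 5), (30, 42.0, 7)]]
```

One thing the sketch glosses over: the red, green, and blue samples in a block sit at slightly different positions on the sensor, so treating them as one pixel may introduce small color offsets at sharp edges.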

Is this a practical approach? Only experimentation can answer that. At best, it will not replace demosaicing in all circumstances, but it may prove useful for some purposes.

PS. If you sometimes look at the photo boards and want a chuckle, take a look here: http://www.lensrentals.com/blog/2012/03/hammerforum-com
