I’ve done some more work on my camera simulator. I decided that simulating the antialiasing filter by allowing fill factors greater than 100%, while programmatically convenient, is not always a good approximation to real AA filters. So I’ve added a separate AA operation that uses either rectangular averaging (box) filtering or circular averaging (pillbox) filtering. We won’t use this immediately, since neither the D800E nor the a7R has an AA filter. Before someone corrects me: yes, I know that the D800E has a kind of trick now-you-see-it-now-you-don’t filter arrangement, but I’ve decided not to call it an AA filter, and not to try to simulate it.
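For anyone who wants to see what the two averaging kernels look like, here is a minimal Python sketch. It is not the simulator's Matlab code; the function names `box_kernel` and `pillbox_kernel` and the `oversample` parameter are made up for illustration, and the pillbox estimates edge-pixel coverage by crude supersampling rather than by exact geometry.

```python
import math

def box_kernel(size):
    """size x size rectangular averaging kernel (all weights equal)."""
    w = 1.0 / (size * size)
    return [[w] * size for _ in range(size)]

def pillbox_kernel(radius, oversample=8):
    """Circular averaging kernel of the given radius, in pixels.

    Each pixel's weight is proportional to the fraction of its area that
    falls inside the disk, estimated on an oversample x oversample grid.
    """
    half = int(math.ceil(radius))
    n = 2 * half + 1
    hits = [[0] * n for _ in range(n)]
    for r in range(n):
        for c in range(n):
            for i in range(oversample):
                for j in range(oversample):
                    # Sub-sample position relative to the kernel center.
                    y = (r - half) - 0.5 + (i + 0.5) / oversample
                    x = (c - half) - 0.5 + (j + 0.5) / oversample
                    if x * x + y * y <= radius * radius:
                        hits[r][c] += 1
    total = sum(map(sum, hits))
    return [[v / total for v in row] for row in hits]

if __name__ == "__main__":
    for row in pillbox_kernel(1.5):
        print(" ".join(f"{v:.3f}" for v in row))
```

Both kernels sum to one, so filtering with either leaves the average image brightness unchanged; the difference is only in the shape of the region being averaged.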

I added camera motion simulation to the model. I did that by using a Matlab function that builds a convolution kernel simulating constant-velocity camera motion over an integer number of pixels in any fixed direction, and applying that kernel to the full-res target image.
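To make the idea concrete, here is a rough Python stand-in for that step. It is not the Matlab function used in the simulator: `motion_kernel`, `convolve`, and the `samples` parameter are hypothetical names, and the kernel is built under the assumption that constant-velocity motion smears each point uniformly along a straight segment, which is then spread onto the pixel grid with bilinear weights.

```python
import math

def motion_kernel(length, angle_deg, samples=64):
    """Kernel for constant-velocity motion of `length` pixels.

    angle_deg is measured counterclockwise from horizontal, so 0 is
    horizontal motion and 90 is vertical motion.
    """
    half = int(math.ceil(length / 2.0))
    n = 2 * half + 1
    k = [[0.0] * n for _ in range(n)]
    dx = math.cos(math.radians(angle_deg))
    dy = -math.sin(math.radians(angle_deg))  # image rows grow downward
    for s in range(samples):
        # Midpoint samples spread uniformly along the motion segment.
        t = ((s + 0.5) / samples - 0.5) * length
        x, y = half + t * dx, half + t * dy
        c0, r0 = int(math.floor(x)), int(math.floor(y))
        fx, fy = x - c0, y - r0
        # Bilinear split of this sample among the four nearest pixels.
        for r, wy in ((r0, 1.0 - fy), (r0 + 1, fy)):
            for c, wx in ((c0, 1.0 - fx), (c0 + 1, fx)):
                if 0 <= r < n and 0 <= c < n:
                    k[r][c] += wy * wx
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]

def convolve(image, kernel):
    """Same-size 2-D convolution with edge replication (slow, pure Python)."""
    h, w = len(image), len(image[0])
    n = len(kernel)
    half = n // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i in range(n):
                for j in range(n):
                    rr = min(max(r + half - i, 0), h - 1)
                    cc = min(max(c + half - j, 0), w - 1)
                    acc += kernel[i][j] * image[rr][cc]
            out[r][c] = acc
    return out

if __name__ == "__main__":
    # A single bright horizontal line, blurred by 2 pixels of vertical motion.
    line = [[1.0 if r == 3 else 0.0 for _ in range(7)] for r in range(7)]
    blurred = convolve(line, motion_kernel(2, 90))
    print([round(row[3], 3) for row in blurred])
```

In this sketch a two-pixel vertical motion spreads a one-pixel-high horizontal line over three rows, while a vertical line passes through essentially unchanged.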

Here’s what we get with a vertical motion of two sensor pixels, with perfect focus and a 100% fill ratio:

You can see that the horizontal lines are blurred and the vertical ones are virtually unaffected.

With a horizontal motion of two sensor pixels, with perfect focus and a 100% fill ratio:

The vertical lines are blurred and the horizontal ones are virtually unaffected.

Already we know something we didn’t know before about the D800E and a7R images of two posts ago: the camera motion is not strictly vertical. Why is that, when the shutters operate vertically with the camera in landscape orientation? It must be that there is some horizontal component, and that the tripod resists the vertical motion better than the horizontal motion, so we end up with comparable amounts of both.

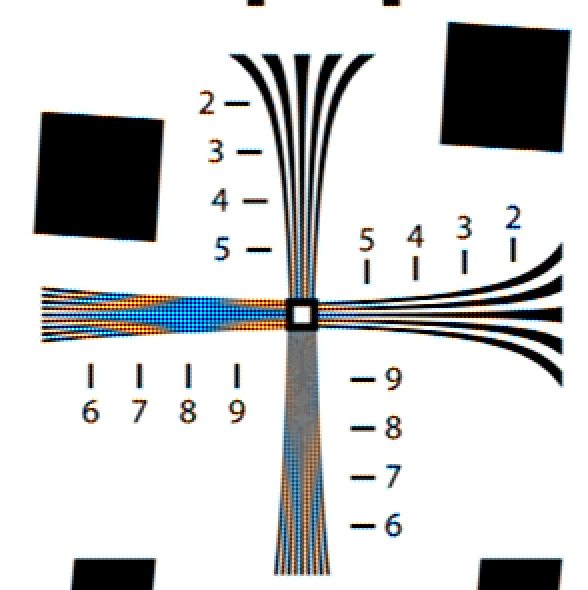

Here’s what the sim looks like with a 45-degree motion of two sensor pixels, with perfect focus and a 100% fill ratio:

Now both the horizontal and the vertical lines are blurred, but each is blurred less than in the two images immediately above, because a 2-pixel move in the 45-degree direction amounts to a shift of only about 1.4 pixels (2/√2) in each of the vertical and horizontal directions.
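The arithmetic is just the projection of the motion onto each axis. A small Python sketch (the helper name `motion_components` is made up for illustration):

```python
import math

def motion_components(length, angle_deg):
    """Horizontal and vertical extent of a straight move of `length` pixels."""
    a = math.radians(angle_deg)
    return abs(length * math.cos(a)), abs(length * math.sin(a))

if __name__ == "__main__":
    for length in (2, 3):
        h, v = motion_components(length, 45)
        print(f"{length} px at 45 degrees: {h:.2f} px horizontal, {v:.2f} px vertical")
```

A 2-pixel diagonal move works out to about 1.41 pixels along each axis, and a 3-pixel diagonal move to about 2.12.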

Here’s a look with a 45-degree motion of three sensor pixels, with perfect focus and a 100% fill ratio:

Now we know something else we didn’t know before: the camera sensor motions that we’re seeing are not small fractions of a sensel; they’re shifts of one to three sensels.

A criticism of the camera motion simulation that I’ve chosen is that it doesn’t accurately represent the damped coupled harmonic motion that characterizes the actual vibration. I accept that as valid, but what I’ve done is a whole lot more useful than no simulation at all, and I can tweak things later if that looks like a good thing to do.

Ferrell McCollough says

I’ve stared at your pictures longer than I’ve stared at any Ansel Adams picture, so that makes you quite the photographer. Really excellent work you are doing. Do you have a feeling, based on your pics with the A7R and D800, whether the motion is equally horizontal and vertical – similar to the 45 degree motion in the simulation?

I suppose in portrait orientation the vulnerability of the tripod design would be revealed. Then one could take the experiment even further and test optimum spacing of tripod legs for resisting shutter vibration. I doubt tripod engineers consider much more than ergonomics when the leg spread “locks” are designed.

One could also come up with the optimum leg positions relative to the camera. For example, always placing the furthest leg forward and the two nearest left and right.

Jim says

Ferrell,

I’m currently thinking that the a7R motion is roughly equal parts vertical and horizontal, based on the images I made of the ISO 12233 target earlier.

When I get the RRS L-bracket, I’ll do some portrait-mode images, and I expect that they’ll be mostly shaken parallel to the ground, as the shutter direction and the weak tripod direction align.

Interesting point about the leg positions…