Many years ago, I wrote an article on backing up photographic images. Quite a few bytes have flowed under the figurative bridge since then, and the original article is sufficiently obsolete as to be nearly useless. So, I’m going to take another crack at it, with liberal self-plagiarization from the original article.

In chemical photography, you have only one master image of each exposure. It’s stored on the film you put into your camera. If you value the images you can make from it, that master image is precious to you. Be it original negative or original transparency, any version of the image not produced from the master will allow reduced flexibility and/or deliver inferior quality. Photographers routinely obsess over the storage conditions of their negatives, in some cases spending tens of thousands of dollars to construct underground fire-resistant storage facilities with just the proper temperature and humidity.

One of the inherent advantages of digital photography is the ability to create perfect copies of the master image, and to distribute these copies in such a way that one will always be available in the event of a disaster. In the real world, this ability to create identical copies is only a potential advantage. I have never had a photographer tell me that his house burned down, but he didn’t lose his negatives because he kept the good ones in his safe deposit box, his climate-controlled bunker, or at his ex-wife’s house. On the other hand, I have talked to several people who have lost work through computer hardware or software errors or by their own mistakes. It takes some planning and effort to turn the theoretical safety of digital storage into something you can count on. The purpose of this article is to help you gain confidence in the safety of your digital images and sleep better at night.

Before I delve too deeply into the details of digital storage, I’d like to be clear about for whom I’m writing. I’ve been involved with computers for more than sixty years and consider myself an expert. If you are similarly skilled, you don’t need my advice, and I welcome your opinions on the subject. Conversely, if you are frightened of computers, feel nervous about installing new hardware or software, and get confused when thinking about networking, you should probably stop reading right now; what I have to say will probably not make you sleep better at night, and may indeed push you in the other direction. My advice is for those between these two poles: comfortable with computers, but short of expert. I’ve tried to avoid making this discussion more complicated than it has to be, but it’s a technical subject, and it needs to be covered in some detail if you are to make choices appropriate to your situation.

Okay, with the caveats out of the way, let’s get started. People occasionally ask me what techniques I recommend for archiving images. I tell them that I don’t recommend archiving images at all, but I strongly recommend backing them up. Let me explain the difference.

When you create an archive of an image, you make a copy of that image that you think is going to last a long time. You store the archived image in a safe place or several safe places and erase it from your hard drive. That’s called offline storage, as opposed to online storage, which stores the data on your computer or on some networked computer that you can access easily. If you want the image at some point in the future, you retrieve one of your archived images, load it onto your computer, and go on from there.

When you back up an image, you leave the image on your hard drive and make a copy of that image on some media that you can get at if your hard drive fails. Since the image is still on your hard drive, your normal access to the image is through that hard drive; you only need the backup if your hard drive (or, sometimes, computer) fails.

The key differentiation between the two approaches is that with archiving, you normally expect to access the image from the archived media, as opposed to only using the offline media in an emergency.

A world of difference stems from this simple distinction.

I’m down on archiving for the following reasons:

- Media life uncertainty. It is difficult – verging on impossible – to obtain trustworthy information on the average useful life of data stored on various media. Accelerated aging testing of media is a hugely inexact undertaking. Manufacturers routinely change their processes without informing their customers. The people with the best information usually have the biggest incentive to skew the results.

- Media variations. Because of manufacturing and storage variations (CD or DVD life depends on the chemical and physical characteristics of the cases they are stored in, as well as temperature and humidity), you can’t be sure that one particular image on one particular archive will be readable when you need it.

- File format evanescence. File formats come and go, and by the time you need to get at an image you may not have a program that can read that image’s file format.

- Media evanescence. Media types come and go (remember 9-track magnetic tape, 3M cartridges, 8-inch floppies, magneto-optical disks, the old Syquest and Iomega drives?). By the time you need to get at an image you may not have a device that can read your media.

- Inconvenient access. You may have a hard time finding the image you’re looking for amidst a pile of old disks or tapes.

- Difficulty editing. Over time, your conception of an image worth keeping probably will change. But it’s a lot of trouble to load your archived images onto your computer, decide which ones you really want, and create new archives, so you probably won’t do that. You’ll just let the media pile up. Your biographers will love you for that, but it will make it harder to find a place to store the archives, and harder to find things when you need to.

The drawback to the online-storage-with-backup approach has traditionally been cost. But the cost per byte of both magnetic and solid-state disks has been plummeting for years, and it’s now so low that, for most serious photographers, it’s not an impediment to online storage of all your images.

In addition to the above list, there’s also a psychological difficulty with archiving: “out of sight, out of mind.” Once you’ve got a drawer full of archived files, you probably will leave them be until you want to retrieve an image. At that point, you may find that the data was not recorded properly, that the media has degraded so the files are unreadable, or that you can’t find a device to read them that is supported by your current operating system. You won’t be happy then.

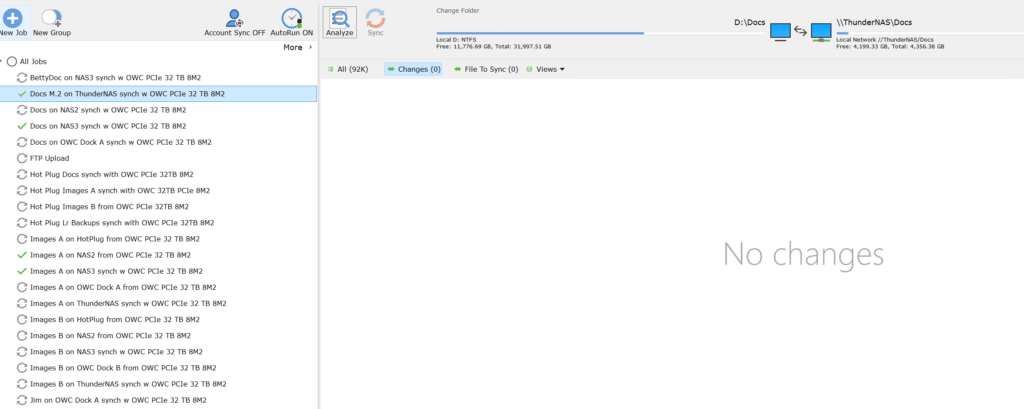

If you buy into my backup-not-archive approach, you’ll keep all your files on disks attached to your main workstation (or maybe in a network attached storage (NAS) box accessible through 10 gigabit Ethernet (10GbE), USB-C, or Thunderbolt), and you’ll back up to some combination of local drives, NAS boxes, bare drives for transport to an off-site storage location, and cloud storage. I back up to all of those.

At a bare minimum, you’ll want two backups of your files. If you can afford it, four or five is a better number. You’ll want enough local backups so that you can deal with a failed backup device, make mistakes and destroy your data, and still have a successful recovery in the event of a failure on your main machine. There’s an important psychological aspect to this, too. When faced with loss of precious data, most people’s first reaction, unless they’re IT professionals (and sometimes even if they are), is mild to terrifying panic. That sort of panic can lead to mistakes that, instead of restoring the missing files, wipe out the backup files. If you have several backup copies, you can recover from that situation. In addition, just the knowledge that you have several backup copies can calm your mind and reduce the chances that you’ll make some major screw-up with the restoration.

You should also test your backup system, and train yourself to do restores properly by doing trial restores regularly. This requirement effectively eliminates disk mirroring systems. Only a very brave (and possibly foolish) person would do a trial restore on their perfectly functional workstation and risk destroying its integrity. In fact, I’ve found disk mirroring programs so unreliable for workstation transitions or restoration – for one thing, they tend to break copy protection features – that I don’t back up my programs at all. If my OS disk crashes, I’m prepared to reinstall my programs. I am, however, fanatical about backing up my data.

Where should you keep your data? The options are on-site, off-site, and in the cloud. You should at least seriously consider doing all three.

We’ll get to on-site storage in a bit. The best way to handle off-site storage is physical transportation of disks or disk subsystems. I favor moving the raw drives around, either to a friend’s house (you can return the favor) or to a safe deposit box. SSDs are smaller and lighter than magnetic disks, but more expensive. There are many cloud storage options. I’ve used the business versions of Backblaze in the past, and it’s been a generally good experience, but I use Google Workspace now and am generally happier with it. Amazon’s basic S3 service has good performance but is expensive. Amazon’s Glacier doesn’t offer easy changes to stored data.

At the turn of the millennium, the economics of data storage favored using other devices than disks for backed-up data. For most users, that hasn’t been true for years. You should back up your data to either spinning rust or solid state (flash) disks, just as you should use either solid state or spinning magnetic disks for your data storage.

How should you attach your backup disks to your computer? There are several options:

- Spare SATA or M.2 disks in your computer chassis.

- Hot-plug SATA or M.2 disks plugged into your computer.

- External storage boxes attached via USB or Thunderbolt.

- Network attached storage (NAS) attached via Ethernet.

The first alternative is viable, but it shouldn’t be your only backup target, because a computer failure could render those drives temporarily or permanently unavailable. The second alternative is good if you can easily attach the hot plug disks to another computer. The external USB/Thunderbolt solution is good if the box can easily be attached to another computer. The fourth alternative is the most versatile but may suffer a speed disadvantage compared to the third if very fast Ethernet connections aren’t used.

To RAID or not to RAID?

Disks can be arranged in arrays for the purpose of improving performance or correcting for failures. These arrays are called Redundant Arrays of Inexpensive Disks (RAID). David Patterson, Garth Gibson, and Randy Katz of UC Berkeley developed the concept and the original taxonomy of RAID levels. The levels you are most likely to encounter today are:

- RAID 0 – striped, not redundant at all. Improves transfer speed, since data can be read from more than one disk at a time.

- RAID 1 – mirrored. Data is duplicated on paired drives. Can tolerate a single disk failure without losing data. Doubles the disk storage required.

- RAID 10 – striped and mirrored. Provides tolerance of a single drive failure, and speed advantages because of the striping.

- RAID 5 – uses parity data spread across the drives to achieve tolerance of single disk failures with less cost penalty than RAID 1.

- RAID 6 – uses two sets of parity data to be able to tolerate two disk failures at greater cost than RAID 5.
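To put rough numbers on the cost differences in the list above, here is a minimal Python sketch of usable capacity by RAID level. It assumes identical drives, treats RAID 1 and RAID 10 as two-way mirrored pairs, and ignores file system overhead; the function name and the four-drive, 8 TB example are my own illustrative choices, not measurements of any particular product.

```python
def usable_capacity_tb(level: str, drives: int, drive_tb: float) -> float:
    """Usable capacity in TB for an array of identical drives (simplified model)."""
    # Minimum drive counts assumed for each level in this simplified model.
    minimum = {"0": 2, "1": 2, "10": 4, "5": 3, "6": 4}
    if level not in minimum:
        raise ValueError(f"unknown RAID level: {level}")
    if drives < minimum[level]:
        raise ValueError(f"RAID {level} needs at least {minimum[level]} drives")
    if level in ("1", "10") and drives % 2:
        raise ValueError("this sketch assumes mirrored pairs, so an even drive count")

    if level == "0":
        return drives * drive_tb          # no redundancy, all space is usable
    if level in ("1", "10"):
        return drives * drive_tb / 2      # every byte is stored twice
    if level == "5":
        return (drives - 1) * drive_tb    # one drive's worth of parity
    return (drives - 2) * drive_tb        # RAID 6: two drives' worth of parity


if __name__ == "__main__":
    # Hypothetical array: four 8 TB drives.
    for level in ("0", "10", "5", "6"):
        print(f"RAID {level}: {usable_capacity_tb(level, 4, 8.0):.0f} TB usable")
```

With only four drives, RAID 6 and RAID 10 cost the same amount of space in this model; the capacity advantage of parity over mirroring grows as you add drives.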

With the redundant options – all but RAID 0 – there is a window after a drive fails and is replaced during which the missing data is being rebuilt, and a further drive failure in that window can cause loss of data. Over the last couple of decades, the capacity of magnetic disks has risen faster than their transfer speed, with the result that the rebuilding times have gotten to the point where I don’t recommend RAID 5 for magnetic disks. You are much less likely to lose data during a rebuild if you use RAID 6.
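The length of that rebuild window can be estimated with simple arithmetic: the capacity of the replaced drive divided by the rate at which it can be rewritten. The Python sketch below does that calculation. The drive sizes and transfer rates in the example are assumptions I picked for illustration, not benchmarks, and the result is a best case, since a real rebuild competes with normal use of the array.

```python
def rebuild_hours(drive_tb: float, sustained_mb_per_s: float) -> float:
    """Best-case hours to rewrite one whole drive at a sustained rate (decimal units)."""
    drive_bytes = drive_tb * 1e12                 # 1 TB = 10**12 bytes
    bytes_per_second = sustained_mb_per_s * 1e6   # 1 MB/s = 10**6 bytes per second
    return drive_bytes / bytes_per_second / 3600


if __name__ == "__main__":
    # Hypothetical figures: a large magnetic disk versus a smaller, faster SSD.
    print(f"20 TB disk at 200 MB/s: {rebuild_hours(20, 200):.1f} hours")
    print(f"4 TB SSD at 2000 MB/s: {rebuild_hours(4, 2000):.1f} hours")
```

Under those assumed figures the magnetic disk is exposed for more than a day, while the SSD is exposed for well under an hour.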

However, SSDs have changed the calculus for me. They tend to be smaller than magnetic disks, fail less often, and have faster transfer speeds. All those differences make RAID 5 a viable option for SSDs.

Hardware vs software RAID controllers

Many operating systems, like Windows 11, come with software that will organize multiple disk drives into RAID arrays. You can also buy third party software, like SoftRAID, to do that. The other way to go about constructing a RAID is to buy an external box that contains its own computer and an operating system designed around storage tasks, and to use the RAID software that comes with the box. The latter solution is my preferred way of handling all but very fast PCIe M.2 arrays. My current favorite vendor is QNAP. They make desktop NAS boxes with up to 25 gigabit per second Ethernet and high-speed Thunderbolt interfaces.

Why not software RAID? I’ve had bad experiences attempting restoration of data both from the software RAID solutions incorporated into Windows and from third-party products. The restoration process is opaque and intolerant of some kinds of hardware failures, and the repair tools are inadequate. If you use software RAID, make your peace with reformatting a failed array and restoring your data from another backup.

Opacity vs transparency

From a transparency perspective, there are two kinds of backup programs. One kind encapsulates your data in a proprietary or public domain wrapper. To see your backed up files, you need to restore them to a temporary location. The other kind saves your data in a file structure like – and, ideally, the same as – the file structure you are using to store the live copy of the data. The latter is the kind of backup program I favor. The first reason is so that you can check the integrity of your backed up data in place – you won’t have to restore it to do that. The second reason I like that approach is that you won’t be dependent on any proprietary software for your restoration; you can use any copy program you wish for both backups and restores, and the programs don’t have to be the same.
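To illustrate what checking a transparent backup in place can look like, here is a minimal Python sketch that compares the file listing of a live folder against its backup without restoring or writing anything. The function names are hypothetical, and the check is deliberately shallow: it compares only relative paths and file sizes, so it will miss corruption that leaves the size unchanged.

```python
from pathlib import Path


def list_files(root: Path) -> dict[str, int]:
    """Map each file's path relative to root onto its size in bytes."""
    return {
        p.relative_to(root).as_posix(): p.stat().st_size
        for p in root.rglob("*")
        if p.is_file()
    }


def compare_trees(source: Path, backup: Path) -> dict[str, list[str]]:
    """Report files missing from the backup, extra in it, or different in size."""
    src, bak = list_files(source), list_files(backup)
    return {
        "missing_from_backup": sorted(src.keys() - bak.keys()),
        "only_in_backup": sorted(bak.keys() - src.keys()),
        "size_differs": sorted(
            name for name in src.keys() & bak.keys() if src[name] != bak[name]
        ),
    }
```

Calling `compare_trees(Path("D:/Photos"), Path("Z:/Photos"))` (both paths are placeholders) returns three lists you can read through. An opaque, wrapped backup cannot be inspected this way without first restoring it somewhere.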

What to do – and what not to do – in an emergency

Suppose there has been a hardware failure or a user screw-up and you’ve lost a ton of your data. What’s the first thing you do? The right answer is nothing: take a deep breath, assess the status of your backups, and wait long enough that that panicky feeling passes. One good plan is to sleep on it, and you’ll sleep soundly if you have lots of backups. If it was a hardware failure, replace the broken parts and format the partitions. If you want to do work in the meantime, remap the drive letters of one of the backup boxes or the backup drives in your workstation to the drive letters of the failed unit(s). When you are calm, have a plan, and are ready to restore the data to its original location, write down the steps, go through them slowly, and double check at each increment of your plan. Be careful that you get the direction of restoration right, but know that, even if you mess that up, you’ve got more backups just in case. Before you tell the backup software to copy over the files, take a good look at the changes it wants to make, and see if those seem right to you. There’s no hurry. You can work with your remapped drives as long as you want, which takes the time pressure off. But the remapped drives you’re working with will need to be backed up if you make changes you wish to preserve.

Check on your backed-up data. Does it all appear to be there? One thing that can give you confidence in the integrity of your backup is to compare several backups using your backup software with the write phase turned off and make sure all your backups are the same. Confident in your backup data? Then fire up your backup software again and run a comparison of your newly installed or newly formatted disks and take a long look at what your backup software proposes to do. Does it make sense? Then go ahead and tell the backup software to write the backed-up data to its new home. Go do something fun while that happens.

When the restore is complete, compare it to the backup. They should be the same.
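If your backups are plain file trees, the look-before-you-write routine described above can be sketched in a few lines of Python. This is an illustration under my own assumptions, not a description of any particular backup product: `plan_restore` and `restore` are hypothetical names, the sketch never deletes anything from the target, and the write phase is off unless you explicitly pass `dry_run=False`.

```python
import filecmp
import shutil
from pathlib import Path


def plan_restore(backup: Path, target: Path) -> list[str]:
    """List relative paths a restore would write: absent from target or different in content."""
    plan = []
    for src in sorted(backup.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(backup)
        dest = target / rel
        # shallow=False compares file contents rather than trusting size and timestamp alone.
        if not dest.is_file() or not filecmp.cmp(src, dest, shallow=False):
            plan.append(rel.as_posix())
    return plan


def restore(backup: Path, target: Path, dry_run: bool = True) -> list[str]:
    """Return the restore plan; copy files from backup to target only when dry_run is False."""
    plan = plan_restore(backup, target)
    if not dry_run:
        for rel in plan:
            dest = target / rel
            dest.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(backup / rel, dest)  # copy2 also preserves timestamps
    return plan
```

Making the backup the first argument and the target the second, and making the dry run the default, are small guards against getting the direction of the restore wrong. After a real restore, `plan_restore` should return an empty list, which is the final comparison in code form.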

Automation

I recommend having some of your backup scheduling automated. That way you won’t have to remember to do those backups, and there’s a greater chance that the files you need to restore will be there on the day you need them. However, I don’t think you should put all your eggs in that basket. It’s never happened to me, but I worry about inadvertent deletions being propagated, so I think that at least one of your backups should be strictly manual, and that you should look closely at what the backup program proposes to do before you turn it loose.
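For the automated backups, one possible guard against propagating an accidental deletion is to count how many backed-up files have vanished from the source before letting a mirroring job run. The Python sketch below shows the idea; the function names and the 5 percent threshold are hypothetical choices of mine, and you would tune or replace them for your own setup.

```python
from pathlib import Path


def relative_files(root: Path) -> set[str]:
    """Set of file paths under root, expressed relative to root."""
    return {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}


def pending_deletions(source: Path, backup: Path) -> list[str]:
    """Files that exist in the backup but no longer exist in the source."""
    return sorted(relative_files(backup) - relative_files(source))


def safe_to_mirror(source: Path, backup: Path, max_fraction: float = 0.05) -> bool:
    """False when mirroring would delete more than max_fraction of the backed-up files."""
    backed_up = relative_files(backup)
    if not backed_up:
        return True  # nothing in the backup yet, so nothing can be deleted
    doomed = backed_up - relative_files(source)
    return len(doomed) / len(backed_up) <= max_fraction
```

A scheduled job could call `safe_to_mirror` first and skip the run, or alert you, when it returns False. It is a coarse check and no substitute for keeping one backup that only changes when you have looked at what is about to happen.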

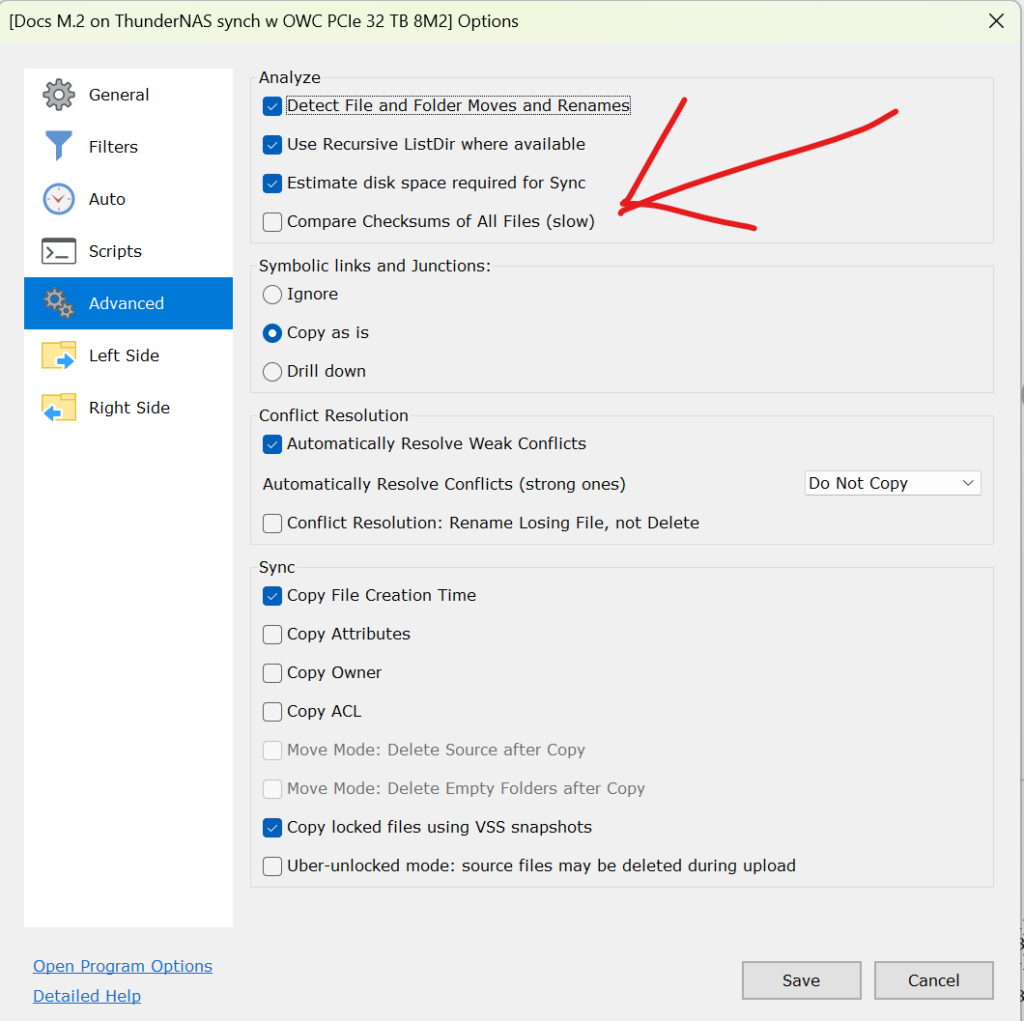

Checksum or no checksum?

Many backup programs offer the option to run cyclic redundancy checks, compute checksums, or use other mechanisms to ensure that the copied files are the same as the originals.

This is not an unalloyed blessing, because these checks increase the backup times greatly. I don’t usually use them myself, but if the decreased throughput is not a problem in your application, I recommend the extra measure of safety.
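If your backup program lacks such an option, or you want an independent check, a checksum comparison is easy to sketch in Python. The example below uses SHA-256 from the standard library; the function names are hypothetical, and the choice of SHA-256 is mine rather than a description of what any particular backup program does.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """SHA-256 hex digest of a file, read in chunks so large images don't fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        while chunk := f.read(chunk_size):
            digest.update(chunk)
    return digest.hexdigest()


def copies_match(original: Path, copy: Path) -> bool:
    """True when both files have the same SHA-256 digest."""
    return sha256_of(original) == sha256_of(copy)
```

The cost is visible in the code: every byte of both files has to be read back, which is why these checks slow a backup down so much.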

If you do all the above, it may help you to sleep better at night, and it may save you from a very unpleasant loss of data.

Mike Nelson Pedde says

Very well said. If I may, I’d add two things.

1) On my computer I have a folder called Installed, and in that folder I have the install files for all of the software on my computer. That folder is updated every time I download an update. Within that folder is also a file called Licenses, and in that file are the serial numbers for all of the software on my computer. If I have a system crash I install the OS on the new disk, run the backup software to reinstall that folder and reinstall my software from there. THEN I restore my data.

2) A question I’ve been asked more than once is, “How often should I back things up?” The answer is simple (and always the same): “How much can you afford to lose?”

Mike

PT says

There are two things you need a backup for.

1. the original RAW version

2. the (often painstakingly) processed image

And for each version: at least 3 backups on different discs = already 6 backups.

And if you consider that each backup should be transferred to a new medium every few years (as the magnetic effect of the storage medium fizzles out over the years): well, it’s getting very time-consuming.

And storage media that last 1000 years are the technology of the future: always have been and always will be.

Jack Hogan says

Hi Jim,

I had a workflow similar to yours until a couple of years ago. Then my ISP started providing 10Gbps to the home – so I switched everything to the cloud and never looked back.

Jack