To make the preceding discussions about backup more concrete, in this post I’d like to describe my backup strategy and explain how I got there. There are many ways to do backup, and even many ways that make sense. What I’m doing is not the only good way to solve my particular backup problem. In fact, I’ve changed my strategy over the last few weeks, because writing this series of posts made me think more deeply about what I was doing.

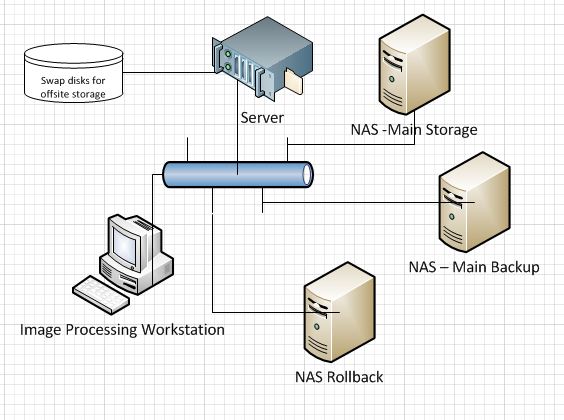

Here is the hardware I’m using for backup:

There are several workstations, but I’ve shown just one to keep the diagram from getting cluttered. There’s a backup server (running Windows Server 2008 R2 and also acting as one of the domain controllers) with USB-attached bare drives that are taken offsite at irregular intervals. There are three NAS boxes, all Synology. The top one acts as the main network file server and the main backup for the workstations. The middle one backs up the top one. The bottom one is used to back up the workstations, and it keeps old versions of files so that, if necessary, we can roll back to them.

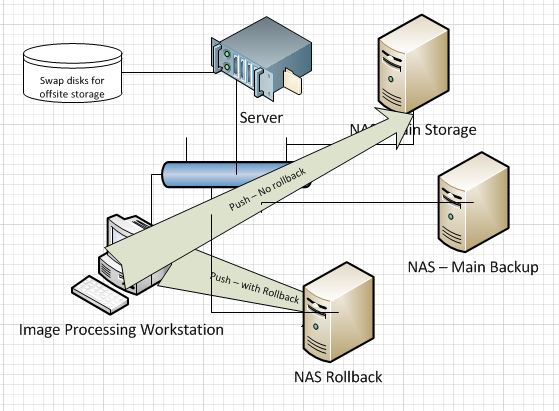

The workstations back themselves up by running GoodSync and pushing to the main storage NAS box and to the rollback one:

I tell GoodSync to back up to the rollback NAS box every time a file changes, and to back up to the main storage every few hours. I figure it’s at least as likely, and probably more likely, that you’ll want an old version of a file a few minutes, or even seconds, after you’ve overwritten it as hours or days later.
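To make the difference between the two kinds of destination concrete, here is a minimal Python sketch. It assumes a simple scheme in which the rollback copy moves the previous version into a hypothetical `_history` folder, with a timestamp suffix, before overwriting it, while the plain copy just overwrites. GoodSync’s own versioning format may well differ; the function and folder names are made up for illustration.

```python
import shutil
import time
from pathlib import Path


def copy_plain(src: Path, dest_dir: Path) -> Path:
    """Copy src into dest_dir, overwriting any existing copy (no old versions kept)."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    target = dest_dir / src.name
    shutil.copy2(src, target)
    return target


def copy_versioned(src: Path, dest_dir: Path, history_name: str = "_history") -> Path:
    """Copy src into dest_dir, first moving any existing copy into a history folder.

    history_name is a hypothetical folder name chosen for this sketch.
    """
    dest_dir.mkdir(parents=True, exist_ok=True)
    target = dest_dir / src.name
    if target.exists():
        history = dest_dir / history_name
        history.mkdir(exist_ok=True)
        # A nanosecond timestamp keeps names unique even for saves moments apart.
        stamp = time.time_ns()
        shutil.move(str(target), str(history / f"{src.name}.{stamp}"))
    shutil.copy2(src, target)
    return target
```

Run on every save, `copy_versioned` leaves one history entry per overwrite, which is why a change-triggered rollback job can hand back a version from seconds earlier, while a job that runs every few hours only ever captures the state at each run.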

I could have used third-party backup software running on the server to back up the workstations, with the profiles that write to the main storage NAS box not keeping old versions of files and the ones that write to the rollback NAS box keeping them. That would have shifted some of the load from the workstations to the server, which in my case would have been a good thing (querying directory and file structures and transferring data make up most of the load, and that work is the same whichever machine does it). A downside of server-mediated backup is increased network traffic, since the data has to travel from each workstation to the server and then on to the NAS boxes. I decided to do things the way I did because I like the way GoodSync does rollback better than the way Vice Versa does it, and I don’t want to spring for the expensive server version of GoodSync.

I could have split the difference and used GoodSync to back up the workstations to the rollback NAS box, and VV running on the server to move the workstation data to the main storage NAS box. That would have given me redundancy that could be useful if one of the backup programs failed, but I’ve seen incomplete backups when two different programs try to back up the same directories at the same time. I haven’t been able to reproduce this problem reliably, and it seems to correct itself over time, but it makes me nervous enough that I’m staying away from that arrangement.

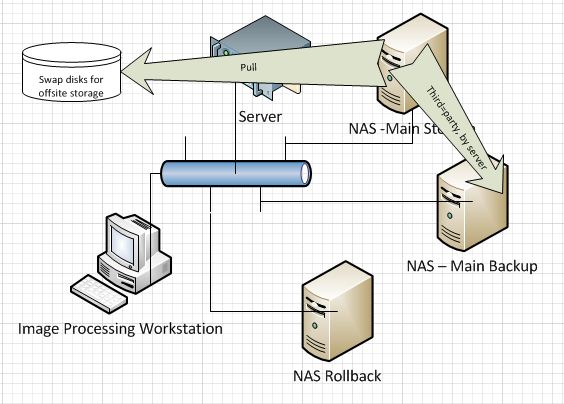

The server runs Vice Versa, and uses it to pull data from the main storage NAS box and write it to the locally-attached disks, and also to take data from the main storage NAS box and write it to the backup NAS box:

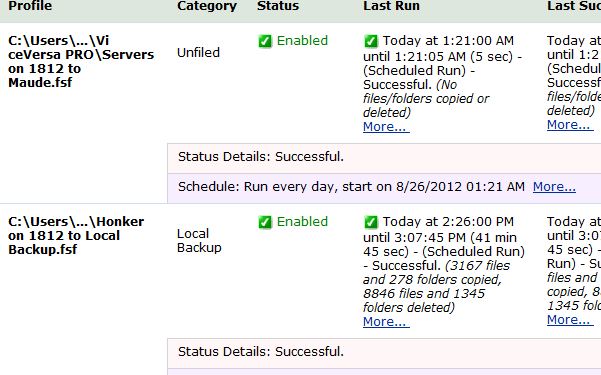

You may note that I have completely separated backups to recover from human error (rollback) and backups to restore data on damaged disks. I didn’t start out to do this, but two things lead me in that direction. One of them was, when I was telling GoodDync to keep old versions of file on all backups, seeing this message from VV when writing data form the main NAS store to the disks destined for offsite storage (look at the second entry):

This left me scratching my head, as I hadn’t done anything recently that should have caused so many files and folders to be deleted. I think a bunch of old files probably timed out, GoodSync removed them from the main NAS store, and so VV had to remove them from the soon-to-be-offsite disks. But I don’t really know, and I don’t want to spend a lot of time with the logs every time this happens. Automatic backup is supposed to make life easier, not harder.
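My guess about the deletions is easier to see with a toy model of a one-way mirror: anything that disappears from the source, including old versions that expired there, gets deleted from the destination on the next run. The sketch below is hypothetical Python, not VV’s actual algorithm; the `_history` folder name and the size-plus-modification-time change test are assumptions made for illustration.

```python
import shutil
from pathlib import Path


def _files(root: Path) -> set[Path]:
    """All regular files under root, as paths relative to root (empty if root is missing)."""
    if not root.is_dir():
        return set()
    return {p.relative_to(root) for p in root.rglob("*") if p.is_file()}


def mirror_plan(src_root: Path, dest_root: Path) -> tuple[list[Path], list[Path]]:
    """Decide what a one-way mirror must copy and delete to make dest match src."""
    src_files = _files(src_root)
    dest_files = _files(dest_root)
    to_copy = []
    for rel in sorted(src_files):
        if rel not in dest_files:
            to_copy.append(rel)
            continue
        s = (src_root / rel).stat()
        d = (dest_root / rel).stat()
        # Assumed change test: a different size or modification time means "changed".
        if s.st_size != d.st_size or s.st_mtime_ns != d.st_mtime_ns:
            to_copy.append(rel)
    # Anything on the destination that no longer exists on the source is deleted,
    # including old versions that expired on the source.
    to_delete = sorted(dest_files - src_files)
    return to_copy, to_delete


def apply_mirror(src_root: Path, dest_root: Path) -> tuple[list[Path], list[Path]]:
    """Carry out the plan. Empty directories left behind are not removed in this sketch."""
    to_copy, to_delete = mirror_plan(src_root, dest_root)
    for rel in to_copy:
        target = dest_root / rel
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src_root / rel, target)  # copy2 preserves the modification time
    for rel in to_delete:
        (dest_root / rel).unlink()
    return to_copy, to_delete
```

Run once before and once after an old version expires on the source, the second pass copies nothing and deletes the expired file from the destination, which is the kind of unexpected deletion a mirror job would report even though nobody deleted anything by hand.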

The other thing that made me separate the functions was thinking about plausible recovery scenarios. Having a NAS box go casters-up and then wanting a previous version of a file from the offsite disks or another NAS box didn’t seem at all likely. It’s been years since I wanted to get a previous version of a file. You could argue that it’s not even worth keeping one set of copies of old files.
