This is the kind of tech-heavy post that I’d normally put in The Bleeding Edge. I’m posting it here because I think the topic is so important to digital photographers. It starts out with a history lesson, but you can ignore that if you’re impatient. Over the next few posts I’ll be dealing with the ramifications of our disk systems’ performance improvements failing to keep up with their massive capacity gains.

I remember my first 300 MB disk. I bought it in the late 80s or very early 90s, when I was first getting into digital photography. It was a 5 ¼ inch full height unit, and weighed a ton. It came in a 2 foot by 2 foot by 2 foot box that was full of gray springy foam, with the disk itself floating in the middle. It cost more than three thousand bucks. It used an eight-bit wide SCSI interface, and I had to buy a SCSI adapter from Adaptec to run it. Still, it was a big improvement over the 40 MB disks that were the standard of the day, themselves a big improvement over the 10 MB disks that first shipped on the IBM PC XT.

Disks have gotten much larger since, with the current high water mark for mainstream products being 4 TB – more than ten thousand times the capacity of my $3K SCSI disk for under a tenth the price. The disks have gotten faster, too, but the speed hasn’t kept up with the size.

The original PC XT 10 MB hard disk, the Seagate ST-412, could perform a seek in an average time of 85 msec. By the end of the 1980s, Seagate had successor products that got that time under 20 msec. The average seek time of today’s 7200 rpm drives is a little under 10 msec. Since the time to reach your data depends heavily on the rotational speed – once the head arrives at the right track you have to wait, on average, half a rotation for the data to come under it – there isn’t a lot of opportunity to drive that time down, although caching can ameliorate the effects for some access patterns. If anything, the situation is getting worse. 15,000 rpm disks have been around for years, and 10,000 rpm used to be something of a server standard, but the bulk of today’s sales is at 7200 rpm or below. In the past few years there has even been a trend towards 5000ish rpm disks for “near-line” (spinning backup) use. By the way, the reason that spindle speeds stopped going up is the same reason CPU clock rates stopped increasing: heat.
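To put numbers on the half-rotation wait, here is a minimal Python sketch. The spindle speeds are the ones mentioned above, except 5400 rpm, which is my assumption for what a "5000ish" drive typically spins at. The function name is just for illustration.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for the data to rotate under the head: half a revolution."""
    ms_per_revolution = 60_000.0 / rpm  # 60,000 ms in a minute
    return ms_per_revolution / 2.0


# 5400 rpm is an assumed stand-in for the "5000ish" near-line drives.
for rpm in (5400, 7200, 10_000, 15_000):
    print(f"{rpm:>6} rpm: {avg_rotational_latency_ms(rpm):.2f} ms average rotational latency")
```

Even the 15,000 rpm drive waits 2 ms on average, and the 7200 rpm drive waits a bit over 4 ms. That delay comes on top of the time needed to move the head, and no improvement in areal density touches it.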

“OK”, I hear you saying, “That’s true about seeks, but that’s not the key spec for photographers: what about transfer rate?” There’s been some improvement there, of course, but the peak transfer rate goes up as the square root of the areal density, whereas the disk capacity rises in proportion to the areal density. As you get smaller bits, you can put more data in one track, and that helps the transfer rate. You can also have more tracks, and that doesn’t do a thing for the transfer rate.
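The square-root relationship can be sketched in a few lines of Python. This is a simplified model, not a description of any real drive: it assumes the platter size, platter count, and spindle speed stay fixed, and that a density gain is split evenly between more bits per track and more tracks. The function name is hypothetical.

```python
import math


def scaling_from_density(areal_density_factor: float) -> tuple[float, float, float]:
    """Return (capacity, transfer rate, full-disk read time) growth factors.

    Simplified model: fixed platter geometry and rpm, with the density gain
    shared equally between bits per track and tracks per inch.
    """
    capacity = areal_density_factor                 # every extra bit counts
    transfer_rate = math.sqrt(areal_density_factor)  # only bits per track help
    full_read_time = capacity / transfer_rate        # time to read the whole disk
    return capacity, transfer_rate, full_read_time


for factor in (4, 100, 10_000):
    cap, rate, read_time = scaling_from_density(factor)
    print(f"density x{factor}: capacity x{cap:g}, transfer x{rate:g}, full read x{read_time:g}")
```

Under this model, every hundredfold gain in density makes the disk a hundred times bigger but only ten times faster, so reading the whole thing takes ten times longer.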

The original PC XT disk had a maximum sustained transfer rate of a little under one megabyte per second. The new Seagate desktop 4 TB disks have a sustained transfer rate of 180 MB/s. That is about a two-hundredfold increase in transfer rate for a 400,000-fold increase in capacity, which leaves the transfer rate more than a thousand times slower than what would be necessary to move data at a pace that kept up with the amount of data.
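One way to feel the gap is to ask how long it takes to read a disk from end to end at its sustained rate. The sketch below uses the figures quoted above, with two assumptions of mine: the old disk's rate is rounded up to 1 MB/s, and capacities are decimal (1 TB = 1,000,000 MB). Real drives slow down toward the inner tracks, so treat these as best-case numbers.

```python
def full_pass_seconds(capacity_mb: float, rate_mb_per_s: float) -> float:
    """Best-case time to read or write every byte once at the sustained rate."""
    return capacity_mb / rate_mb_per_s


# PC XT disk: 10 MB at an assumed 1 MB/s (the post says "a little under").
old_seconds = full_pass_seconds(10, 1.0)
# Current desktop disk: 4 TB (decimal) at 180 MB/s.
new_seconds = full_pass_seconds(4_000_000, 180.0)

print(f"10 MB disk: {old_seconds:.0f} seconds for a full pass")
print(f"4 TB disk:  {new_seconds / 3600:.1f} hours for a full pass")
print(f"Ratio:      about {new_seconds / old_seconds:,.0f} times longer")
```

A full pass over the old disk was a matter of seconds. A full pass over a 4 TB disk takes a good part of a working day even when nothing else slows it down.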

The result? Things take a lot longer than they used to. There have been some changes in the way we use disks that haven’t helped:

- RAID 5 decreases write speed, even as it has the potential to increase read speed

- Network attached disk arrays offer slower transfer speeds than the native drive interfaces

- Likewise USB, although USB 3 goes a long way to fixing this

And there have been things we have done that do help:

- Striped arrays (RAID 0 and 10) are faster in proportion to the number of non-redundant disks involved

- High speed serial interfaces, like Thunderbolt and the newer versions of USB
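To see how far striping can take you, here is a rough Python sketch of the rule of thumb in the list above. It is an idealized upper bound, assuming throughput scales perfectly with the number of non-redundant disks and that the interface and controller are never the bottleneck. The function name and the 180 MB/s per-disk figure (taken from the 4 TB drive mentioned earlier) are illustrative choices.

```python
def ideal_striped_rate_mb_s(per_disk_mb_s: float, total_disks: int, level: str) -> float:
    """Idealized sequential throughput for a striped array.

    Assumes perfect scaling with the number of non-redundant disks.
    """
    if level == "raid0":
        data_disks = total_disks           # every disk holds unique data
    elif level == "raid10":
        if total_disks % 2 != 0:
            raise ValueError("RAID 10 needs an even number of disks")
        data_disks = total_disks // 2      # half the disks are mirrors
    else:
        raise ValueError(f"unsupported level: {level}")
    return per_disk_mb_s * data_disks


for disks in (2, 4, 8):
    rate = ideal_striped_rate_mb_s(180.0, disks, "raid0")
    print(f"RAID 0, {disks} disks: up to {rate:.0f} MB/s ({disks}x one disk)")
```

Even an eight-disk stripe buys less than one order of magnitude in the best case.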

All of these changes, good and bad, don’t make much difference when faced with a thousandfold challenge.

I had this all driven home to me recently. I had a five-bay Synology box stuffed full of 2 TB Western Digital disks and configured as RAID 5, for a total capacity of 8 TB. I was using the box for backup, and it was full. I bought a set of 4 TB Seagate drives, ripped out the WD ones, and initialized the software, picking the same RAID 5 configuration for a total of 16 TB. I named the box Daisy (sticking with the Downton Abbey theme), joined it to the domain, and started copying over one of the directories from Anna, a file server.

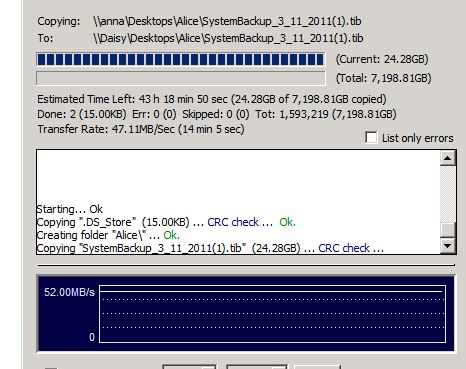

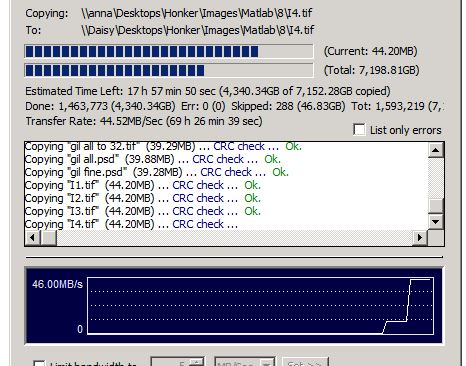

Vice Versa said the operation would take a couple of days. Turns out it was optimistic. Here’s what it looks like now:

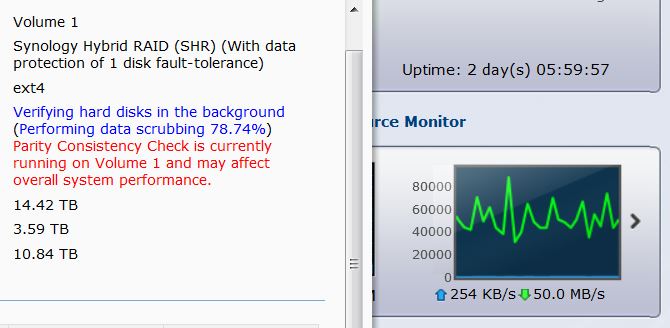

And that Synology array I created? It did data scrubbing for almost three days.

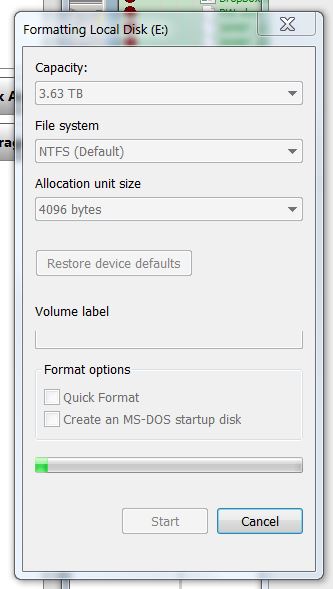
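For anyone checking the capacity figures for the Synology box, RAID 5 gives up one disk's worth of space to parity. A minimal sketch, ignoring filesystem overhead and the difference between decimal and binary terabytes (the function name is mine):

```python
def raid5_usable_tb(num_disks: int, disk_tb: float) -> float:
    """Usable capacity of a RAID 5 array: one disk's worth goes to parity."""
    if num_disks < 3:
        raise ValueError("RAID 5 needs at least three disks")
    return (num_disks - 1) * disk_tb


print(f"5 x 2 TB: {raid5_usable_tb(5, 2):g} TB usable")
print(f"5 x 4 TB: {raid5_usable_tb(5, 4):g} TB usable")
```

That matches the 8 TB before the upgrade and the 16 TB after it.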

Want another data point? I attached a 4 TB Seagate drive to a computer via USB 3 for offsite backup. The quick format failed twice in a row, so I told Windows to do a full format. Here’s what it looked like after 19 hours:

It looks like it’s going to take several days.

The trends are clear; unless we do something differently, things are going to continue to get worse. What can be done? Stay tuned.
