Yesterday I reported on the amount of noise, and the spectra, of noisy test images exported from Lightroom with sharpening turned off. Today we’ll see what happens when it’s turned on.

Here’s the test protocol. The target image is a 4000×4000 sRGB image, with each plane filled with a constant signal of half scale (0.5, 127.5, or 32767.5, depending on how you think of it) plus Gaussian noise with a standard deviation of 1/10 scale. The image was created in Matlab, written out as a 16-bit TIFF, and imported into Lightroom. It was then downsized by various amounts as it was exported as a 16-bit TIFF, read back into Matlab, and its green plane analyzed there. All three export sharpening amounts (Low, Standard, and High) were tested.
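For anyone who wants to play with the setup outside Matlab, here is a minimal Python sketch of one plane of the target and of what an idealized downsizing does to its noise. The 256×256 size, the seed, and the block-averaging `box_downsample` resampler are all assumptions for illustration; Lightroom’s resampling algorithm isn’t specified here, so its exported noise needn’t follow the simple 1/k white-noise rule this sketch shows.

```python
import random
import statistics

def make_test_plane(size=256, mean=0.5, sigma=0.1, seed=1):
    """One plane of the target: constant half scale plus Gaussian noise, as 16-bit codes.

    Values are clipped to 0..1 and quantized to 0..65535, as a 16-bit TIFF stores them.
    size=256 (not 4000) is an assumption to keep pure Python quick.
    """
    rng = random.Random(seed)
    return [[round(min(max(mean + rng.gauss(0.0, sigma), 0.0), 1.0) * 65535)
             for _ in range(size)]
            for _ in range(size)]

def box_downsample(plane, k):
    """Hypothetical resampler: downsize by integer factor k by averaging each k x k block."""
    n = len(plane) // k
    return [[sum(plane[y * k + dy][x * k + dx] for dy in range(k) for dx in range(k)) / (k * k)
             for x in range(n)]
            for y in range(n)]

plane = make_test_plane()
results = {}
for k in (1, 2, 4):
    small = box_downsample(plane, k)
    values = [v / 65535 for row in small for v in row]  # back to a 0..1 scale
    results[k] = statistics.pstdev(values)
    print(f"{k}x downsize: std = {results[k]:.4f}, white-noise prediction {0.1 / k:.4f}")
```

Averaging k×k independent samples divides the standard deviation by k, so with this assumed resampler the 1x, 2x, and 4x results should land near 0.1, 0.05, and 0.025.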

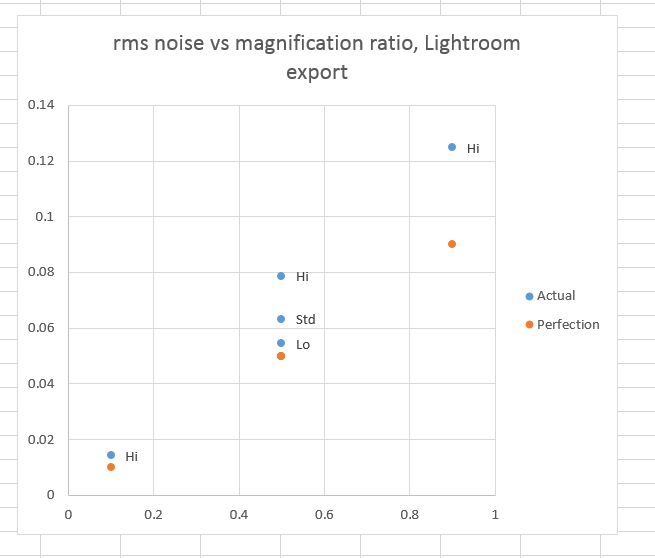

RMS noise (aka standard deviation) of the downsampled images, as measured in sRGB’s gamma-2.2 encoding:

As expected, the sharpening increases the noise, with more sharpening increasing the noise more.
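Why sharpening raises the noise can be seen with a generic unsharp mask. The sketch below assumes a 3×3 box blur and a hypothetical `amount` parameter; Lightroom’s actual export-sharpening kernel isn’t described here, so this illustrates the mechanism, not Lightroom’s filter. For white noise, each output pixel is a weighted sum of independent samples, so the standard-deviation gain is the root sum of squares of the kernel taps, and it grows with `amount`.

```python
import math
import random
import statistics

def unsharp_mask(img, amount):
    """Generic unsharp mask: out = img + amount * (img - box3x3(img)).

    The 3x3 box blur is an assumed stand-in; border pixels are dropped, not padded.
    """
    h, w = len(img), len(img[0])
    out = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            blur = sum(img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)) / 9.0
            row.append(img[y][x] + amount * (img[y][x] - blur))
        out.append(row)
    return out

def predicted_gain(amount):
    """Noise std gain for white input: center tap 1 + 8a/9, eight neighbour taps of -a/9."""
    return math.sqrt((1 + 8 * amount / 9) ** 2 + 8 * (amount / 9) ** 2)

rng = random.Random(2)
noise = [[rng.gauss(0.0, 0.1) for _ in range(200)] for _ in range(200)]
base = statistics.pstdev([v for row in noise for v in row])

gains = {}
for amount in (0.0, 0.5, 1.0):
    sharp = unsharp_mask(noise, amount)
    gains[amount] = statistics.pstdev([v for row in sharp for v in row]) / base
    print(f"amount {amount}: measured gain {gains[amount]:.3f}, predicted {predicted_gain(amount):.3f}")
```

The predicted gains are 1.000, about 1.453, and about 1.915 for amounts of 0, 0.5, and 1, and the measured values should track them closely.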

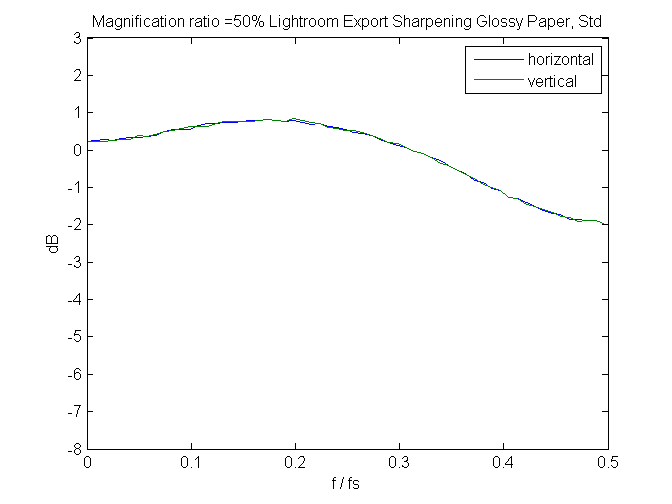

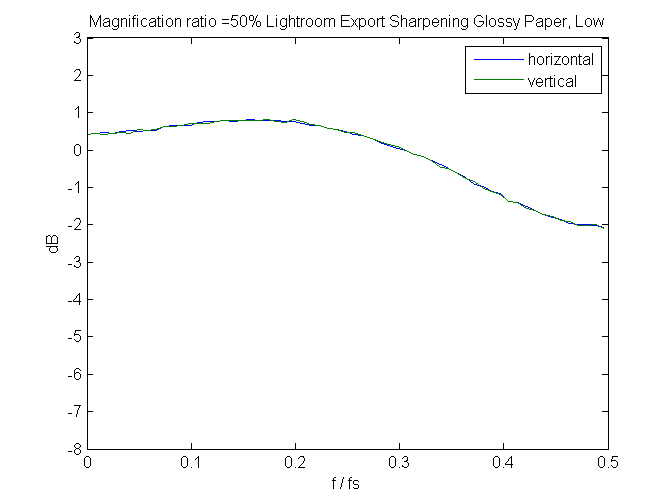

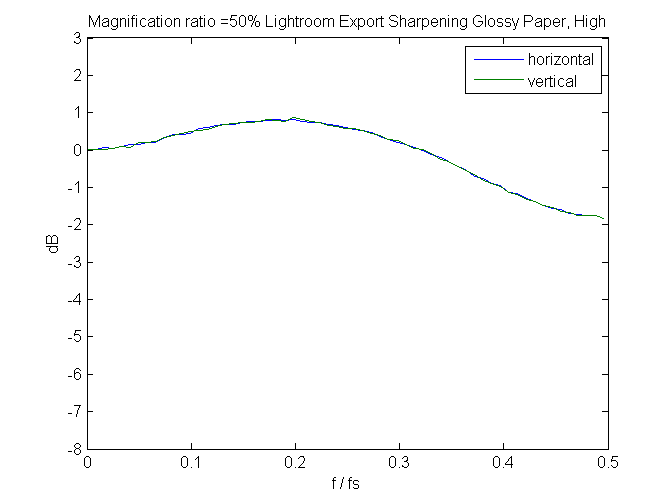

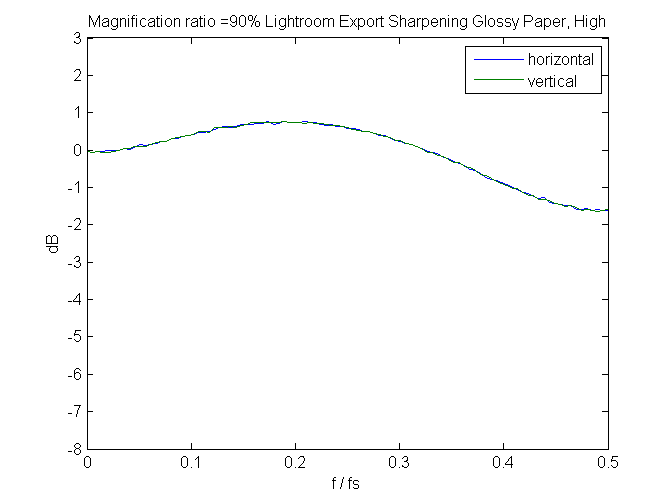

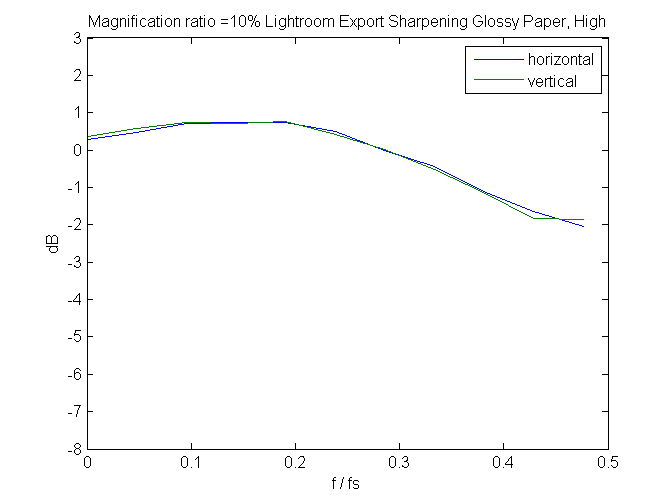

What do the spectra look like?

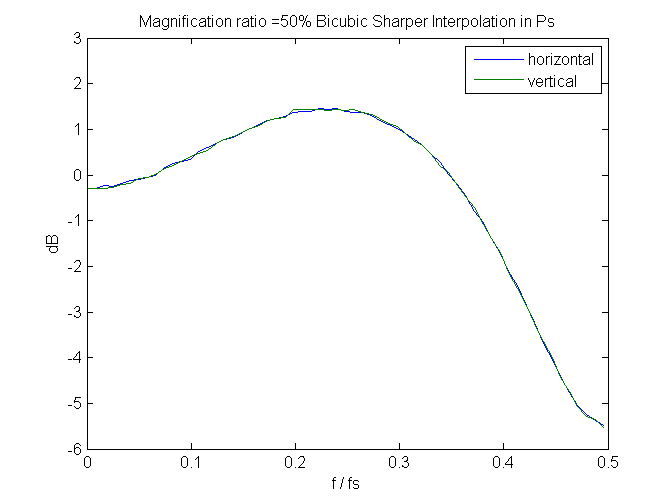
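The Matlab code behind these spectra isn’t shown, so here is a hedged Python sketch of one common way to estimate a noise spectrum: average the periodograms of the image rows. It compares white noise before and after a hypothetical 1-D unsharp mask (`sharpen_rows`, an assumed stand-in for Lightroom’s filter). Because the assumed filter is linear and wraps at the row ends, the sharpened-to-plain ratio in each bin equals the filter’s squared response H(f)², which rises toward Nyquist; that high-frequency lift is the signature of sharpening in a noise spectrum.

```python
import cmath
import math
import random

def row_power_spectrum(img):
    """Average periodogram of the rows of img, bins 0..n/2, with each row's mean removed.

    Scaled so white noise with variance s**2 sits at about s**2 in every non-DC bin.
    Uses a naive O(n^2) DFT for clarity rather than speed.
    """
    n = len(img[0])
    nbins = n // 2 + 1
    acc = [0.0] * nbins
    for row in img:
        m = sum(row) / n
        centered = [v - m for v in row]
        for k in range(nbins):
            s = sum(c * cmath.exp(-2j * math.pi * k * t / n) for t, c in enumerate(centered))
            acc[k] += abs(s) ** 2 / n
    freqs = [k / n for k in range(nbins)]  # cycles per pixel
    return freqs, [a / len(img) for a in acc]

def sharpen_rows(img, amount):
    """Assumed 1-D unsharp mask along rows: x + amount * (x - box3(x)), wrapping at the ends."""
    out = []
    for row in img:
        n = len(row)
        out.append([row[i] + amount * (row[i] - (row[i - 1] + row[i] + row[(i + 1) % n]) / 3)
                    for i in range(n)])
    return out

def response(f, amount):
    """Frequency response H(f) of sharpen_rows at f cycles per pixel."""
    return 1 + amount * (1 - (1 + 2 * math.cos(2 * math.pi * f)) / 3)

rng = random.Random(3)
noise = [[rng.gauss(0.0, 0.1) for _ in range(64)] for _ in range(128)]
freqs, plain = row_power_spectrum(noise)
_, sharp = row_power_spectrum(sharpen_rows(noise, 1.0))

for k in (1, 8, 16, 24, 32):
    print(f"f = {freqs[k]:.3f} cy/px: plain {plain[k]:.5f}, sharpened {sharp[k]:.5f}, "
          f"ratio {sharp[k] / plain[k]:.2f}, H(f)^2 = {response(freqs[k], 1.0) ** 2:.2f}")
```

The plain spectrum should sit near 0.01 (the variance of noise with a standard deviation of 0.1) at all frequencies, while at Nyquist the sharpened one is boosted by (1 + 4/3)², about 5.44, for an amount of 1.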

The filters are quite restrained, especially when compared to the meat axe that is Photoshop’s Bicubic Sharper:

Things look pretty much the same at other downsizing ratios:
