This is a continuation of a test of the following lenses on the Sony a7RII:

- Zeiss 85mm f/1.8 Batis.

- Zeiss 85mm f/1.4 Otus.

- Leica 90mm f/2 Apo Summicron-M ASPH.

- AF-S Nikkor 85mm f/1.4 G.

- Sony 90mm f/2.8 FE Macro.

The test starts here.

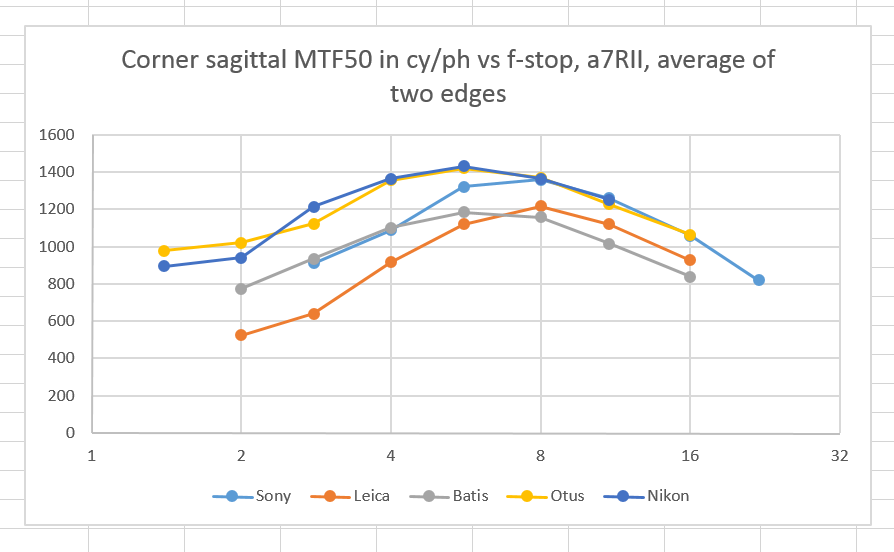

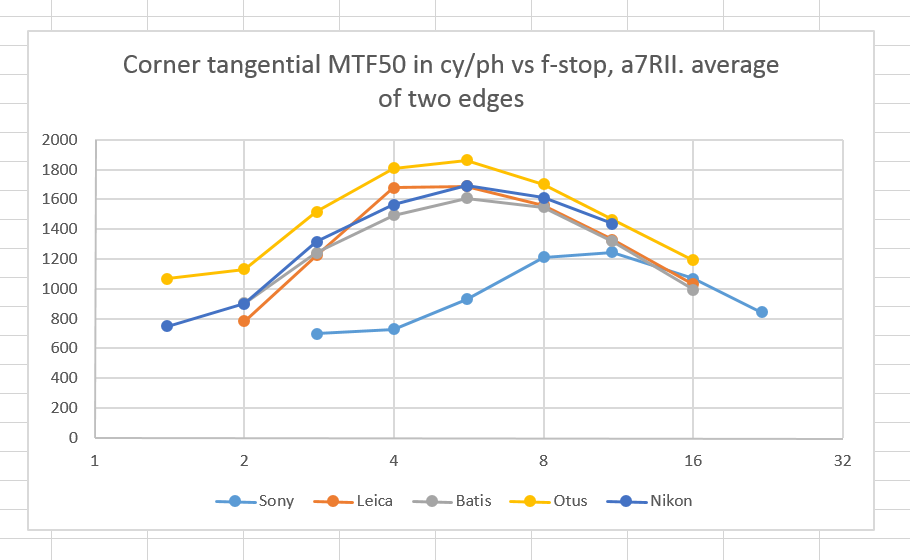

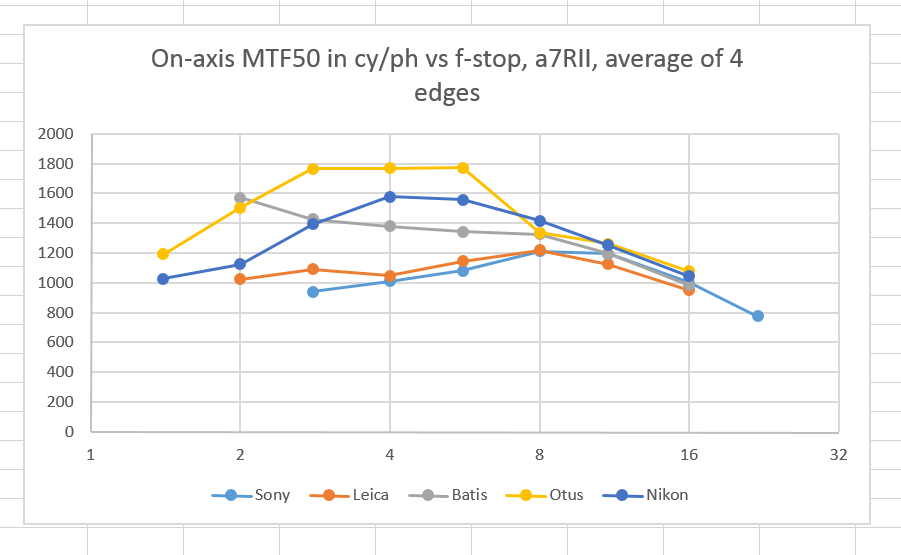

I reprocessed the 3.5-meter DCRAW-developed test shots from the lateral chromatic aberration (LaCA) studies to look at where the modulation transfer function (MTF) reached half of its zero-frequency value. This is called MTF50. These images were created by placing the target in the corner of the image. The target has four edges. Two are oriented in the direction from the corner of the image to the center. These can be used to measure what lens designers call the sagittal MTF50. The two edges perpendicular to those allow measurement of the tangential (or meridional) MTF50.
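For readers who want to see what "half of its zero-frequency value" means in practice, here is a minimal Python sketch. It is not the code used to produce the plots in this post; the function name and the sample curve are made up for illustration, and it assumes the MTF has already been sampled at increasing spatial frequencies starting at zero.

```python
def mtf_threshold_frequency(freqs, mtf, fraction=0.5):
    """Lowest frequency at which the MTF falls to `fraction` of its
    zero-frequency value, found by linear interpolation between samples.

    freqs: increasing spatial frequencies, starting at 0
    mtf:   MTF values at those frequencies
    Returns None if the curve never drops to the threshold.
    """
    target = fraction * mtf[0]
    for i in range(1, len(freqs)):
        if mtf[i] <= target:
            f0, f1 = freqs[i - 1], freqs[i]
            m0, m1 = mtf[i - 1], mtf[i]
            if m0 == m1:  # flat segment sitting on the threshold
                return f1
            # Linear interpolation between the two bracketing samples
            return f0 + (m0 - target) * (f1 - f0) / (m0 - m1)
    return None


# Hypothetical curve, frequencies in cycles per pixel (illustration only)
sample_freqs = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
sample_mtf = [1.0, 0.8, 0.6, 0.4, 0.2, 0.1]

mtf50 = mtf_threshold_frequency(sample_freqs, sample_mtf, 0.5)
mtf30 = mtf_threshold_frequency(sample_freqs, sample_mtf, 0.3)
print(f"MTF50 = {mtf50:.2f} cycles/pixel, MTF30 = {mtf30:.2f} cycles/pixel")
```

The same function gives MTF30 by passing `fraction=0.3`. Real slanted-edge tools do considerably more work before this step (edge finding, oversampling, differentiation, Fourier transform); this only shows the final threshold lookup.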

I’m reporting the MTF50 numbers in cycles per picture height. The height of the a7RII image is 5320 pixels. Divide the numbers on the vertical axis by that if you are more comfortable in cycles per pixel. If you like lines per picture height, double all the numbers on the y axis.
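The unit conversions described above can be sketched in a few lines of Python. The function names and the example reading are hypothetical; the only number taken from the text is the 5320-pixel picture height.

```python
PICTURE_HEIGHT_PX = 5320  # a7RII image height in pixels, as stated in the text


def cyph_to_cycles_per_pixel(cyph, height_px=PICTURE_HEIGHT_PX):
    """Convert cycles per picture height to cycles per pixel."""
    return cyph / height_px


def cyph_to_lines_per_picture_height(cyph):
    """Each cycle is one dark line plus one light line, so double it."""
    return 2 * cyph


example_cyph = 1330.0  # made-up reading, not a measured value
print(cyph_to_cycles_per_pixel(example_cyph))          # cycles per pixel
print(cyph_to_lines_per_picture_height(example_cyph))  # lines per picture height
```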

Note that DCRAW doesn’t do any sharpening, so these numbers will look low compared to those for images that are developed in Lightroom using the default settings, which include deconvolution sharpening. Note also that I used a high-contrast target, which I normally don’t use for MTF testing, which will make the numbers somewhat higher than you’d get with a low-contrast target. I used the high-contrast target so I could get the LaCA results from the same set of exposures.

Here are the sagittal curves:

As we saw in the LaCA studies, the Summicron struggles in the corners on the test bench. The fact that the Summicron images look so good raises the possibility that there are things we should be testing for that we don’t consider.

It’s no surprise that the Otus does so well here, but it is a surprise to me that the Nikon matches it step for step. At f/8 and beyond, the Sony 90 macro is also right in there. The Batis starts out mid-pack at wide apertures, and ends up in last by a bit at the narrow ones.

The tangential curves are different:

All the lenses are more-or-less clustered together except for the Sony macro and the Otus. The Otus is a clear winner.

Now let’s look on-axis. Before you jump to the curves please read the rest of this paragraph. Measuring on-axis performance of top-notch lenses at their optimum f-stops is hugely dependent on accurate focusing. Even with the Sony’s excellent focus aids, I have been unable to consistently duplicate on-axis MTF50 with the Otus 55 and the Otus 85. Therefore, the numbers at wide apertures should be taken with a reasonably large quantity of salt. I focused wide open in all cases, so focus shift could play a role in the results as well, although in my testing of the Otus, I found the most accurate way to focus at the best apertures was to focus wide open.

There’s a lot to see here. The first thing that jumped out at me was the Batis f/2 number, which is slightly better (a tie, really) than the Otus. This is not a glitch. All four edges gave similar results, and all four edges of the not-shown f/1.8 shot were only slightly worse. I think the reason has to be better focus with the Batis. Remember what I said in the distant landscape testing about the Batis and the Sony being easy to focus precisely? When focusing on the Siemens star, the Batis was far and away the easiest and most repeatable in the focusing department. The Otus felt the best, of course, and was probably second, but small motions of the Otus long-throw focusing ring produced large variations in the moire patterns that I was using to focus. The Sony was next, by a hair, although it wasn’t anywhere near as much fun, and would be worse if you were in a big hurry. The Nikon was quite a bit worse. The Leica was really tricky to focus. Many times, I’d think I had it close to nailed, and merely taking my hand off the ring changed things. The rangefinder-oriented short focus throw is a real problem when you want focus bang-on.

The Otus is a clear winner overall, in spite of probably not being focused as well as the Batis. The Nikon acquits itself well here. The Sony macro and the Leica produce about the same so-so numbers; well, so-so in this field, anyway.

One of the questions this and the two previous CA tests leave me with is: “Why does the Summicron look so good and measure so bad?”

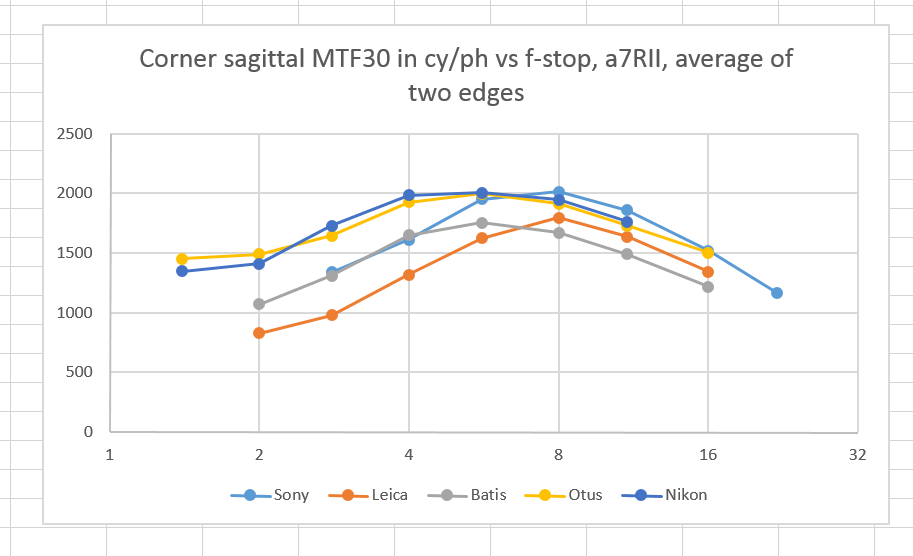

Here are the results at MTF30:

No surprises, except take a look at the Otus numbers for on-axis MTF30 at f/2.8, f/4, and f/5.6! They are essentially at the monochrome Nyquist limit for the a7RII sensor, which means that with the right (or the wrong, depending on how you look at it) subject, the Otus on the a7RII is going to alias all over the place.
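To put the Nyquist remark in numbers: a sampled image can represent at most half a cycle per pixel, so the limit in cycles per picture height is half the pixel height. Here is a small Python sketch of that arithmetic. The helper names and the sample reading are hypothetical, and it ignores the Bayer color filter array, which is what "monochrome" means above.

```python
PICTURE_HEIGHT_PX = 5320  # a7RII image height in pixels, as stated in the text


def nyquist_cyph(height_px=PICTURE_HEIGHT_PX):
    """Monochrome Nyquist limit: 0.5 cycles/pixel, in cycles per picture height."""
    return 0.5 * height_px


def fraction_of_nyquist(cyph, height_px=PICTURE_HEIGHT_PX):
    """How close a measured spatial frequency is to the Nyquist limit."""
    return cyph / nyquist_cyph(height_px)


limit = nyquist_cyph()
print(f"Nyquist limit: {limit:.0f} cycles per picture height")

reading = 2500.0  # made-up MTF30 reading, not a measured value
print(f"{reading:.0f} cy/ph is {fraction_of_nyquist(reading):.0%} of Nyquist")
```

When the lens still delivers 30% contrast at or near that limit, detail finer than the sensor can sample reaches the pixels with real contrast, and that is the recipe for aliasing.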

David Braddon-Mitchell says

Jim, is your judgement that the Summicron looks so good based on the infinity crops you showed us, or on stuff at the same distance as these MTFs? If the former, maybe it doesn’t do as well at 3.5 metres.

By the way, I’m now intrigued: the Sony is producing so-so (compared to this company) results at 3.5 metres.

This raises some interesting questions.

It’s the sharpest lens DXO have ever tested (and they have some pretty good info and I think OK methodology, if you ignore the headline weighted numbers that people get upset about).

Now as far as I know they haven’t tested the Summicron.

But they have tested the Sony and the Otus.

The Sony was tested on the A7r, and I compared that to their figures for the other two on the D800E, as that is (probably) the very same, no AA filter, sensor as the original A7r.

One of the numbers they give you is peak sharpness: this is the figure got from MTF of the on-axis test of the sharpest aperture. (It’s hard to find their methodology; the fact that this is *peak* sharpness is buried info, and causes a lot of confusion.)

They give the ranking of peak sharpness as Otus, Sony, Nikon – about evenly spaced. This reverses the order of the Sony and Nikon for you.

I wonder – did they slip up, do they have a somewhat worse Nikon, or do you have a somewhat worse Sony?

Anyway, with lenses as good as this, immaterial except for the fun of it…

Jim says

I suppose I could have a bad copy of the Sony macro, but it doesn’t show any obvious symptoms of decentering. That’s the problem with testing with only a single copy of a lens, but I’d go nuts trying to test with 16 copies of each, even if I could round them all up. Do you know what Roger C has to say about the Sony 90 macro?

David Braddon-Mitchell says

I don’t think he ever put it on the optical bench; he did do a brief MTF comparison (from IMATEST I think) when it first came out. He was very surprised by how much better it did than the Canon L on the 5DIII. He expected good results (in part because of testing it on the A7r with its higher rez sensor) but even so he said he was surprised.

Still, that doesn’t tell us much, since there is quite a sensor difference.

One odd feature of the Sony I have found is that it seems to be unusually sensitive to leaving on the IS when it’s on a tripod. Can’t think why that would be so.

Jim says

I did manage to get that turned off in spite of my not being used to the switch on the lens overriding the in-camera SS setting.

Jim says

Note that the Sony has the best sagittal MTF30 at f/8, and ties for best MTF50 at that stop.

Christoph Breitkopf says

The Sony 90 never was the sharpest lens DXO tested – it was actually at place 14 at the time most people were thinking that it was at 1: https://plus.google.com/112823570007766118485/posts/j3aK37qLq3q

And since DXO isn’t saying, we can’t be sure if even place 14 was at least partially due to Sony “improving” the raw files. Roger’s optical bench results were not quite as impressive (though certainly not bad): https://www.lensrentals.com/blog/2015/10/sony-e-mount-lens-sharpness-bench-tests

Of course, as DXO is actually testing the lens+sensor combination, the “top” results have changed quite a bit since they added Canon 5DS R results.

David Braddon-Mitchell says

By the way, one really interesting thing about your test is seeing into the focus dependence of these results. There’s no reason to suppose that professional reviewers are any better at focussing, and every reason to suppose they are more strapped for time. That should give easier to focus lenses a big leg up.

I know some people use focus bracketing where they focus once, have the camera on a rail, and have some method for micro-racking of the camera by repeatable increments and then choose the best results.

Jim says

You got me thinking with that one. The focus effects are so twitchy with the Otus that moving the ring wouldn’t be a decent way to do focus bracketing. I’d have to use a geared rail. Hmmm….

David Braddon-Mitchell says

Well, the RSS rail moves 1.25mm per revolution of the screw. The Kirk one (http://www.kirkphoto.com/Focusing_Rail_FR-2.html), though, is 1.07mm per revolution. Assuming you can gauge a half revolution accurately, that gives about .5mm fairly reliably. Even less if you can do quarter turns. Is that enough?

Jim says

Boy I hope so.

Christoph Breitkopf says

Easily. After playing around with a DOF calculator, I think 1cm steps would be more appropriate. Even setting the target circle-of-confusion to the sensor pixel pitch, I still get about 2cm DOF at f/2.8.

But I’m fine with Jim trying 1mm steps as long as we get the results *soon* 😉

NicoG says

Hi,

In fact Roger C did evaluate the sample variation of various Sony FE lenses, including the FE90 (can’t recall the link, and the website seems to be off tonight).

He found that, at infinity, this lens has a not-so-shining MTF and large sample variation, not only in the corner results but also in the center. In the comments on that blog post, Roger’s colleague recently stated that they would soon also test the lenses at a closer distance.

Anyway, I wonder how DXO managed to get such a good result with that lens (correct me if I’m wrong, but I think they also base their methodology on several lens samples?)

David Braddon-Mitchell says

Here’s Roger’s first test: nothing but good news for the lens, but by no means definitive. This is taken at IMATEST distances – medium close

http://www.lensrentals.com/blog/2015/05/sony-fe-90mm-f2-8-g-oss-resolution-test

Here’s his bench test at infinity:

http://www.lensrentals.com/blog/2015/10/sony-e-mount-lens-sharpness-bench-tests

This is interesting: it shows (just like Jim’s tests) good but not class leading quality at infinity. That’s consistent with my observations of great results closer.

BUT the sample variation figures are interesting – they show variation in the overall quality *without* difference in the centring. Which (if true) could explain how there can be slightly less good copies out there which don’t show up as skewed or decentred.

Certainly my first copy was bad overall AND skewed with a terrible right side. Replacement is gold. But it is a bit annoying, especially for me here in Oz where getting stores to take things back involves sending them copies of the Consumer Rights act and generally sounding lawyerish. Or buying from Adorama and wearing a substantial postage charge if you need to return.

NicoG says

here is the link:

https://www.lensrentals.com/blog/2015/10/sony-e-mount-lens-sharpness-bench-tests

and here is what Roger Cicala and Aaron Closz say:

“The 90 mm f/2.8 is a decent lens, reasonably sharp, but there seems to be a fair bit of copy-to-copy variation in overall sharpness.”

Rob says

You ask the question ‘why does the Leica look so good and measure so bad?’ Isn’t it quite likely that the measured differences in sharpness are ‘not’ something that we can actually ‘see’?

Erik Kaffehr says

Hi Jim,

“One of the questions this and the two previous CA tests leaves me with is: “Why does the Summicron look so good and measure so bad?””

Could it be that a lens with a bit less MTF at Nyquist plays better with a non-OLP-filtered sensor?

Best regards

Erik

Jim says

If that’s all that was going on, all we’d have to do is stop way down.