This is the 11th in a series of posts on the Sony a9. The series starts here.

Warning: this is yet another techie post about esoteric aspects of camera sensor analysis and modeling. There is not much to learn here that applies to general photography. If you aren’t interested in these things for their own sake, do yourself a favor and skip this.

A Sony engineer, Hayato Wakibayashi, has patented some interesting tricks that might be used in the a9. Some thought these might explain the anomalies described in the previous post. In the patent, the inventor describes an architecture that, among other things, allows a group of analog-to-digital converters (ADCs) to operate on separate sensor pixels in parallel when speed is of the essence, and all together on the same pixel to reduce read noise. You could imagine that, if 12-bit ADCs were used in groups of four, they could operate on four separate pixels at a time in the a9’s continuous shutter mode, and on a single pixel, with their outputs added together, in single shot mode. The latter scheme would produce a 14-bit output.
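The 14-bit figure follows from simple arithmetic, which a few lines of Python can confirm. This is only an illustration of the counting argument; the function name and its defaults are my own and don't come from the patent.

```python
def summed_adc_range(bits_per_adc=12, n_adc=4):
    """Largest possible sum of n_adc codes, and the bits needed to hold it."""
    max_code = (1 << bits_per_adc) - 1   # 4095 for a 12-bit ADC
    max_sum = n_adc * max_code           # all ADCs at full scale
    return max_sum, max_sum.bit_length()

print(summed_adc_range())  # (16380, 14)
```

Four full-scale 12-bit codes sum to 16380, just under the 14-bit maximum of 16383.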

I decided to simulate their approach, assuming an implementation like that described above. But first, I did some looking at the photon transfer curves of the a7 in continuous shutter mode. The one at ISO 100 was particularly interesting. I’ll just show you the darkest part:

See that “rippling”? That’s the same kind of thing I showed you in the last post from the simulation run where I used a 12-bit ADC and cut the post-amp read noise in half:

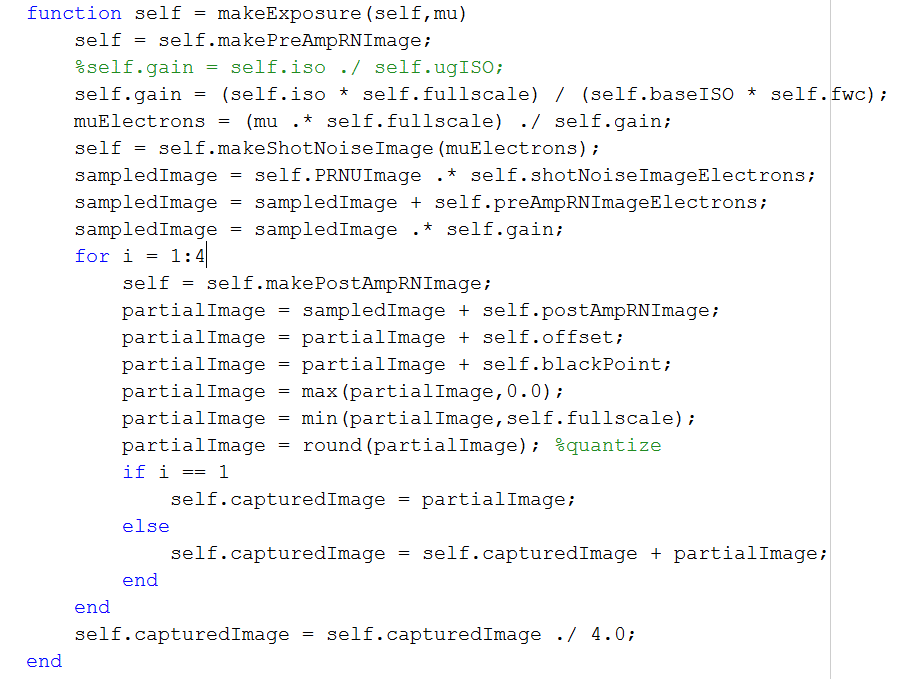

I set up the simulator to use one sample for each pixel with the photon noise and the pre-amp read noise added in, but to then do four 12-bit conversions on each one and average the results, which is a scaling factor away from adding them together. If you’re interested, here’s the code:
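For readers who want to experiment, here is a minimal Python sketch of that scheme. It is not the code used for the plots in this post. The function names, the Gaussian approximation to photon noise, the ideal round-and-clip quantizer, and the black level of 128 are all assumptions made for illustration.

```python
import random
import statistics

def quantize(value, bits=12):
    """Ideal ADC: round to the nearest code, then clip to the code range."""
    return min(max(round(value), 0), (1 << bits) - 1)

def convert_pixel(signal_e, gain, pre_amp_rn, post_amp_rn,
                  n_adc=4, bits=12, black_level=128, rng=random):
    """Return the average of n_adc conversions of one sampled pixel."""
    # One analog sample per pixel: photon noise (Gaussian approximation)
    # plus pre-amp read noise, both in electrons.
    sample_e = rng.gauss(signal_e, signal_e ** 0.5) + rng.gauss(0.0, pre_amp_rn)
    # Convert to 12-bit LSBs; black_level is an assumed offset that keeps
    # dark-frame noise from clipping at zero.
    analog = sample_e * gain + black_level
    # Every conversion sees the same sample but its own post-amp noise.
    codes = [quantize(analog + rng.gauss(0.0, post_amp_rn), bits)
             for _ in range(n_adc)]
    return sum(codes) / n_adc

# Dark frame with 2 LSB of post-amp noise per conversion and nothing else.
rng = random.Random(1)
dark = [convert_pixel(0.0, 1.0, 0.0, 2.0, rng=rng) for _ in range(20000)]
print(statistics.pstdev(dark))  # expect roughly 1 LSB after averaging four conversions
```

Multiplying the returned average by four gives the summed, 14-bit-range value instead.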

I set the post-amp read noise to twice what I’d measured in the camera’s 14-bit mode, since averaging four conversions should reduce that noise by a factor of two (the square root of four). But then I divided that number by 4, since we’re talking 12-bit precision, not 14-bit, and a 12-bit LSB is four times the size of a 14-bit one. I ran the sim for ISO 100 through 500, and plotted the results together with those from the real camera from ISO 640 on up:
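The read-noise scaling just described can be written out in a few lines of Python. This is a sketch under two assumptions of mine: that the measured figure is expressed in 14-bit LSBs, and that the noise in the four conversions is independent. The function name is hypothetical.

```python
import math

def per_conversion_noise_12bit(measured_rn_14bit_lsb, n_adc=4, bit_difference=2):
    """Post-amp read noise to inject per 12-bit conversion, in 12-bit LSBs."""
    # Averaging n independent conversions cuts the noise by sqrt(n), so each
    # conversion must start with sqrt(n) times the measured value.
    before_averaging = measured_rn_14bit_lsb * math.sqrt(n_adc)
    # One 12-bit LSB spans 2**2 = 4 14-bit LSBs at the same full scale.
    return before_averaging / (2 ** bit_difference)

print(per_conversion_noise_12bit(1.0))  # 0.5
```

The net effect is that the number entered for each 12-bit conversion is half the figure measured in 14-bit mode.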

Now let’s compare that with the same plot from the real camera:

Two things are clear to me:

- I got about the right amount of noise. Well, maybe it’s a little high, but it’s in the ballpark. Extra credit for the noise that I put in being a tad more than I see when the camera is in continuous mode.

- There are no “kinks” in the simulated curves, so multiple ADCs are not a way to explain the kinks in the real camera’s curves.

Let’s look first at the simulated ISO 100 PTC:

And the real one:

Again, it looks like my post-amp read noise is a little high, but the curves look otherwise alike. There is no “ripple” in either case. The read noise seems to supply enough dither.

Does this prove that Sony is using the technology described in the patent above in the a9? No, it does not. But it certainly doesn’t disprove it, and it is a plausible idea. It’s also a plausible idea for a7c cameras, and could explain why the read noise in continuous shutter mode is so much higher than in single shot mode.

As an aside, if you’ve read the patent and can tell me a combination of switches 131, 132, and 133 that connects one pixel to both ADCs and doesn’t load the source follower with two constant-current sources, I’d be interested. If you can’t, do you think it’s an error in the patent?

BarsMonster says

I believe patents are not always 100% detailed, hence the absence of details around current sources.

To see some weird effects of multiple ADC sampling in your simulation, you might need to include a more realistic ADC model with uneven (non-ideal) steps. Even very good ADCs typically have systematic errors of around 0.25 LSB. This error might have a complex distribution across the ADC’s range and will differ among ADC instances.