Note: this post has been extensively revised.

I keep reading assertions like this one that was just posted here:

> When saving and opening a JPEG file many times in a row, compression will ruin your image.

I knew from hanging around with some of the IBMers working on the original JPEG standard that recompressibility with no change was one of the objectives of the standard. So what happened?

- Has the standard changed in that regard?

- Are people improperly implementing the standard?

- Is the above quoted statement wrong?

I ran a test.

1. I opened a GFX 50R .psd file in Photoshop, flattened it, and saved it as a TIFF.

2. I closed the file.

3. I opened it in Matlab, and saved it as a JPEG with the default quality setting.

4. I opened the JPEG in Matlab.

5. I converted the file to single precision floating point, and back to unsigned integer with 8 bits of precision.

6. I changed a pixel in the lower right corner to a value equal to the iteration number.

7. I saved that file as a JPEG under a new name.

8. I repeated steps 4 through 7 a total of 100 times, giving me 100 JPEG files created serially from the original TIFF.

9. I analyzed differences among the files as described below.
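The save-and-reopen loop in the procedure above can be imitated on a single 8×8 block without any imaging library. This is only a rough sketch under stated assumptions, not the Matlab code used for the test: it handles one grayscale block, uses a single uniform quantization step `q` in place of a real 8×8 quantization table, and skips color conversion, chroma subsampling, and entropy coding. All function names are made up for illustration.

```python
import math

N = 8  # JPEG transforms the image in 8x8 blocks


def _alpha(k):
    """Normalization factor that makes the DCT basis orthonormal."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


# Orthonormal DCT-II basis matrix: C[u][x]
C = [[_alpha(u) * math.cos((2 * x + 1) * u * math.pi / (2 * N))
      for x in range(N)] for u in range(N)]


def dct2(block):
    """2-D DCT of an 8x8 block, computed as C * block * C^T."""
    tmp = [[sum(C[u][x] * block[x][y] for x in range(N)) for y in range(N)]
           for u in range(N)]
    return [[sum(tmp[u][y] * C[v][y] for y in range(N)) for v in range(N)]
            for u in range(N)]


def idct2(coeffs):
    """Inverse 2-D DCT, computed as C^T * coeffs * C."""
    tmp = [[sum(C[u][x] * coeffs[u][v] for u in range(N)) for v in range(N)]
           for x in range(N)]
    return [[sum(tmp[x][v] * C[v][y] for v in range(N)) for y in range(N)]
            for x in range(N)]


def jpeg_round_trip(block, q):
    """One simulated save and reopen: DCT, quantize, dequantize, IDCT, 8-bit clamp.

    Assumption: every coefficient uses the same step q (real JPEG uses a table).
    """
    coeffs = dct2([[p - 128 for p in row] for row in block])
    quantized = [[round(c / q) for c in row] for row in coeffs]
    pixels = idct2([[k * q for k in row] for row in quantized])
    return [[min(255, max(0, round(p + 128))) for p in row] for row in pixels]


def generations_until_stable(block, q, limit=50):
    """Return the first generation whose save leaves the pixels unchanged.

    Returns None if the block is still changing after `limit` saves.
    """
    for generation in range(1, limit + 1):
        saved = jpeg_round_trip(block, q)
        if saved == block:
            return generation
        block = saved
    return None


if __name__ == "__main__":
    # Arbitrary synthetic block, not real image data.
    start = [[(31 * r + 17 * c) % 256 for c in range(N)] for r in range(N)]
    print(generations_until_stable(start, q=16))
```

If `generations_until_stable` returns a small number, the simulated block has stopped "walking" after that many saves, which is the behavior the test looks for in real files. A real encoder may behave differently because of its quantization tables and color handling.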

If you mask off the 8×8 pixel block containing the lower right corner, files 2 through 100 were identical, meaning that opening, modifying and saving a JPEG image many times does not change anything outside of the 8×8 block in which the change was made.
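A comparison of this kind can be sketched as follows. This is an illustration, not the analysis code used for the test: images are assumed to be plain lists of rows of pixel values, the function names are hypothetical, and the block size is a parameter because an encoder that subsamples chroma may effectively work in 16×16 units.

```python
def differing_blocks(a, b, block=8):
    """Return the set of (block_row, block_col) indices where a and b differ.

    Blocks are anchored at the top-left corner of the image.
    """
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same dimensions")
    blocks = set()
    for r, (row_a, row_b) in enumerate(zip(a, b)):
        for c, (pa, pb) in enumerate(zip(row_a, row_b)):
            if pa != pb:
                blocks.add((r // block, c // block))
    return blocks


def identical_outside_block(a, b, row, col, block=8):
    """True if a and b match everywhere except possibly in the block holding (row, col)."""
    return differing_blocks(a, b, block) <= {(row // block, col // block)}
```

Calling `identical_outside_block(img_n, img_m, height - 1, width - 1)` on two decoded files would check that any differences are confined to the block containing the lower right pixel.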

So the confident assertion of the Fstoppers writer is wrong.

It was interesting that the JPEG file created from the TIFF was slightly different from the first JPEG file created from a JPEG.

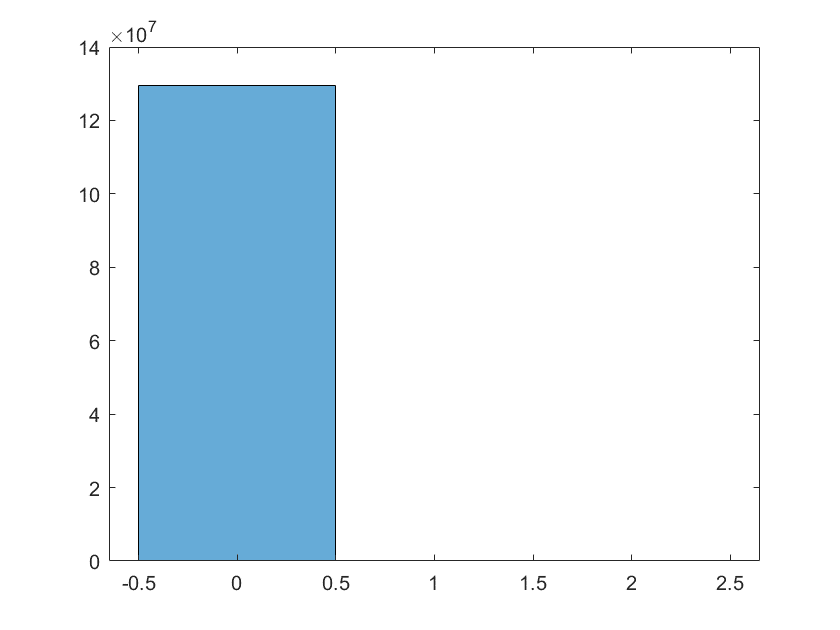

Here’s a histogram of the difference between the file created from the TIFF and the one created from the JPEG (it’s an RGB file, and I’m plotting the difference for all 3 planes, so there are three times as many entries as pixels in the file):

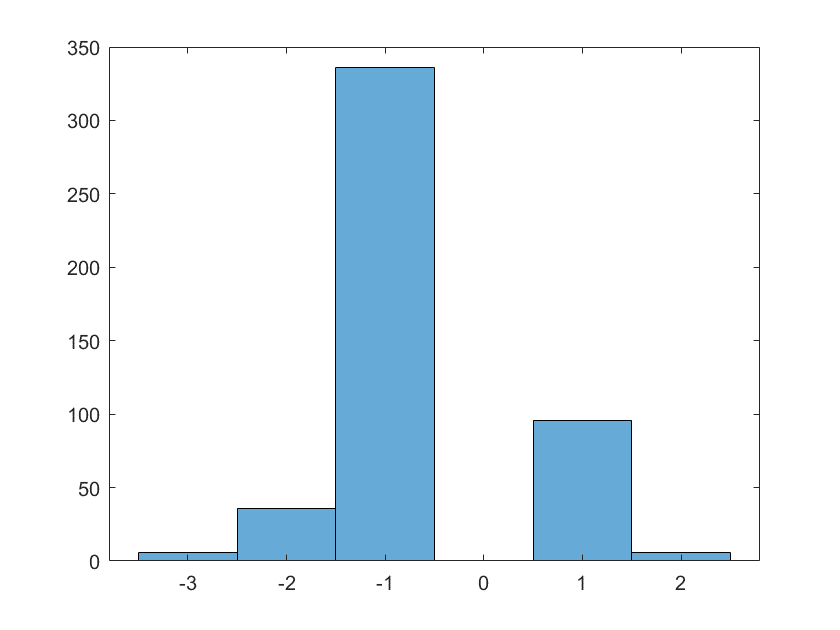

For roughly 130 million data bytes both JPEGs are equal. However, there are some difference bytes that are not zero. We can see them by removing all the zero bytes from the histogram:

There are fewer than 450 bytes that differ by one, and about 150 that differ by two, and a few that differ by 3.
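Exact counts like these can be tabulated directly instead of being read off a plot. The sketch below is an assumption about how such a tally might be done, not the code behind the figures above: it takes the two decoded images as flat `bytes` objects, counts absolute differences, and uses hypothetical function names.

```python
from collections import Counter


def difference_counts(a, b):
    """Count how many byte positions differ by 0, 1, 2, ... (absolute value)."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    return Counter(abs(x - y) for x, y in zip(a, b))


def summarize(a, b):
    """Summarize differences: total bytes, equal bytes, nonzero counts, largest difference."""
    counts = difference_counts(a, b)
    nonzero = {d: n for d, n in sorted(counts.items()) if d != 0}
    return {
        "total": len(a),
        "equal": counts.get(0, 0),
        "nonzero": nonzero,
        "max_abs_diff": max(counts, default=0),
    }
```

Reporting `max_abs_diff` alongside the per-value counts rules out a small number of large differences hiding below the resolution of a histogram.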

Conclusions:

- In Matlab, JPEG recompressions don’t “walk”; they are the same after the first iteration.

- Changes from the first to the second iteration are small.

- The effects of changing one 8×8 block do not affect the recompression accuracy of other 8×8 blocks.

I then created a chain of 4 JPEG images in Photoshop, each one derived from the previous one, and saved them at quality = 9.

The first one was different from the second in 480 pixels.

The rest were identical.

Conclusions:

- In Photoshop, JPEG recompressions don’t “walk”; they are the same after the first iteration.

- Changes from the first to the second iteration are small.

Of course, it is not practical for me to conduct this experiment for all possible JPEG images.

Good test, Jim.

To be fair to the poster, the test he ran was not the same as yours: he created a new JPG every time he saved and used that one for the next save, so he ended up with the 99th version showing terrible artifacts.

I would always go back to the raw to create a new (different) jpg so his test is a bit moot for me and perhaps many others.

The test I ran, when continued, produces identical JPEGs ad infinitum.

Here’s a possible, if a bit far-fetched, real-world situation that I don’t think would be a problem and that the Fstoppers article claims would be a disaster. Let’s say I get a JPEG from somebody to print. I look at it, and I discover a dust spot. I open up the file, fix the dust spot, and save it under the original name. Then the customer calls and tells me to remove a different dust spot. I open up the file, fix the dust spot, and save it under the original name. That sequence repeats itself a few times.

Because the DCT takes place in 8×8 pixel blocks, as long as none of the dust spot edits were in the same block, they would all have been decompressed, changed, and recompressed only once, even though the whole file had been decompressed and recompressed many times.
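Whether a series of edits stays in separate blocks can be checked with a little integer arithmetic. The sketch below is illustrative only: it assumes each edit is described by a hypothetical inclusive bounding box `(top, left, bottom, right)` in pixel coordinates, and that blocks are 8×8 and anchored at the top-left corner of the image.

```python
from collections import Counter


def blocks_touched(top, left, bottom, right, block=8):
    """Return the set of (block_row, block_col) indices covered by a bounding box."""
    return {(br, bc)
            for br in range(top // block, bottom // block + 1)
            for bc in range(left // block, right // block + 1)}


def blocks_edited_repeatedly(edits, block=8):
    """Return the blocks that more than one edit has touched."""
    counts = Counter()
    for box in edits:
        counts.update(blocks_touched(*box, block=block))
    return {b for b, n in counts.items() if n > 1}
```

An empty result from `blocks_edited_repeatedly` means every modified block was changed by only one of the edits, which is the situation described above.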

As long as the same quantization matrix is used, resaving it should be lossless.

Maybe if you alternate between two different quality levels it’ll degrade more.

Exactly so.

My understanding is that going from a low quality jpeg to a higher quality one will just add a bunch of zeros to the matrix, which would then just be lopped back off going back to lower quality.

It depends on the relationship between those quantization tables.

If each coefficient in the low quality table is an integer multiple of the corresponding coefficient in the high quality table, then the behavior is as you’ve described. However, if, for example, a pair of corresponding coefficients is 3 in the high quality table and 4 in the low quality table, re-compression using the high quality table could sometimes make things a little bit worse.
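The arithmetic behind that example can be sketched for a single DCT coefficient. This is a simplified illustration with hypothetical function names: it ignores the rounding and clamping that happen when the image is converted back to 8-bit pixels between saves, and looks only at what re-quantizing with a different step does to values that were already quantized with the low quality step.

```python
import math


def quantize(value, step):
    """Round value/step to the nearest integer (halves round up)."""
    return math.floor(value / step + 0.5)


def resave(value, step):
    """Quantize and dequantize a coefficient with the given step."""
    return quantize(value, step) * step


def high_quality_resave_errors(low_step, high_step, levels=range(-8, 9)):
    """Error introduced by re-saving low-quality coefficient values with high_step.

    Each input value is k * low_step, i.e. exactly representable at low quality.
    """
    errors = []
    for k in levels:
        low_value = k * low_step
        errors.append(resave(low_value, high_step) - low_value)
    return errors
```

With `low_step=4` and `high_step=2` every error is zero, because each multiple of 4 is also a multiple of 2. With `low_step=4` and `high_step=3` some values move by one, for example 4 becomes 3 and 8 becomes 9.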

> “Every pixel in the file is zero.”

All I see is that vertically, every pixel on this plot means 2.6·10⁵ pixels on the original image. All that your graph shows is that there are <1.3·10⁶ pixels with difference 1, <1.3·10⁶ pixels with difference 2, <1.3·10⁵ pixels with difference 3, etc. So JUDGING BY YOUR PLOT, there may be A LOT of pixels with very large differences.

You need a better metric than this plot to convince an observant reader!