This is the 22nd post in a series of tests of the Fujifilm GFX 100 II. You can find all the posts in this series by going to the Categories pane in the right-hand panel and clicking on “GFX 100 II”.

I normally do dark-field histograms first when I test cameras. With the GFX 100 II, I didn’t do that. Big mistake.

When I tested the camera earlier, I found unusually low read noise at ISO 80. I scratched my head, did a bunch of tests, and couldn’t figure out where it was coming from. Today I looked at some dark-field histograms at ISO 80 and ISO 100.

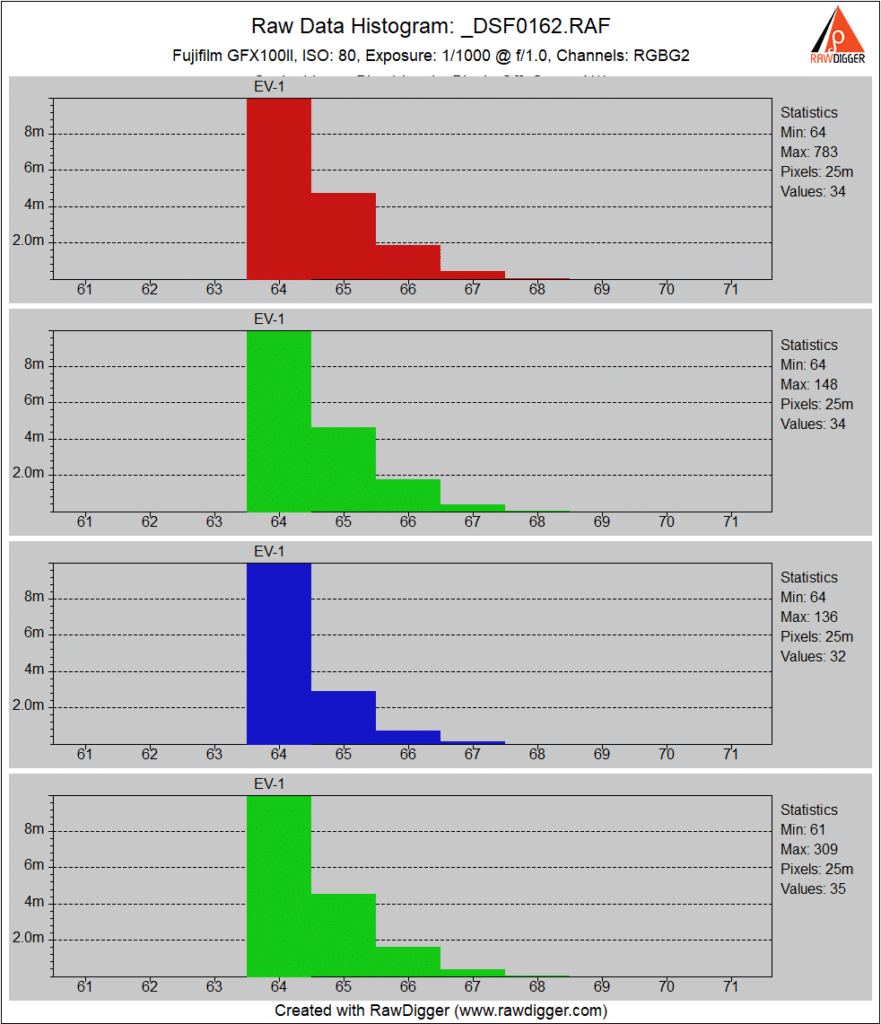

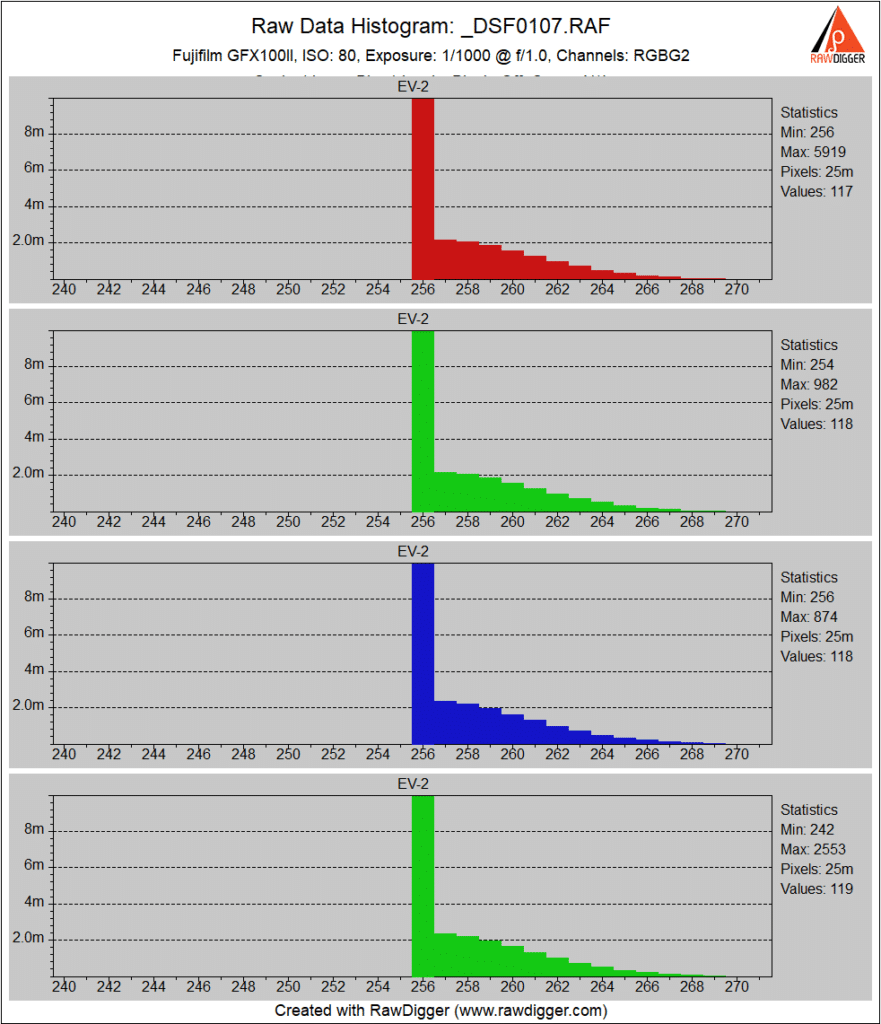

First, in single-shot drive mode, 14-bit precision, with electronic first-curtain shutter (EFCS):

I could kick myself. The answer is obvious. At ISO 80 those folks at Fujifilm have dropped all the data below the nominal black point, slicing off the left half of the histogram and cutting the measured read noise to roughly half of what it would normally be. For shame, Fujifilm. For shame, Jim. I should have figured this out long ago.

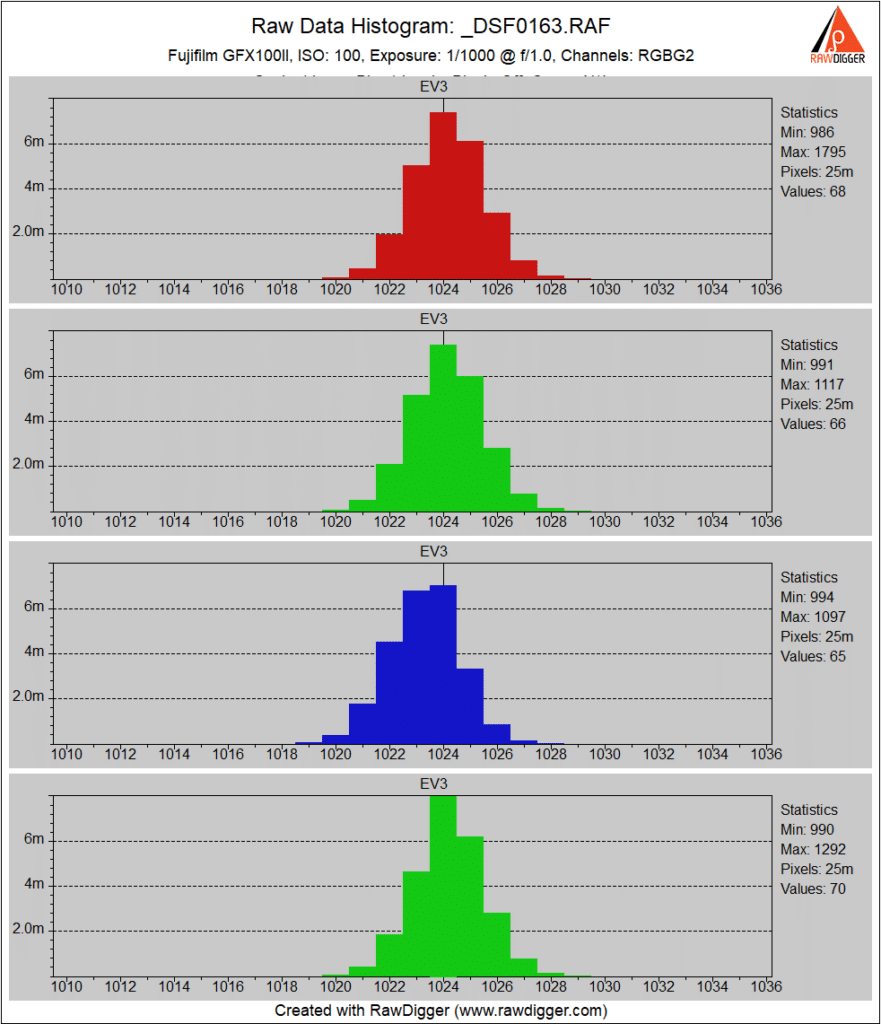

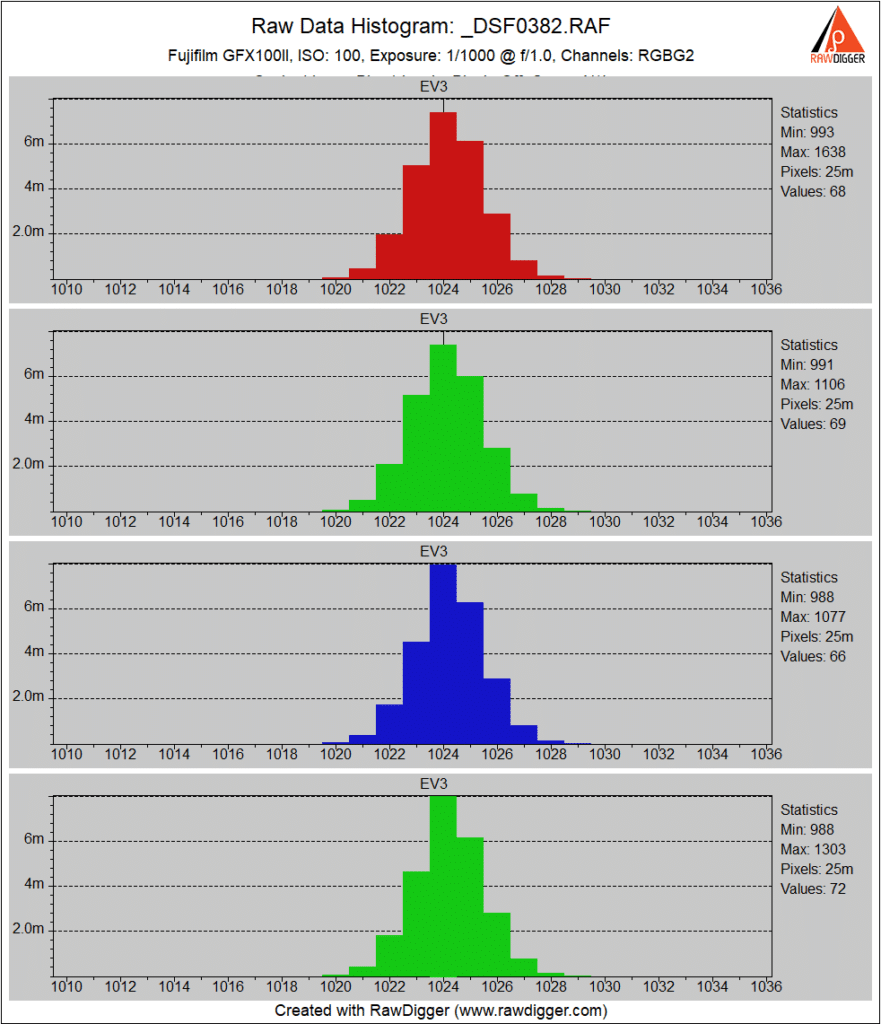

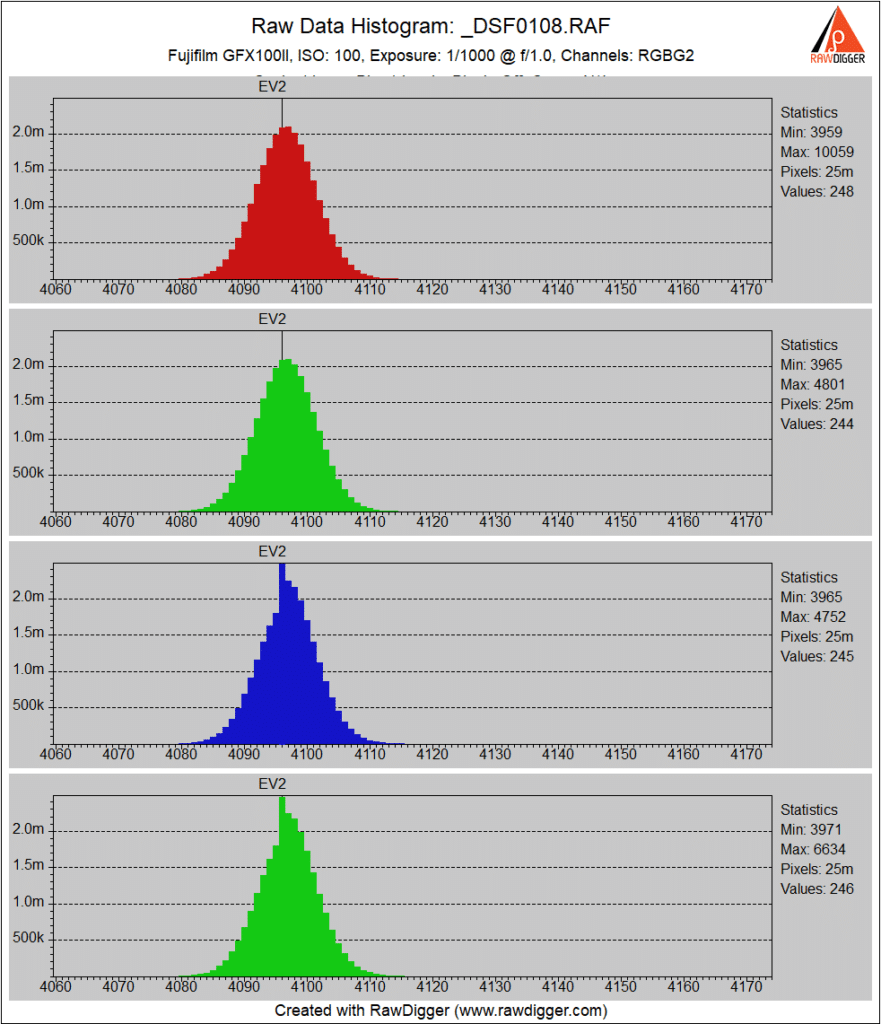

In CL mode, 14-bit precision, with mechanical shutter:

Same thing.

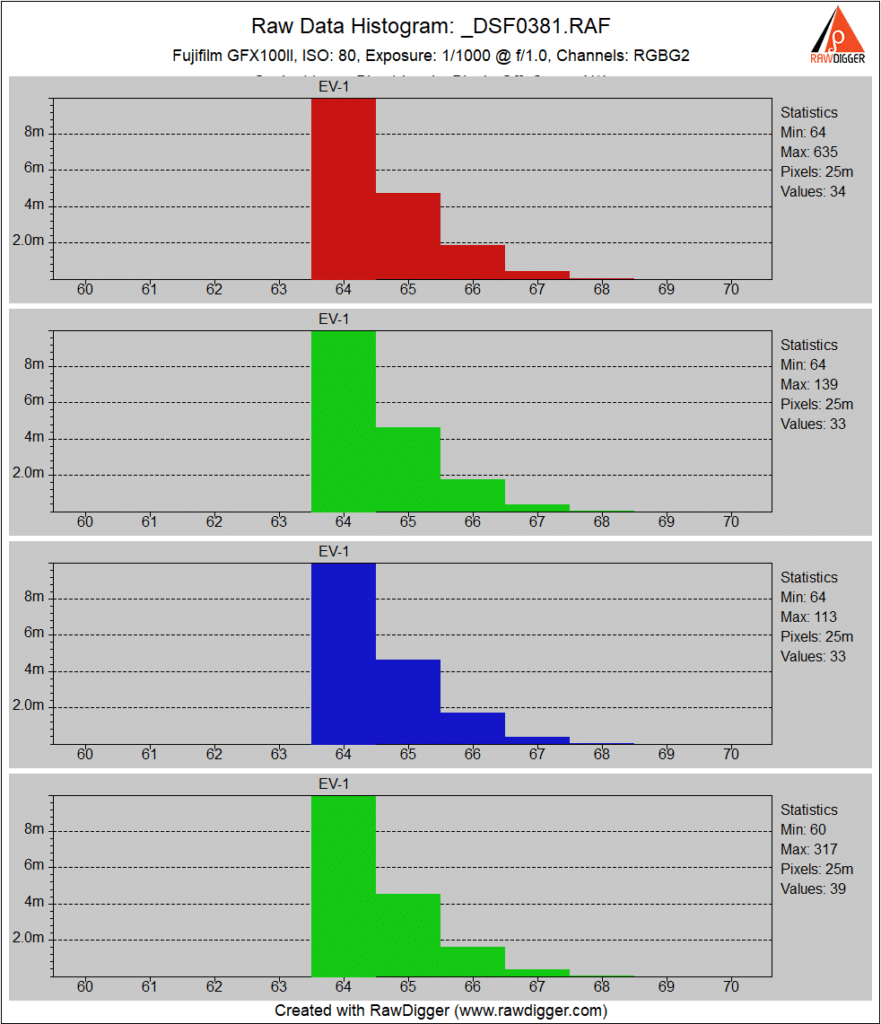

16-bit precision, EFCS:

Same thing, except that here all the values below the nominal black point appear to be set to the black point rather than discarded.

So there isn’t really any less read noise at ISO 80 than you’d expect. It’s all done with mirrors.
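To get a feel for how much clipping at the black point shrinks a standard-deviation measurement, here is a toy simulation. It is only a sketch under assumptions that are mine, not measurements from the camera: ideal Gaussian read noise centered exactly on the black point, no quantization, and made-up values for `black_level` and `sigma`. Under those assumptions, dropping the left half gives about 0.60 of the true standard deviation, and setting the left half to the black point gives about 0.58.

```python
import random
import statistics


def simulate_dark_field(n=200_000, black_level=64.0, sigma=3.0, seed=1):
    """Return (full, dropped, clamped) standard deviations of a simulated dark field.

    black_level and sigma are hypothetical numbers chosen for illustration.
    """
    rng = random.Random(seed)
    samples = [rng.gauss(black_level, sigma) for _ in range(n)]

    # Case 1: values below the black point are discarded (left half sliced off).
    dropped = [x for x in samples if x >= black_level]

    # Case 2: values below the black point are set to the black point.
    clamped = [max(x, black_level) for x in samples]

    return (
        statistics.pstdev(samples),
        statistics.pstdev(dropped),
        statistics.pstdev(clamped),
    )


full, dropped, clamped = simulate_dark_field()
print(f"unclipped sigma:        {full:.2f}")
print(f"left half dropped:      {dropped:.2f}  (ratio {dropped / full:.2f})")
print(f"left half set to black: {clamped:.2f}  (ratio {clamped / full:.2f})")
```

If the black point sits somewhat above or below the true mean of the dark field, the ratios shift accordingly, so a real measurement need not match these numbers.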

Interesting… I would say this amounts to Fuji deceiving its customers…

Dear Jim,

Nice to read your expertise again...

but in this case I don’t understand anything ;-)

In the end: why does the GFX 100 II have this kind of DR boost at ISO 80?

best, Tom (from Berlin)

Beats me. See the next post.

What I don’t understand: shouldn’t there be a sign of black-point clipping in regular shots too? So far I have not seen one. To me this looks very much like the Dieselgate software trick, which only showed up under test conditions.

We ran into this at the limits of a quantitative image analysis system. We would read every pixel in the window, run it through a calibration LUT and generate a calibrated mean. In doing that, we started finding that very dark windows underestimated calibrated data (calibrated by liquid scintillation counting). Very light windows overestimated. The reason was, as you have found, truncation of the actual distribution of pixel values. We were clipping the black values to zero and the bright values to the upper precision limit. Oh yes, and we were also artificially inflating SNR, as you saw with the read noise. I remember one of our customers telling me how impressed he was with our noise levels and the moral dilemma I was in as to whether I should take him deep into this, or just let him be happy. We fixed the problem.

Long story short, the answer was to characterize system response at the limits (not always normally distributed) and generate a characteristic pixel value distribution that was used to modulate the window pixel values. When we applied that process to the near min and near max pixel values, we found that our read values were much better estimates of the actuals. I think we patented the statistical sampling process but this was years ago so I can’t remember exactly.
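The procedure described above is not spelled out in enough detail to reproduce, so the following is not that method. It is a much simpler sketch of the same general idea, recovering the underlying distribution from a clipped one, and it assumes the unclipped pixel values are Gaussian. It fits the mean and sigma from two percentiles that lie above the clip point, where the data are untouched. The function name, the percentile choices, and the simulated numbers are all hypothetical.

```python
import random
from statistics import NormalDist, pstdev, quantiles


def fit_gaussian_above_clip(pixels, pct_lo=75, pct_hi=95):
    """Estimate (mean, sigma) of a Gaussian from two percentiles.

    Both percentiles must fall above the clip point, so that clipping
    has not altered them. Assumes the unclipped data are Gaussian.
    """
    cuts = quantiles(pixels, n=100, method="inclusive")  # cuts[k-1] = k-th percentile
    q_lo, q_hi = cuts[pct_lo - 1], cuts[pct_hi - 1]
    z_lo = NormalDist().inv_cdf(pct_lo / 100)
    z_hi = NormalDist().inv_cdf(pct_hi / 100)
    sigma = (q_hi - q_lo) / (z_hi - z_lo)
    mean = q_lo - z_lo * sigma
    return mean, sigma


# Simulated dark field, clamped at a made-up black level.
rng = random.Random(7)
black_level, true_sigma = 64.0, 3.0
clamped = [max(rng.gauss(black_level, true_sigma), black_level) for _ in range(100_000)]

naive_sigma = pstdev(clamped)                      # what a plain std-dev reports
mean_est, sigma_est = fit_gaussian_above_clip(clamped)
print(f"naive sigma: {naive_sigma:.2f}, fitted sigma: {sigma_est:.2f}, fitted mean: {mean_est:.2f}")
```

The fit only works when the noise really is close to Gaussian and when enough of the histogram survives above the clip point; as noted above, the response near the limits is not always normally distributed.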

Poor Fuji. I doubt they want to go there.

As I understand it, this is the same issue that made Nikon unable to process multiple star images, in comparison to Canon, 15 years ago?