In the old 8-bit world, Lab had a bad reputation for causing posterization when photographers invoked even moderate editing moves. I thought I’d check out conversion errors from sRGB to Lab and back, with double-precision color space calculations, and quantizing to 8 bits per color plane after every conversion.
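For readers who want to try this themselves, here is a minimal sketch of one round trip for a single color. It is not the code behind the results in this post. The D65 white point, the 8-bit Lab encoding (L* scaled to 0..255, a* and b* offset by 128), and all the function names are assumptions made for illustration.

```python
# sRGB (D65) linear-RGB <-> XYZ matrices, rounded to seven places.
M = [(0.4124564, 0.3575761, 0.1804375),
     (0.2126729, 0.7151522, 0.0721750),
     (0.0193339, 0.1191920, 0.9503041)]
M_INV = [(3.2404542, -1.5371385, -0.4985314),
         (-0.9692660, 1.8760108, 0.0415560),
         (0.0556434, -0.2040259, 1.0572252)]
WHITE = [sum(row) for row in M]  # XYZ of sRGB white (assumed Lab reference white)
D = 6 / 29                       # CIE Lab breakpoint constant


def srgb8_to_lab(rgb):
    """8-bit sRGB triple -> floating-point CIELab."""
    enc = [c / 255 for c in rgb]
    lin = [v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4
           for v in enc]
    xyz = [sum(m * v for m, v in zip(row, lin)) for row in M]
    ratios = [x / w for x, w in zip(xyz, WHITE)]
    f = [t ** (1 / 3) if t > D ** 3 else t / (3 * D * D) + 4 / 29
         for t in ratios]
    return (116 * f[1] - 16, 500 * (f[0] - f[1]), 200 * (f[1] - f[2]))


def lab_to_srgb8(lab):
    """Floating-point CIELab -> 8-bit sRGB triple (clipped, then rounded)."""
    L, a, b = lab
    fy = (L + 16) / 116
    f = (fy + a / 500, fy, fy - b / 200)
    xyz = [w * (t ** 3 if t > D else 3 * D * D * (t - 4 / 29))
           for t, w in zip(f, WHITE)]
    lin = [sum(m * v for m, v in zip(row, xyz)) for row in M_INV]
    out = []
    for v in lin:
        v = min(max(v, 0.0), 1.0)  # clip out-of-gamut values
        v = 12.92 * v if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055
        out.append(round(255 * v))
    return tuple(out)


def quantize_lab8(lab):
    """Assumed 8-bit Lab encoding: L* scaled to 0..255, a*/b* offset by 128."""
    def clip(v):
        return min(max(round(v), 0), 255)
    L, a, b = lab
    return (clip(L * 255 / 100), clip(a + 128), clip(b + 128))


def dequantize_lab8(q):
    return (q[0] * 100 / 255, q[1] - 128.0, q[2] - 128.0)


def round_trip(rgb):
    """One iteration: sRGB -> Lab, quantize to 8 bits, -> sRGB, quantize."""
    return lab_to_srgb8(dequantize_lab8(quantize_lab8(srgb8_to_lab(rgb))))


def iterate(rgb, n):
    """Apply n round trips to one 8-bit sRGB color."""
    for _ in range(n):
        rgb = round_trip(rgb)
    return rgb
```

Calling `iterate((10, 200, 30), 5)` runs five round trips on one color; comparing the result with the starting triple shows whether that color drifted.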

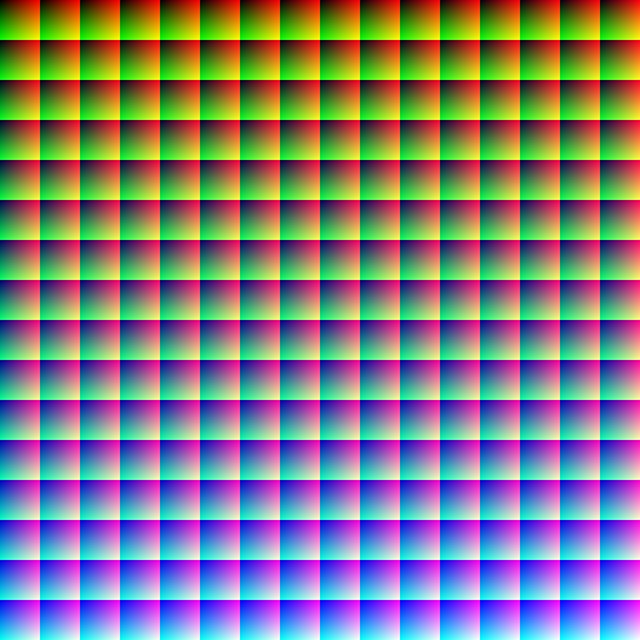

I used this target image, which has all the colors possible in 8-bit sRGB:

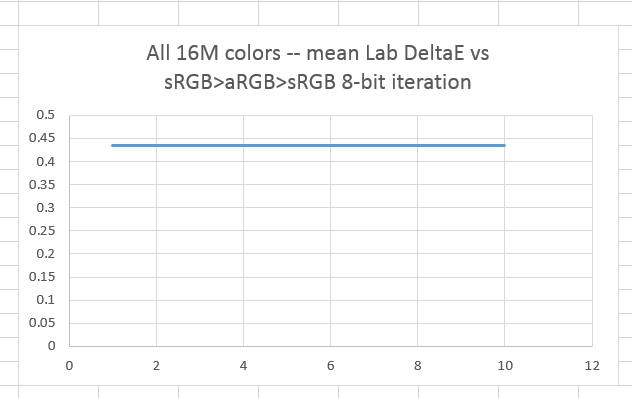
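An image holding every 8-bit sRGB color needs 256³ = 16,777,216 pixels, which fits exactly in a 4096×4096 frame. The sketch below shows one way to assign a unique color to each pixel. The layout (red in the high bits, blue in the low bits) and the name `color_at` are assumptions for illustration; the target above may be arranged differently.

```python
WIDTH = HEIGHT = 4096  # 4096 * 4096 == 256 ** 3


def color_at(x, y, width=WIDTH):
    """Map a pixel position to a unique 8-bit (R, G, B) triple."""
    index = y * width + x          # 0 .. 16,777,215
    return ((index >> 16) & 255,   # red from the top 8 bits
            (index >> 8) & 255,    # green from the middle 8 bits
            index & 255)           # blue from the low 8 bits
```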

It doesn’t take many iterations for things to settle down. One iteration is defined as from sRGB to Lab and back. Here are the CIELab mean errors as a function of number of iterations:

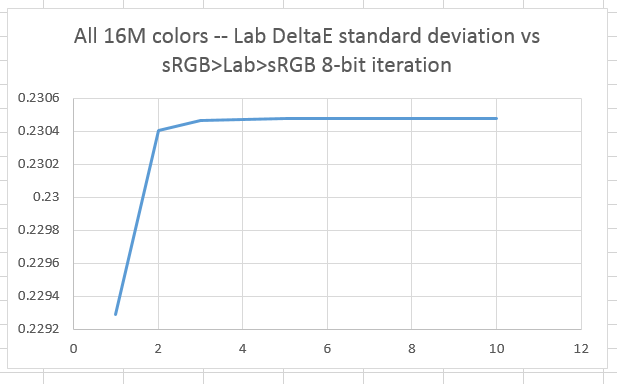

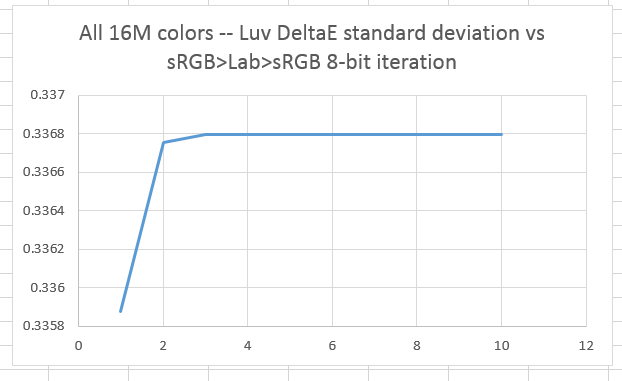
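Per-iteration statistics like these can be computed from the original and round-tripped Lab values. This is a sketch only: it assumes the plain Euclidean (CIE76) Delta E and the population standard deviation, neither of which is stated above, and `error_stats` is a hypothetical name.

```python
import math
import statistics


def delta_e(lab1, lab2):
    """Euclidean distance between two Lab triples (CIE76 Delta E)."""
    return math.dist(lab1, lab2)


def error_stats(reference, converted):
    """Mean, standard deviation, and worst-case Delta E over paired colors."""
    errors = [delta_e(r, c) for r, c in zip(reference, converted)]
    return {
        "mean": statistics.fmean(errors),
        "std": statistics.pstdev(errors),
        "worst": max(errors),
    }
```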

Well, that’s pretty boring. Is nothing changing? Not so. Here are the standard deviations at each iteration:

Using Lab to measure errors of conversions involving Lab is a bit like asking the fox to watch the chicken coop. What if we measure the errors in CIELuv?
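Measuring in CIELuv means converting both the original and the round-tripped colors to L\*u\*v\* and taking the Euclidean distance there. Here is a minimal sketch of that conversion from XYZ. The D65 reference white and the function names are assumptions, not details taken from this post.

```python
import math

D65_WHITE = (0.95047, 1.0, 1.08883)  # assumed reference white


def _uv_prime(xyz):
    """CIE 1976 u', v' chromaticity; returns (0, 0) for pure black."""
    x, y, z = xyz
    denom = x + 15 * y + 3 * z
    if denom == 0:
        return 0.0, 0.0
    return 4 * x / denom, 9 * y / denom


def xyz_to_luv(xyz, white=D65_WHITE):
    """XYZ -> CIE L*u*v* relative to the given white point."""
    yr = xyz[1] / white[1]
    if yr > (6 / 29) ** 3:
        L = 116 * yr ** (1 / 3) - 16
    else:
        L = (29 / 3) ** 3 * yr
    u, v = _uv_prime(xyz)
    un, vn = _uv_prime(white)
    return (L, 13 * L * (u - un), 13 * L * (v - vn))


def delta_e_luv(xyz1, xyz2, white=D65_WHITE):
    """Euclidean distance between two colors in L*u*v*."""
    return math.dist(xyz_to_luv(xyz1, white), xyz_to_luv(xyz2, white))
```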

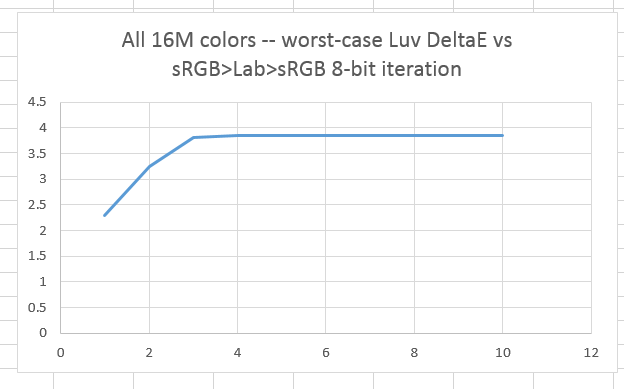

The mean error measured in Luv is almost twice as bad as it is measured in Lab, but the worst-case error is actually better measured in Luv.

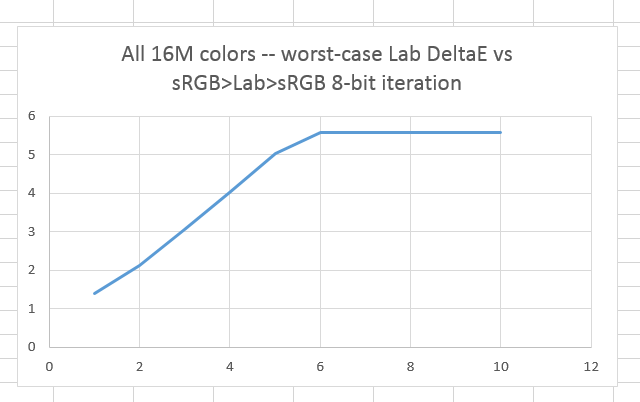

The worst-case Lab DeltaE is better than for sRGB>aRGB>sRGB, but the mean is a lot worse. This shows that 8-bit-per-color-plane images shouldn’t be freely moved in and out of Lab.

Is anyone surprised?

CIELab error images follow, if anyone is interested.

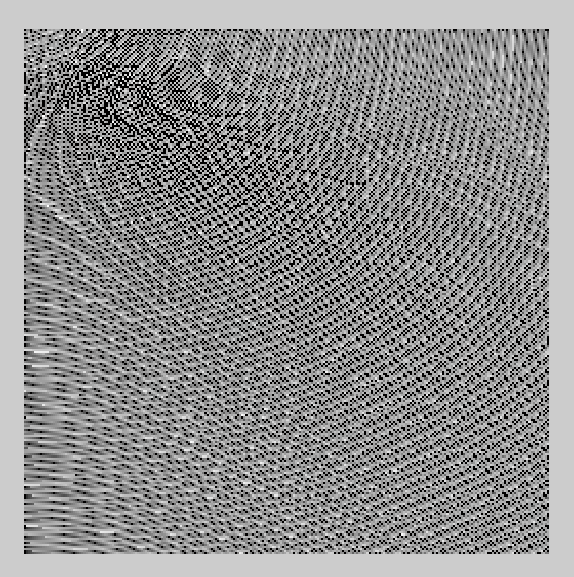

The whole error image, normalized to worst case and gamma corrected:

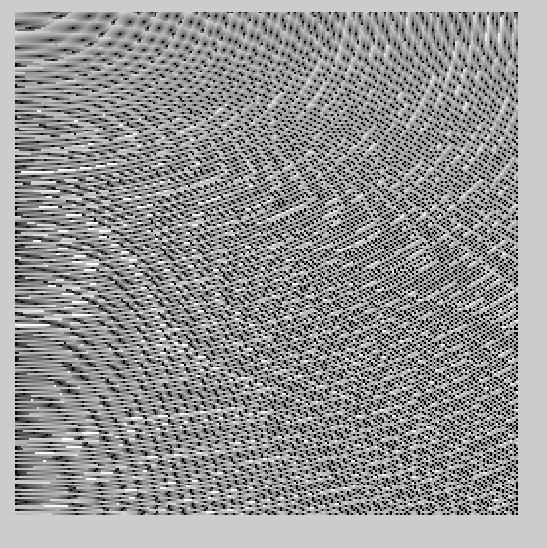
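The display scaling for error images like these might be sketched as follows: divide each Delta E by the worst case, optionally apply an exposure gain of 2^stops, clip, and gamma-encode to 8 bits. The gamma of 2.2, the choice to apply the gain before the gamma encoding, and the name `error_to_display` are all assumptions for illustration.

```python
def error_to_display(errors, stops=0.0, gamma=2.2):
    """Scale a list of Delta E values to 8-bit gray levels for viewing."""
    worst = max(errors)
    if worst == 0:
        return [0] * len(errors)
    gain = 2.0 ** stops  # one stop doubles the linear value
    levels = []
    for e in errors:
        linear = min(e / worst * gain, 1.0)  # normalize, boost, clip
        levels.append(round(255 * linear ** (1 / gamma)))
    return levels
```

With `stops=1`, any error at or above half the worst case clips to full white, which makes the small errors easier to see.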

As above, but with a one-stop exposure boost:

Upper left corner:

Lower right corner:
