I took my 256-megapixel image, filled with uniformly distributed random 16-bit values, brought it into Photoshop, assigned it the sRGB profile, converted it to Adobe RGB (1998), and wrote it out. I went back to the sRGB image, converted it to ProPhoto RGB, and wrote that out.

Then I took the sRGB image on round-trip color space conversions to and from Adobe RGB, ProPhoto RGB, and CIELab, writing out the sRGB results.

In Matlab, I compared the one-way and round-trip Photoshop images with ones that I created by performing the same color space conversions in double-precision floating point, quantizing to 16-bit integer precision after each conversion.
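A minimal sketch of the double-precision side of such a comparison, written in Python rather than Matlab, might look like the following. It is not the code used for this post. The matrices are the commonly published D65 values for sRGB and Adobe RGB (1998), the Adobe RGB gamma is taken as 563/256, no chromatic adaptation is applied, and all function names are hypothetical. It converts random 16-bit sRGB pixels to Adobe RGB and back, quantizing to 16 bits after each conversion, and reports the worst round-trip error in 16-bit code values.

```python
import random

# Linear RGB -> CIE XYZ matrices (D65 white). Commonly published values,
# assumed here; they are not necessarily the numbers used for the post.
M_SRGB = [[0.4124564, 0.3575761, 0.1804375],
          [0.2126729, 0.7151522, 0.0721750],
          [0.0193339, 0.1191920, 0.9503041]]
M_ADOBE = [[0.5767309, 0.1855540, 0.1881852],
           [0.2973769, 0.6273491, 0.0752741],
           [0.0270343, 0.0706872, 0.9911085]]
ADOBE_GAMMA = 563 / 256  # 2.19921875, assumed Adobe RGB (1998) exponent

def inverse3(m):
    """Invert a 3x3 matrix via the adjugate formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
            [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
            [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]

M_SRGB_INV = inverse3(M_SRGB)
M_ADOBE_INV = inverse3(M_ADOBE)

def mul(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[r][k] * v[k] for k in range(3)) for r in range(3)]

def clip(x):
    return min(1.0, max(0.0, x))

def quantize16(v):
    """Round a [0, 1] value to the nearest 16-bit integer code."""
    return int(round(clip(v) * 65535))

def srgb_decode(v):
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4

def srgb_encode(lin):
    return 12.92 * lin if lin <= 0.0031308 else 1.055 * lin ** (1 / 2.4) - 0.055

def srgb_to_adobe(code):
    """16-bit sRGB triple -> 16-bit Adobe RGB triple, quantized at the end."""
    lin = [srgb_decode(c / 65535) for c in code]
    adobe_lin = mul(M_ADOBE_INV, mul(M_SRGB, lin))
    return [quantize16(clip(x) ** (1 / ADOBE_GAMMA)) for x in adobe_lin]

def adobe_to_srgb(code):
    """16-bit Adobe RGB triple -> 16-bit sRGB triple, quantized at the end."""
    lin = [(c / 65535) ** ADOBE_GAMMA for c in code]
    srgb_lin = mul(M_SRGB_INV, mul(M_ADOBE, lin))
    return [quantize16(srgb_encode(clip(x))) for x in srgb_lin]

# A small random sample stands in for the 256-megapixel test image.
random.seed(0)
pixels = [[random.randrange(65536) for _ in range(3)] for _ in range(10000)]
worst = max(abs(a - b)
            for p in pixels
            for a, b in zip(p, adobe_to_srgb(srgb_to_adobe(p))))
print("worst round-trip error (16-bit codes):", worst)
```

Because the arithmetic is in double precision, essentially all of the error in this sketch comes from the two 16-bit quantization steps, which gives a floor against which a faster, lower-precision converter can be judged.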

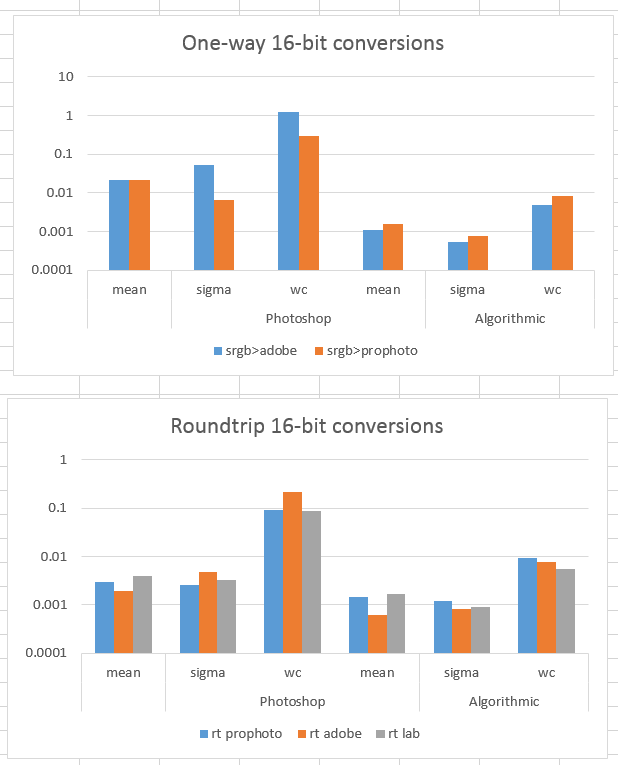

Here are the results, with the vertical axis units being CIELab DeltaE:

You can see that the conversions that I did myself are more accurate. For the worst-case round-trip conversions, they are about an order of magnitude more accurate. For the one-way conversions, they are relatively more accurate. The striking Photoshop worst-case error in the one-way conversion to Adobe RGB makes me think that either Adobe or I have a small error in the nonlinearity of that space.
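For readers who want to reproduce an error measure like this, here is a hedged Python sketch of a CIELab DeltaE calculation. It assumes the simple CIE 1976 formula (Euclidean distance in Lab) and a D50 reference white; the post does not say which DeltaE variant or white point was used, and the function names are hypothetical.

```python
import math

# Assumed reference white: D50, as used by the ICC profile connection space.
D50_WHITE = (0.96422, 1.0, 0.82521)

def xyz_to_lab(xyz, white=D50_WHITE):
    """Convert CIE XYZ to CIELab relative to the given white point."""
    eps = 216 / 24389     # (6/29)^3, the linear/cube-root threshold
    kappa = 24389 / 27    # slope constant of the linear segment

    def f(t):
        return t ** (1 / 3) if t > eps else (kappa * t + 16) / 116

    fx, fy, fz = (f(c / w) for c, w in zip(xyz, white))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))

def delta_e76(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance in Lab."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(lab1, lab2)))

print(xyz_to_lab(D50_WHITE))                 # (100.0, 0.0, 0.0)
print(delta_e76((50, 0, 0), (50, 3, 4)))     # 5.0
```

To score a conversion, each pixel of the test result and of the reference would be taken to Lab and the per-pixel DeltaE values summarized, for example by their mean and maximum.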

Photoshop did its conversions faster than I did mine. I suspect that they’re not using double precision floating point. In fact, from the size of the errors, I’d be surprised to find that they’re even using single precision floating point.

Except for the one-way conversion to Adobe RGB, even the Photoshop worst-case errors are not bad enough to scare me off from doing working color space conversions whenever I think a different color space would help me out. Still, it would be nice if Adobe offered a high-accuracy mode for color space conversions, and let the user decide when she wants speed and when she wants accuracy.
