I saw this pronouncement on DPR today:

The smallest signal we can distinguish from the noise floor is equal to the noise. Signal to noise ratio (SNR) = 1.

You see people saying that, or things very much like that, all the time. In some circles, it’s conventional wisdom.

There’s only one problem: it isn’t even close to being true.

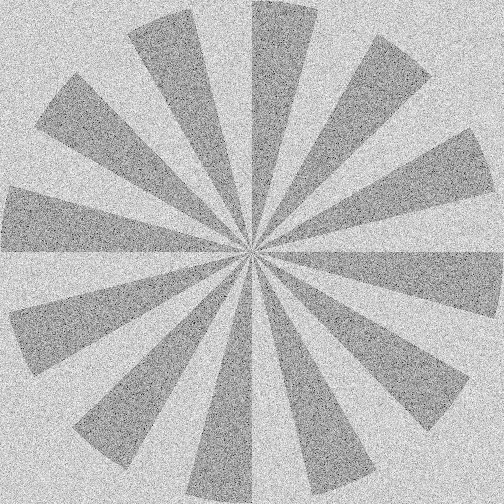

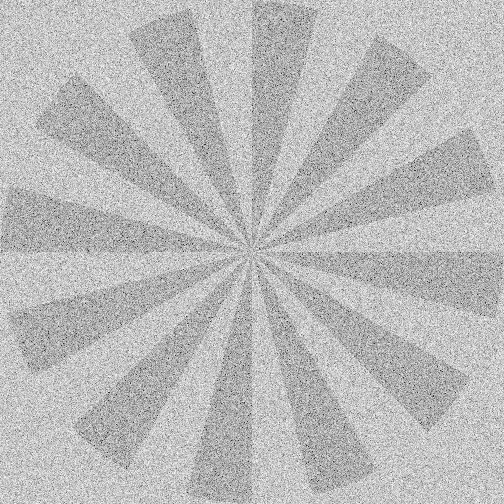

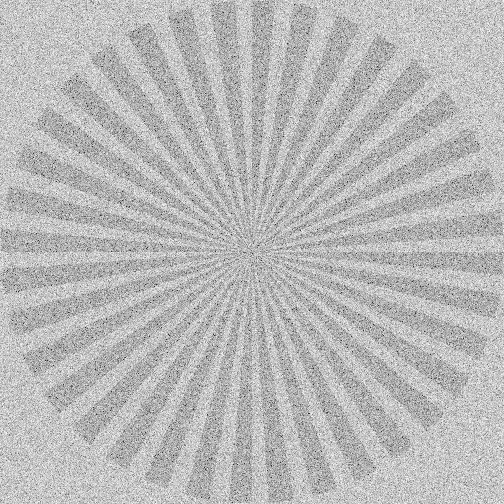

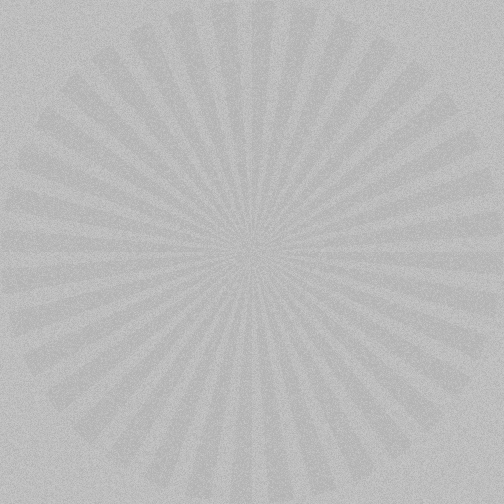

I’ve been meaning to post a demonstration for a while, and I spent the better part of half an hour coding up a program to make the images that I’m going to show you.

If you want to see the star better in the bottom image, try moving the image up and down with the scroll wheel on your mouse.

The noise is Gaussian, clipped at plus and minus three sigma. The mixing was done in linear space, and the images were encoded for the web at gamma 2.2.
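For anyone who wants to try this at home, here is a minimal sketch of that recipe in Python with NumPy. It is not the program used for the images in this post. The function names, the image size, the mid-gray mean, the noise sigma, and above all the SNR definition (step between bright and dark spokes divided by the noise sigma) are assumptions made for illustration.

```python
import numpy as np

def siemens_star(size=256, spokes=12):
    """Binary Siemens star: 1.0 on bright spokes, 0.0 on dark ones."""
    # Pixel-center coordinates measured from the middle of the image.
    y, x = np.mgrid[0:size, 0:size] - (size - 1) / 2.0
    return (np.sin(spokes * np.arctan2(y, x)) > 0).astype(np.float64)

def mix_linear(snr, size=256, spokes=12, sigma=0.1, mean=0.5, seed=0):
    """Add the star to clipped Gaussian noise in linear space."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, sigma, size=(size, size))
    # Clip the noise at plus and minus three sigma, as described above.
    noise = np.clip(noise, -3.0 * sigma, 3.0 * sigma)
    # ASSUMPTION: SNR = (bright level - dark level) / sigma.
    signal = snr * sigma * (siemens_star(size, spokes) - 0.5)
    return np.clip(mean + signal + noise, 0.0, 1.0)

def encode_gamma(linear, gamma=2.2):
    """Encode linear values in [0, 1] to 8 bits with a pure power-law gamma."""
    return np.round(255.0 * linear ** (1.0 / gamma)).astype(np.uint8)

image = encode_gamma(mix_linear(snr=0.5))
```

Changing `snr` and `spokes` gives the kind of series shown here, though the exact look will depend on the assumed mean and sigma.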

You’ll notice that the parts of the star that are further away from the center are easier to distinguish when the noise gets high. That’s because there is more area for your eye to average over.

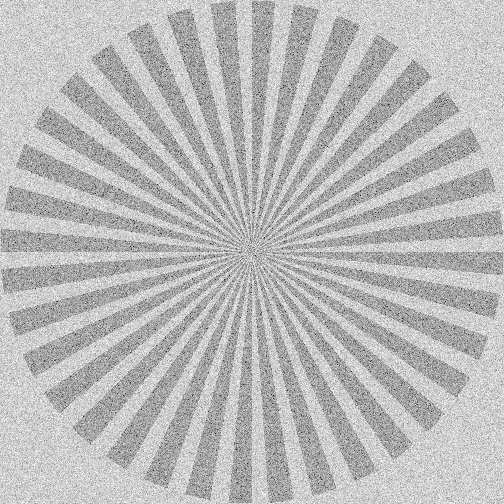

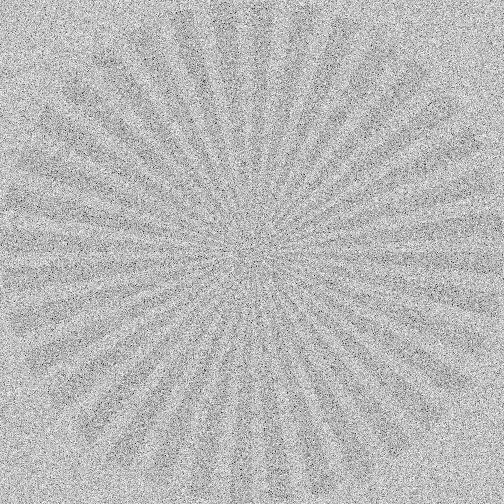

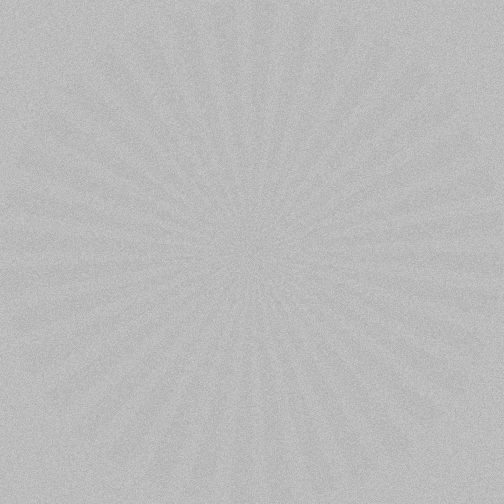

If we look at images with more spokes to the star, this is easier to see:

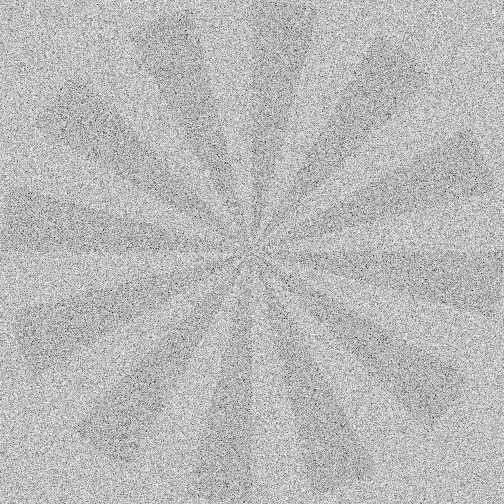

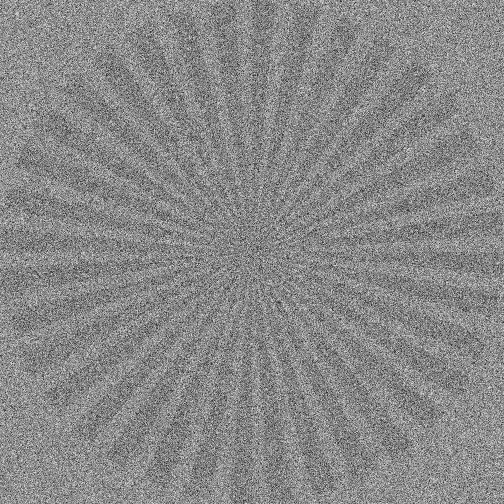

A reader asked what happens if we average 24 frames with the above parameters. Here is the result:

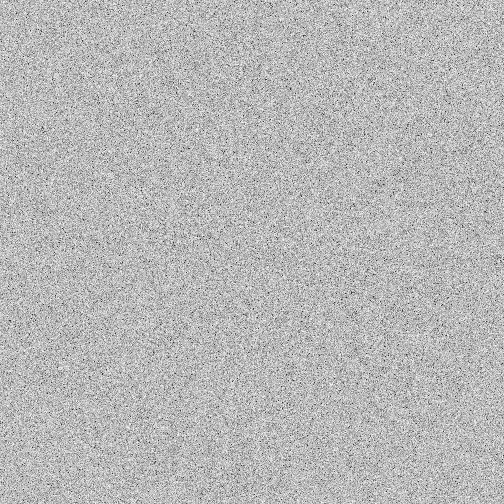
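The reason averaging helps is that independent noise averages down as the square root of the number of frames, while the signal stays put. Here is a quick numerical check, a sketch only: the signal level of 0.2 sigma, the frame size, and the unclipped noise are assumptions chosen for illustration, not the parameters of the image above.

```python
import numpy as np

rng = np.random.default_rng(1)
sigma, n_frames, shape = 1.0, 24, (200, 200)
signal = 0.2 * sigma  # ASSUMPTION: illustrative constant signal level

# Each frame is the same signal plus independent Gaussian noise.
frames = signal + rng.normal(0.0, sigma, size=(n_frames, *shape))

single_snr = signal / frames[0].std()
averaged_snr = signal / frames.mean(axis=0).std()
gain = averaged_snr / single_snr

print(f"measured gain: {gain:.2f}, sqrt({n_frames}) = {np.sqrt(n_frames):.2f}")
```

The measured gain should land close to the square root of 24, which is about 4.9.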

If we take the above image and tighten up on the black and white points, we get this:

For large scale detail, the SNR doesn’t have to be as high.

The question is whether you can, say, read text of a given size at a given SNR.

You are right on. With the Siemens Star stimulus, you can see the effect of raising the spatial frequency by looking closer to the center.

I added another set of images that illustrates that better, with three times as many spokes.

It definitely makes it easier to see the point of extinction of the detail at the higher SNRs.

I am thoroughly convinced Jim needs to write a book of his wisdoms/tests for those of us who are even the slightest bit nerdy. Great stuff, Jim!

This is the same as audio. One would think that with 16 bits of resolution you can only get 96 dB of dynamic range, but with dithering of the last bit you can get at least another 12 dB. In fact, with noise shaping you can extend it further for low-frequency sounds, as you have more sample points to play with (much like the wider spokes of the Siemens star). This is also quite common in the video world, where cameras often claim a dynamic range higher than the bit depth of the sensor, because the same effect you have demonstrated above allows more values of grey to be filmed.
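The dithering effect is easy to see in a toy example. The sketch below quantizes a constant level of 0.3 of a step, with and without dither, and then averages. The level, the sample count, and the choice of triangular (TPDF) dither are assumptions for illustration; the comment above does not say which kind of dither it has in mind.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 100_000
level = 0.3  # constant input, in units of one quantizer step (LSB)

# Without dither, every sample rounds to 0 and the signal is lost.
plain = np.round(np.full(n, level))

# ASSUMPTION: TPDF dither, the sum of two uniform variables (+/- 1 LSB peak).
dither = rng.uniform(-0.5, 0.5, n) + rng.uniform(-0.5, 0.5, n)
dithered = np.round(level + dither)

plain_mean = plain.mean()
dithered_mean = dithered.mean()
print(f"mean without dither: {plain_mean:.3f}, with dither: {dithered_mean:.3f}")
```

Without dither the average is stuck at zero. With dither, each sample is noisier, but the average recovers a level well below one quantizer step.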

It would be nice to see the improvement from temporal noise reduction applied to at least 24 frames of the SNR 0.2 image.

I added that and a couple more images to the bottom of the post. Thanks for the idea.

Just a comment from a (bio-)statistician: SNR is of course related to the r² metric (proportion of variance explained) in a regression. If we stopped at SNR=1 (r²=0.5) biomedical research would pretty much stop overnight 🙂
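For readers who want the arithmetic behind that parenthesis, here is one way to get there. It assumes the observation is signal plus uncorrelated noise, and that SNR is taken as a ratio of variances; neither assumption is stated in the comment.

$$
r^2 = \frac{\operatorname{Var}(s)}{\operatorname{Var}(s) + \operatorname{Var}(n)} = \frac{\mathrm{SNR}}{1 + \mathrm{SNR}}, \qquad \mathrm{SNR} = \frac{\operatorname{Var}(s)}{\operatorname{Var}(n)}
$$

Setting SNR = 1 gives r² = 1/2. If SNR is instead read as a ratio of standard deviations, the expression becomes SNR²/(1 + SNR²), which is also 1/2 at SNR = 1.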

Great post.

The demonstration of the SNR 0.1 image after averaging and contrast enhancement is impressive.

I was thinking about this a few days ago, and now I see your article.

Usually the electronic dynamic range is calculated with SNR=1, because many people consider that you cannot distinguish the source from the noise once the SNR falls below one.

But I suspected that you actually can, because I remembered what I had read in this 2007 article:

http://theory.uchicago.edu/~ejm/pix/20d/tests/noise/#patternnoise

“Because the human eye is adapted to perceive patterns, this pattern or banding noise can be visually more apparent than white noise, even if it comprises a smaller contribution to the overall noise”

So the ability of our brain to detect shapes lets us still see images when SNR<1.

You can clearly see this in your test.

What the pronouncement implies is detectability over black.

In other words, the smallest “detectable” signal.

If your test had used a black background and a star with SNR < 1 over that black, I think the results would be different.

Are you saying to clip the noise at the mean and at plus three sigma, instead of at plus and minus three sigma? If so, how would you expect the result to change?