In yesterday’s post, I downsampled images successively, by a factor of two at each step, hoping to get some averaging along the way. Working with the images today, I could see I wasn’t getting the desired effect.

Then it hit me.

I was doing exactly the wrong thing. Downsampling by a factor of two each time meant that every pixel at the target resolution landed exactly on a pixel at the source resolution. Since I was using bilinear interpolation, that source pixel got all the weight, so I’d just get it back unchanged. I might as well have been using nearest neighbor!
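A small 1-D illustration of that trap, written as a Python sketch rather than the Matlab the post used. It assumes the grids are aligned so that output sample `i` sits exactly at source coordinate `i * factor`, as described above; the function name `lerp_resample` is hypothetical. Libraries that map pixel centers instead of corners place the taps differently, so this shows only the aligned case.

```python
def lerp_resample(src, factor):
    """Resample a 1-D list by linear interpolation with an integer `factor`,
    assuming output sample i sits at source coordinate i * factor."""
    n_out = (len(src) - 1) // factor + 1
    out = []
    for i in range(n_out):
        x = i * factor                   # target pixel position in source coordinates
        x0 = int(x)                      # left neighbor
        x1 = min(x0 + 1, len(src) - 1)   # right neighbor, clamped at the edge
        t = x - x0                       # fractional offset: always 0 on aligned grids
        out.append((1 - t) * src[x0] + t * src[x1])
    return out

# An alternating signal whose average is 5 ...
signal = [0, 10, 0, 10, 0, 10, 0, 10, 0]
# ... comes back as pure decimation (signal[::2]), with no averaging at all.
print(lerp_resample(signal, 2))  # prints [0, 0, 0, 0, 0]
```

Because every weight `t` is zero, the interpolation never mixes neighbors, which is exactly nearest-neighbor sampling on these positions.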

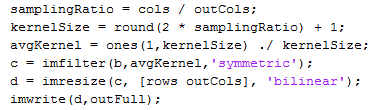

Rather than figure out some tricky way to downsample in stages, I applied an averaging filter along the time dimension, then downsampled in a single step.

Much better. Faster, too. Matlab is pretty darned swift at convolution.
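The post doesn’t show its Matlab code, so here is a minimal Python sketch of the same idea under stated assumptions: the image is a list of rows where row index `t` is the time dimension, and the averaging filter is a box kernel exactly as wide as the downsampling factor (the post doesn’t give the kernel width). The helper names `box_filter`, `average_then_decimate`, and `downsample_time_axis` are hypothetical.

```python
def box_filter(samples, width):
    """Moving average over `width` consecutive samples
    ('valid' convolution with a box kernel of weights 1/width)."""
    return [sum(samples[i:i + width]) / width
            for i in range(len(samples) - width + 1)]

def average_then_decimate(samples, factor):
    """Low-pass with a box filter as wide as the factor,
    then keep every factor-th filtered sample in one step."""
    return box_filter(samples, factor)[::factor]

def downsample_time_axis(image, factor):
    """Apply average_then_decimate down each column of `image`,
    where image[t][j] is time step t and column j."""
    columns = zip(*image)  # transpose so each column is one time series
    reduced = [average_then_decimate(list(col), factor) for col in columns]
    return [list(row) for row in zip(*reduced)]  # transpose back to rows

signal = [0, 10, 0, 10, 0, 10, 0, 10, 0]
print(average_then_decimate(signal, 2))  # prints [5.0, 5.0, 5.0, 5.0]
```

The same alternating signal that point sampling collapsed to zeros now comes out at its true average of 5. Computing the full filtered signal and then discarding samples wastes some work, but it keeps the filter and the decimation as two separate, easy-to-check steps.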

Bilinear downsampling just picks points on the surface corresponding to the new pixel position? Huh. I always thought it took the average value of the surface that falls under the area of the new pixel.

It interpolates linearly in one direction (along each of the two neighboring rows), then interpolates linearly between those two results in the other direction. Doing the directions in either order gives the same answer.
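A minimal Python sketch of bilinear interpolation on a single unit cell, following the standard formulation in the linked article. The corner naming (`f00` at (0, 0), `f10` at (1, 0), `f01` at (0, 1), `f11` at (1, 1)) and the function names are assumptions for illustration.

```python
def lerp(a, b, t):
    """Linear interpolation: returns a at t = 0 and b at t = 1."""
    return a + (b - a) * t

def bilinear(f00, f10, f01, f11, x, y):
    """Interpolate inside the unit cell, with 0 <= x, y <= 1."""
    bottom = lerp(f00, f10, x)   # along x on the y = 0 edge
    top = lerp(f01, f11, x)      # along x on the y = 1 edge
    return lerp(bottom, top, y)  # then along y between the two results
```

At the cell center, `bilinear(0, 1, 2, 3, 0.5, 0.5)` returns 1.5, the mean of the four corners; anywhere else the weights favor the nearer corners. It is a point sample of the interpolated surface, not an average over the area of a new pixel.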

http://en.wikipedia.org/wiki/Bilinear_interpolation

Note that the interpolated surface isn’t actually linear in position: it has a cross term in x and y. However, since I was only resampling in one direction, it reduced to ordinary linear interpolation, which is guaranteed to pass through the points at each end of the line segment.
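Expanding the bilinear weights on a unit cell (same corner naming as in the linked article’s unit-square form) makes both points explicit:

$$
\begin{aligned}
f(x,y) &= f_{00}(1-x)(1-y) + f_{10}\,x(1-y) + f_{01}(1-x)\,y + f_{11}\,xy \\
       &= f_{00} + (f_{10}-f_{00})\,x + (f_{01}-f_{00})\,y + (f_{00}-f_{10}-f_{01}+f_{11})\,xy
\end{aligned}
$$

The $xy$ term is what makes the surface non-planar. Along a single direction, say $y = 0$, it drops out and leaves $f(x,0) = (1-x)\,f_{00} + x\,f_{10}$, a straight line through $f_{00}$ at $x = 0$ and $f_{10}$ at $x = 1$. For a fixed position $(x, y)$, the result is still a weighted sum of the four sample values.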

Jim