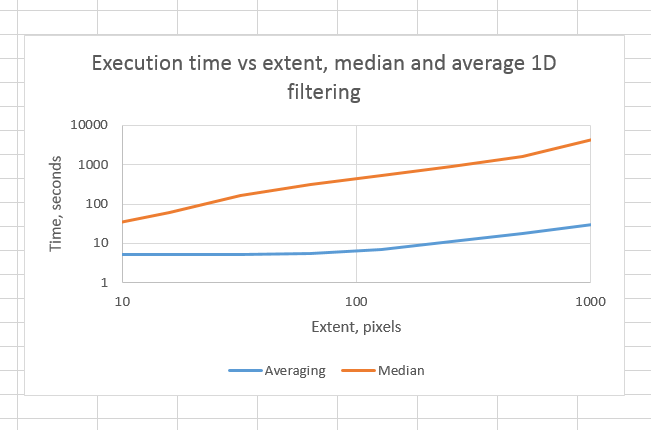

Median filtering is computationally intensive at large extents, and Matlab does a poor job of parallelizing it. Here's a graph of some timings for one-dimensional filtering of a 6000×56000-pixel image, using both median filtering and averaging with a block filter the same size as the median filter's extent:
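The cost difference comes from how the two filters scale with extent. Here is a minimal pure-Python sketch of one-dimensional filtering along a single row. It is not the Matlab code behind the timings above. The function names, the odd window width `k`, and the replicate-the-end-values edge handling are all illustrative assumptions. A block (box) filter can be computed with a running sum, so its per-pixel work doesn't grow with `k`, while this naive median filter sorts a fresh `k`-sample window for every output pixel.

```python
import random
import statistics
import time
from typing import List, Sequence


def _pad_replicate(row: Sequence[float], half: int) -> List[float]:
    # Assumed edge handling: repeat the end samples so every output pixel sees a full window.
    return [row[0]] * half + list(row) + [row[-1]] * half


def box_filter_1d(row: Sequence[float], k: int) -> List[float]:
    """Centered moving average of odd width k, using a running sum."""
    if k % 2 == 0:
        raise ValueError("k must be odd")
    p = _pad_replicate(row, k // 2)
    window_sum = sum(p[:k])
    out = [window_sum / k]
    for i in range(k, len(p)):
        # Slide the window one sample: add the newest, drop the oldest.
        window_sum += p[i] - p[i - k]
        out.append(window_sum / k)
    return out


def median_filter_1d(row: Sequence[float], k: int) -> List[float]:
    """Centered median of odd width k; each window is sorted from scratch."""
    if k % 2 == 0:
        raise ValueError("k must be odd")
    p = _pad_replicate(row, k // 2)
    return [statistics.median(p[i:i + k]) for i in range(len(row))]


if __name__ == "__main__":
    row = [random.random() for _ in range(5000)]
    for k in (11, 101, 501):
        t0 = time.perf_counter()
        median_filter_1d(row, k)
        t_med = time.perf_counter() - t0
        t0 = time.perf_counter()
        box_filter_1d(row, k)
        t_box = time.perf_counter() - t0
        print(f"k={k:4d}  median {t_med:.3f} s  box {t_box:.3f} s")
```

Production median filters use smarter running-median algorithms than sorting every window, but the basic asymmetry in how cost grows with `k` is the point of the sketch.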

I did a series of analyses to see which was better. Going in, I thought median filtering was more appropriate, because it tends to preserve edges and is good at rejecting outliers entirely. I was right about rejecting outliers, but it turns out that preserving edges is not what I want. One source of edges in the time dimension is the several-second recycling time of the Betterlight back, and I want to reject those edges.
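To make the edge and outlier behavior concrete, here is a small sketch on two synthetic one-dimensional signals: a step standing in for an abrupt edge in the time direction, and a single-sample spike standing in for an outlier. The signals, the window width of 3, the helper names, and the replicate-padding edge handling are made up for illustration.

```python
import statistics
from typing import List, Sequence


def _pad(row: Sequence[float], half: int) -> List[float]:
    # Replicate the end samples so every output position sees a full window.
    return [row[0]] * half + list(row) + [row[-1]] * half


def median_1d(row: Sequence[float], k: int) -> List[float]:
    # k is assumed odd so the window is centered on each sample.
    p = _pad(row, k // 2)
    return [statistics.median(p[i:i + k]) for i in range(len(row))]


def box_1d(row: Sequence[float], k: int) -> List[float]:
    # Plain block average over the same centered window.
    p = _pad(row, k // 2)
    return [sum(p[i:i + k]) / k for i in range(len(row))]


step = [0] * 5 + [10] * 5        # an abrupt edge in the time direction
spike = [1] * 4 + [9] + [1] * 4  # one outlier sample

print("step  median:", median_1d(step, 3))                     # edge kept exactly where it was
print("step  box:   ", [round(v, 2) for v in box_1d(step, 3)])   # edge becomes a ramp
print("spike median:", median_1d(spike, 3))                    # outlier removed entirely
print("spike box:   ", [round(v, 2) for v in box_1d(spike, 3)])  # outlier diluted over 3 samples
```

The median reproduces the step unchanged and erases the spike; the average turns the step into a ramp and spreads the spike out at reduced height. That is why the median is the right tool for outliers and the wrong one when the goal is to smooth edges away.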

Another source of edges is the artifacts that appear around sudden luminance transitions. I'm not sure what causes them, but I suspect chromatic aberration in the lens.

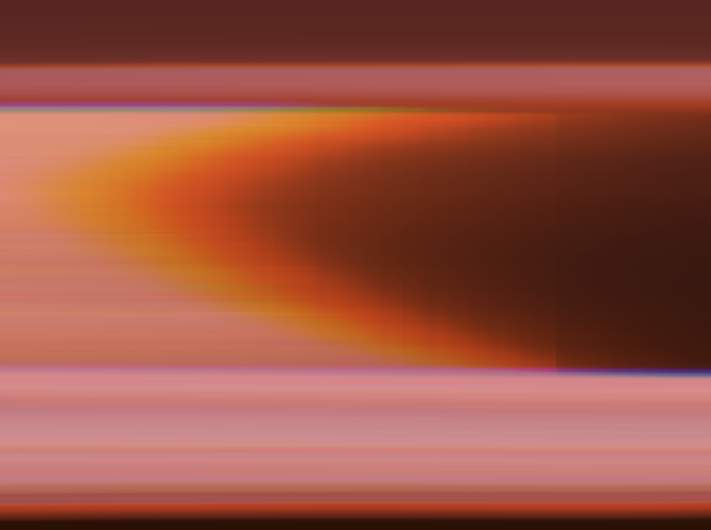

Here’s one with median filtering plus averaging:

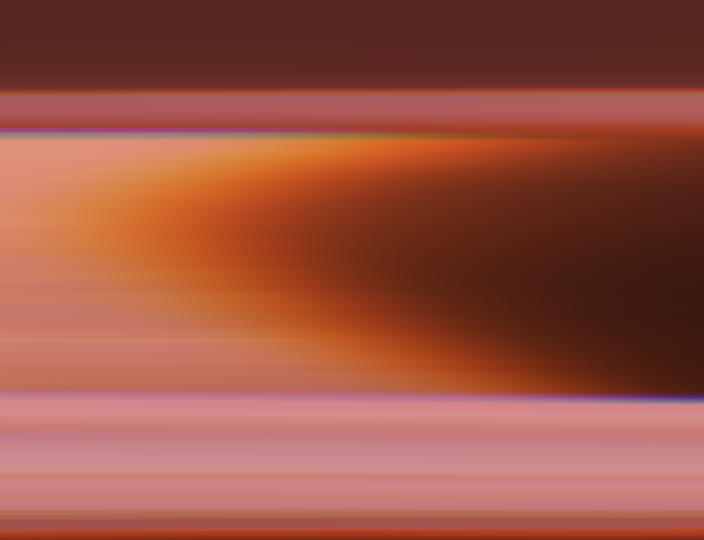

And with averaging only:

Averaging is better. It's unusual for the computationally cheapest solution to also be the best, but here it is.

Note that averaging in the time direction (left to right in these pictures) does nothing for the blue artifact that runs in that direction. I’ll have to clean that up by hand later.
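The reason is that a filter applied along one direction can only smooth variation along that direction. A minimal sketch, assuming the image is a list of rows with time running along each row, and using a hypothetical helper `box_filter_rows` with an illustrative box width of 3: a streak that is constant along a row comes through unchanged, while a feature that crosses the rows gets smeared.

```python
from typing import List, Sequence


def box_filter_rows(img: Sequence[Sequence[float]], k: int) -> List[List[float]]:
    """Average each row with an odd-width box of k samples (replicate edge padding)."""
    half = k // 2
    out = []
    for row in img:
        p = [row[0]] * half + list(row) + [row[-1]] * half
        out.append([sum(p[i:i + k]) / k for i in range(len(row))])
    return out


# A streak running along the filter (time) direction: constant across row 2.
streak = [[0.0] * 7 for _ in range(5)]
streak[2] = [5.0] * 7

# A feature crossing the filter direction: one bright column.
column = [[0.0, 0.0, 0.0, 6.0, 0.0, 0.0, 0.0] for _ in range(5)]

print(box_filter_rows(streak, 3)[2])  # → [5.0, 5.0, 5.0, 5.0, 5.0, 5.0, 5.0]
print(box_filter_rows(column, 3)[0])  # → [0.0, 0.0, 2.0, 2.0, 2.0, 0.0, 0.0]
```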
