This is the 30th in a series of posts on color reproduction. The series starts here.

In some of my testing, I’ve been varying the intensity of the Westcott LED panels and expecting their spectrum not to change materially. Is that a good assumption? I devised a fairly extreme test.

The camera was the Sony a7RII, set in aperture-priority mode so that it would vary the exposure as I varied the light level. The lens was the Sony 90mm f/2.8 FE macro. The lighting was two Westcott LED panels on full, with the color temperature set to 5000K. I made seven exposures. The first exposure was with the light intensity set to 100, then 75, then 50, then 37, then 25, and so on, all the way to 3, for roughly 5 stops of range in half-stop intervals.
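To make the stop arithmetic concrete, here is a minimal sketch that converts a panel setting to stops below full power and generates an ideal half-stop series. It assumes the panel's numeric setting is proportional to its light output, which I haven't verified; the function names are just for illustration.

```python
import math

def stops_below_full(setting, full=100.0):
    """Stops of attenuation relative to the full setting.

    Assumes light output is proportional to the numeric setting.
    """
    return math.log2(full / setting)

def half_stop_series(full=100.0, n_steps=10):
    """Ideal half-stop series: each step divides the light by sqrt(2)."""
    return [full / 2 ** (k / 2) for k in range(n_steps + 1)]

if __name__ == "__main__":
    for level in half_stop_series():
        print(f"setting {level:6.1f} -> {stops_below_full(level):.1f} stops down")
```

The ideal series lands close to the rounded settings used here (100, 71, 50, 35, 25, and so on), since the panel only accepts whole numbers.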

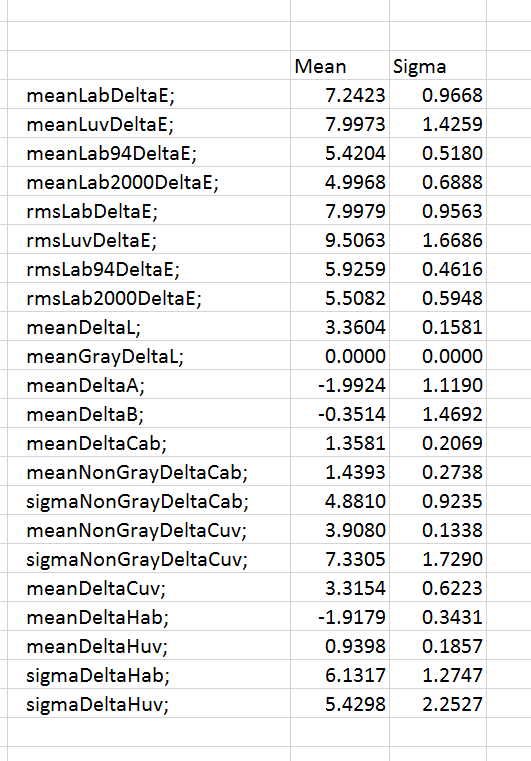

The simulated reference was lit with D50 light. I developed in Lr with Adobe Standard profile, and all controls at their default settings except that I white balanced to the third gray patch from the left. I computed the mean and standard deviation (sigma) of a bunch of aggregate color measures.
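For readers who want to see what a statistic like this looks like, here is a rough sketch of the mean and sigma of CIELab Delta E over a set of patches. It uses the 1976 Euclidean Delta E and a population standard deviation; both are assumptions on my part for this illustration, and the patch values are made up.

```python
import math
from statistics import mean, pstdev

def delta_e_ab(lab1, lab2):
    """CIE 1976 Delta E: Euclidean distance between two (L*, a*, b*) triples."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(lab1, lab2)))

def summarize(reference, measured):
    """Mean and population sigma of Delta E across corresponding patches."""
    des = [delta_e_ab(r, m) for r, m in zip(reference, measured)]
    return mean(des), pstdev(des)

if __name__ == "__main__":
    # Hypothetical Lab values for two patches, not data from this test.
    reference = [(50.0, 0.0, 0.0), (60.0, 10.0, 10.0)]
    measured = [(50.0, 3.0, 4.0), (60.0, 10.0, 10.0)]
    mu, sigma = summarize(reference, measured)
    print(f"mean Delta E = {mu:.2f}, sigma = {sigma:.2f}")
```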

Here are the resulting statistics:

Not too bad, but a long way from great.

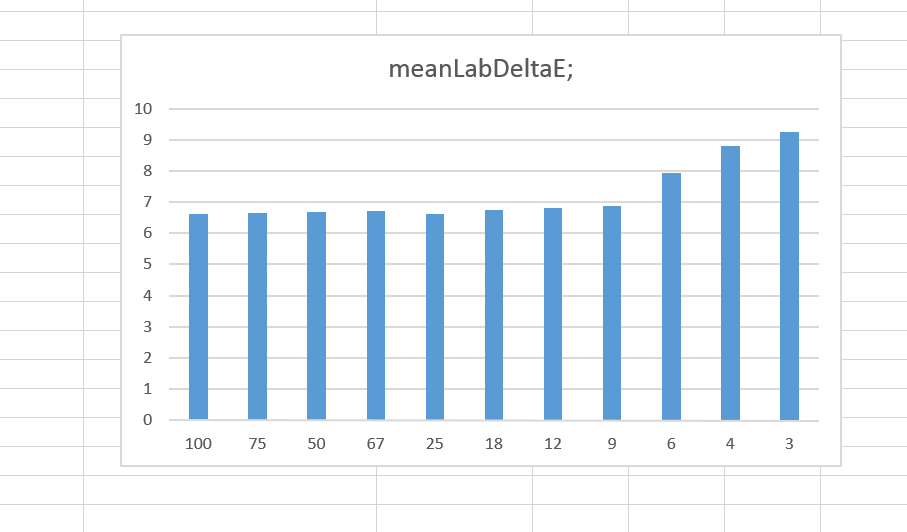

Let’s look at one of the measures, CIELab Delta E, versus light level setting:

You can see that things are pretty good until the setting is about 9, or a little over three stops down, then there’s a shift. Since we haven’t been using any levels below 50 so far, the work that I’ve already done should hold up. In the future, I’ll be careful about dimming the panels below 10.

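There’s one thing that I should mention. I ran the above tests during the day, and a little daylight leaked into the room. As the panels dim, that fixed amount of daylight becomes a larger share of the total illumination, so it’s possible that the panels are doing fine, and that the leaked daylight is the reason for the shift.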
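To get a feel for how much a small leak could matter, here is a back-of-the-envelope sketch. The ambient level is purely hypothetical (I didn't measure it), set here to 1% of the panels' full output, and the panel output is again assumed proportional to the setting.

```python
def ambient_fraction(setting, ambient=1.0, panel_full=100.0):
    """Share of total illumination that comes from ambient light.

    ambient and panel_full are in the same arbitrary units; the default
    ambient level of 1% of full panel output is a hypothetical value.
    """
    panel = panel_full * setting / 100.0
    return ambient / (ambient + panel)

if __name__ == "__main__":
    for setting in (100, 50, 25, 9, 3):
        print(f"setting {setting:3d}: ambient share {ambient_fraction(setting):.1%}")
```

Under that assumption, the daylight is about 1% of the light at the full setting but a quarter of it at a setting of 3, which would be enough to move the white point.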

Note that I white balanced the whole series to the exposure made with the lights at their brightest.
