My silence over the last few days has not been because I’m on vacation. On the contrary, I’ve been really busy figuring out how to process the succulent slit-scan images. Doing it all in Matlab offers the most flexibility, but there’s not much interactivity. I create a set of parameters, process a bunch of images with them, wait a few minutes for the computer to do its work, look at the results, and think up a new set of parameters to try.

That works OK, though not really well, as long as there’s no clipping of the sharpened images to deal with. If there is, I’m at sea. I haven’t found an automagic way to deal with clipping like the one Topaz Detail 3 uses, and messing around with some algorithms has given me great respect for the people who invented the Topaz Detail ones. It’s clear to me that I could spend weeks or months fiddling with code and still not come up with anything as good as what Topaz has.

Therefore, I’ve redefined success. I’m doing only the higher-frequency sharpening (smaller kernels, say up to a 15-pixel sigma) one-dimensionally, and I use Topaz Detail for the lower-frequency work. One reason I can get away with that is that the noise in the image is so low. Another is that I’m doing the first Topaz Detail pass on 56000×6000 pixel images that I’ll be squishing in the time (long) dimension later, so its round kernels will become elliptical after the squeeze. Doing the 1D sharpening with small kernels makes visible clipping less likely.
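For readers who want to try the one-dimensional part, here is a minimal Python/NumPy sketch of a 1D unsharp mask: blur along a single axis with a Gaussian, then add back a multiple of the difference between the image and the blur. It’s an illustration, not the Matlab code used here. The function names, the 4-sigma kernel truncation, the reflected edges, and the choice of axis are all assumptions; the post doesn’t say which dimension gets sharpened.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float, truncate: float = 4.0) -> np.ndarray:
    """Normalized 1D Gaussian kernel reaching ceil(truncate * sigma) pixels each side."""
    radius = int(np.ceil(truncate * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def blur_1d(image: np.ndarray, sigma: float, axis: int) -> np.ndarray:
    """Gaussian blur along one axis only, reflecting the image at its edges."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    pad = [(0, 0)] * image.ndim
    pad[axis] = (r, r)
    padded = np.pad(image, pad, mode="reflect")
    # A 'valid' convolution of each padded line returns the original length.
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), axis, padded)


def unsharp_1d(image, sigma: float, amount: float, axis: int) -> np.ndarray:
    """One-dimensional unsharp mask: image + amount * (image - blurred)."""
    image = np.asarray(image, dtype=np.float64)
    return image + amount * (image - blur_1d(image, sigma, axis))
```

Something like `unsharp_1d(channel, sigma=3.0, amount=2.0, axis=1)` sharpens only along the second axis and leaves the other untouched. `np.apply_along_axis` is slow on a 56000×6000 image; if SciPy is available, `scipy.ndimage.gaussian_filter1d` does the same single-axis blur much faster.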

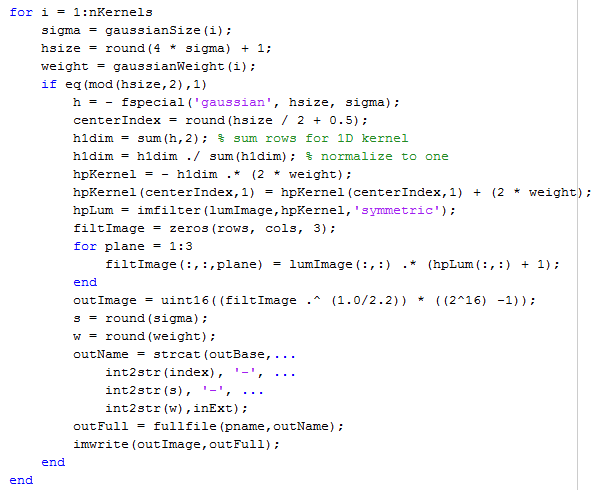

Another important reason for my progress is that I’ve found a way to make the adjustments to the 1D sharpening interactive. Rather than having the Matlab program construct the entire 1D-filtered image, I have it write out a monochromatic sharpened image for each kernel size, with aggressive (amazingly high, at least to me) weights:
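The layer-per-kernel idea can be sketched the same way: compute a monochrome version of the image, then produce one heavily weighted 1D-sharpened copy per kernel size. This is a hypothetical Python/NumPy sketch, not the actual program. The luminance weights, the kernel list `SIGMAS`, and the `WEIGHTS` values are placeholders, because the real ones aren’t listed in the text.

```python
import numpy as np

# Assumed luminance weights (the values the W3C compositing spec uses).
LUM_WEIGHTS = np.array([0.30, 0.59, 0.11])


def blur_1d(image, sigma, axis):
    """Gaussian blur along one axis (kernel truncated at 4 sigma, reflected edges)."""
    radius = int(np.ceil(4.0 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    k /= k.sum()
    pad = [(0, 0)] * image.ndim
    pad[axis] = (radius, radius)
    padded = np.pad(image, pad, mode="reflect")
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), axis, padded)


def sharpened_layers(rgb, sigmas, weights, axis):
    """Return {sigma: monochrome 1D-sharpened image}, one entry per kernel size."""
    y = np.asarray(rgb, dtype=np.float64) @ LUM_WEIGHTS  # (H, W, 3) -> (H, W)
    return {s: y + w * (y - blur_1d(y, s, axis)) for s, w in zip(sigmas, weights)}


# Hypothetical kernel sizes (up to 15-pixel sigma) and deliberately heavy weights.
SIGMAS = (1.0, 2.0, 4.0, 8.0, 15.0)
WEIGHTS = (4.0, 4.0, 4.0, 4.0, 4.0)
```

Each array in `sharpened_layers(rgb, SIGMAS, WEIGHTS, axis=1)` would then be saved as its own file for Photoshop; file writing is left out here.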

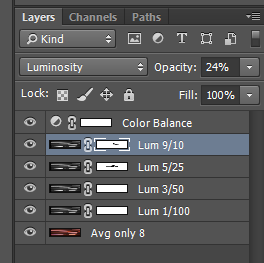

Then I bring the original image into Photoshop, add a layer for each of the sharpened images, and set those layers’ blend modes to “Luminosity”.

Then I adjust each layer’s opacity to taste.

Finally, when I see objectionable clipping, I brush black into the layer mask for the layer(s) that are making it happen.
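To reason about what the Photoshop stack is doing, here’s a rough numerical model of it in Python. Each monochrome layer is blended in a simplified “Luminosity” mode (shift all three channels so the pixel’s luminance matches the layer), then mixed with what’s below according to the layer’s opacity and mask. This is an approximation, not Photoshop’s implementation: it follows the SetLum step of the W3C Compositing and Blending spec’s luminosity mode but omits its ClipColor step, and it clips only once, at the end, returning a map of the pixels that went out of range (the places where you’d brush black into a mask).

```python
import numpy as np

# Luminance weights from the W3C spec's non-separable blend modes (an assumption
# about how closely Photoshop's own Luminosity mode matches).
LUM_W = np.array([0.30, 0.59, 0.11])


def lum(rgb):
    """Per-pixel luminance of an (H, W, 3) array."""
    return rgb @ LUM_W


def composite_luminosity_layers(base_rgb, layers, opacities, masks=None):
    """Stack monochrome layers over base_rgb in a simplified Luminosity mode.

    base_rgb:  (H, W, 3) floats in [0, 1]
    layers:    list of (H, W) monochrome sharpened images
    opacities: list of layer opacities in [0, 1]
    masks:     optional list of (H, W) masks in [0, 1]; 0 hides the layer
    Returns (composite clipped to [0, 1], boolean map of pixels that clipped).
    """
    out = np.asarray(base_rgb, dtype=np.float64).copy()
    if masks is None:
        masks = [np.ones_like(layer, dtype=np.float64) for layer in layers]
    for layer, alpha, mask in zip(layers, opacities, masks):
        # SetLum without ClipColor: shift R, G, B equally so luminance == layer.
        delta = layer - lum(out)
        blended = out + delta[..., None]
        # Opacity times mask mixes the blended result with the stack below.
        w = (alpha * mask)[..., None]
        out = (1.0 - w) * out + w * blended
    clipped = np.any((out < 0.0) | (out > 1.0), axis=-1)
    return np.clip(out, 0.0, 1.0), clipped
```

Lowering an opacity scales that layer’s luminance shift everywhere, while a zero in the mask removes it only locally, which is why masking is the natural tool for isolated clipping.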

Not mathematically elegant. Not really what I was looking for at all when I started this project. But it gets the job done, and well.

I may run into a problem with this method down the road, but it’s working for me on the one image I’ve tried it on.

One thing I tried that sort of worked for highlight clipping was to scale the floating-point image so that the brightest value in any color plane was unity, save it as a 32-bit floating-point TIFF, import that into Lightroom, and use that program’s tone-mapping tools. Lr treats the data in 32-bit FP files as scene-referred, so its tools are appropriate for dealing with clipping; for example, Lr’s Exposure tool saturates non-linearly rather than clipping abruptly.
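The normalization step is simple enough to show directly. Here is a minimal NumPy sketch, assuming the image is an (H, W, 3) floating-point array. Dividing every plane by the single global maximum keeps the color balance intact while making the brightest sample exactly 1.0.

```python
import numpy as np


def normalize_to_unity(img):
    """Scale so the largest value in any color plane is exactly 1.0 (float32 output)."""
    img = np.asarray(img, dtype=np.float64)
    peak = img.max()  # one maximum over all pixels and all color planes
    if peak <= 0:
        raise ValueError("image has no positive values to normalize")
    return (img / peak).astype(np.float32)
```

Writing the result as a 32-bit floating-point TIFF is left to whatever image library you use; tifffile, for example, can write float32 arrays.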

The technique worked moderately well, but I had some problems in the shadow areas. I decided to abandon it, and so I never got to the more difficult problem of what to do about black clipping. I did notice that Lr truncates negative values in 32-bit FP TIFFs.
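Since Lightroom drops negative values, it may be worth measuring how much of the image is below zero before exporting. This is a small hypothetical check in NumPy; `simulate_negative_truncation` just models the behavior described above as clipping at zero, which is an assumption about what Lr does internally.

```python
import numpy as np


def negative_samples_per_channel(img):
    """Fraction of pixels below zero in each color plane of an (H, W, C) image."""
    img = np.asarray(img, dtype=np.float64)
    return (img < 0).mean(axis=(0, 1))


def simulate_negative_truncation(img):
    """Model the observed import behavior: negative values become 0."""
    return np.maximum(np.asarray(img, dtype=np.float64), 0.0)
```

Comparing an image with its truncated version shows where shadow detail would be lost on import.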
