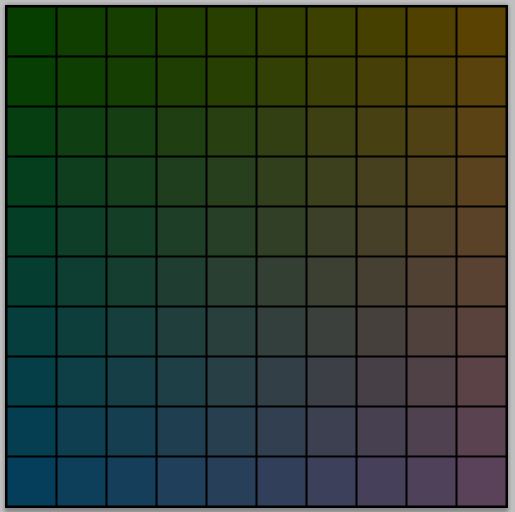

I’ve been working on ways to reduce the number of iterations required to calibrate a camera for UniWB, while requiring only two programs: Photoshop and Rawdigger. I first wrote a little Matlab program to generate a 10×10 grid of patches with a constant green value of 64 and red and blue values that step from 0 to 90 in ten-unit increments.

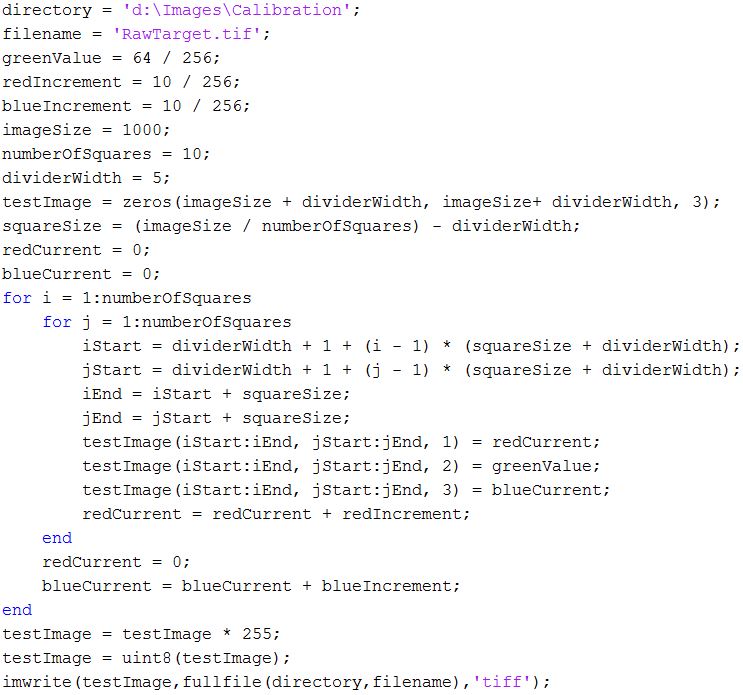

Here’s the program:
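A rough Python equivalent, offered as a sketch of the same idea rather than the original Matlab code. The patch size of 50 pixels, the choice of red varying across columns and blue down rows, and the names `make_grid` and `write_ppm` are all my assumptions for illustration.

```python
# Sketch only: patch size and axis assignment are assumptions, not from the original.
GRID = 10     # 10x10 patches
STEP = 10     # ten-unit increments in red and blue
GREEN = 64    # constant green value
PATCH = 50    # hypothetical patch size in pixels


def make_grid(grid=GRID, step=STEP, green=GREEN, patch=PATCH):
    """Return the image as a list of rows, each a list of (R, G, B) tuples."""
    rows = []
    for y in range(grid * patch):
        blue = (y // patch) * step        # assumed: blue steps down the rows
        row = []
        for x in range(grid * patch):
            red = (x // patch) * step     # assumed: red steps across the columns
            row.append((red, green, blue))
        rows.append(row)
    return rows


def write_ppm(path, rows):
    """Write the pixels as a binary PPM (P6) file with 8 bits per channel."""
    height, width = len(rows), len(rows[0])
    with open(path, "wb") as f:
        f.write(f"P6\n{width} {height}\n255\n".encode("ascii"))
        for row in rows:
            f.write(bytes(channel for pixel in row for channel in pixel))
```

Calling `write_ppm("uniwb_grid.ppm", make_grid())` would write the grid to disk (the file name is arbitrary). If your editor doesn't read PPM, convert the file to TIFF or PNG first.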

I read the resulting image into Photoshop and set the color space to Adobe RGB (1998).

Here’s what the image looked like:

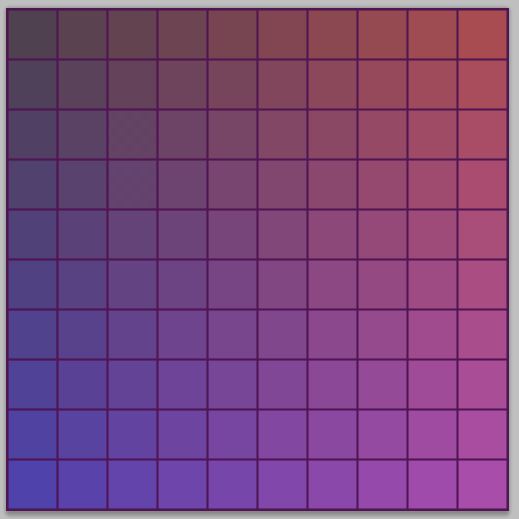

I then needed to put the right base values of red and blue in. I did that by creating a new layer, setting the blending mode to Linear Dodge (Add), and filling it with R=80, G=0, B=80. This gave me a grid of values from R=80, G=64, B=80 to R=170, G=64, B=170. I figured that would be a good image to use as the starting point for UniWB. If it turns out to be the wrong range for a particular camera, a user could just fill the Linear Dodge layer with another color.
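The arithmetic behind that layer is simple enough to check by hand. Here is a small Python sketch, assuming Linear Dodge (Add) at 100% opacity behaves as a per-channel sum clipped at 255; the function name `linear_dodge` is mine, not anything from Photoshop.

```python
def linear_dodge(base, fill):
    """Linear Dodge (Add): per-channel sum, clipped to the 8-bit maximum."""
    return tuple(min(255, b + f) for b, f in zip(base, fill))


FILL = (80, 0, 80)  # fill color of the Linear Dodge layer

darkest = linear_dodge((0, 64, 0), FILL)      # first patch of the grid
brightest = linear_dodge((90, 64, 90), FILL)  # last patch of the grid

print(darkest)    # (80, 64, 80)
print(brightest)  # (170, 64, 170)
```

Changing `FILL` shifts the whole red and blue range at once, which is why refilling the layer is enough to retarget the grid for a different camera.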

Here’s what the two layers look like together:

Thus, the first step of UniWB calibration would be to photograph this target from the monitor with Photoshop running, and bring the resultant image into Rawdigger.

We’ll see how that works in the next post.
