Recently I’ve seen two kinds of posts that share the same fundamental flaw. This is not the first time I’ve seen either of these mental errors in action, and I’m sure it won’t be the last. I’ve had a hard time getting some of the people making the errors to see what’s wrong with their reasoning and methodology, and I’d like to do what I can to prevent recurrence of this kind of overly facile thinking in the future. Hence this post.

Here are the two types of posts:

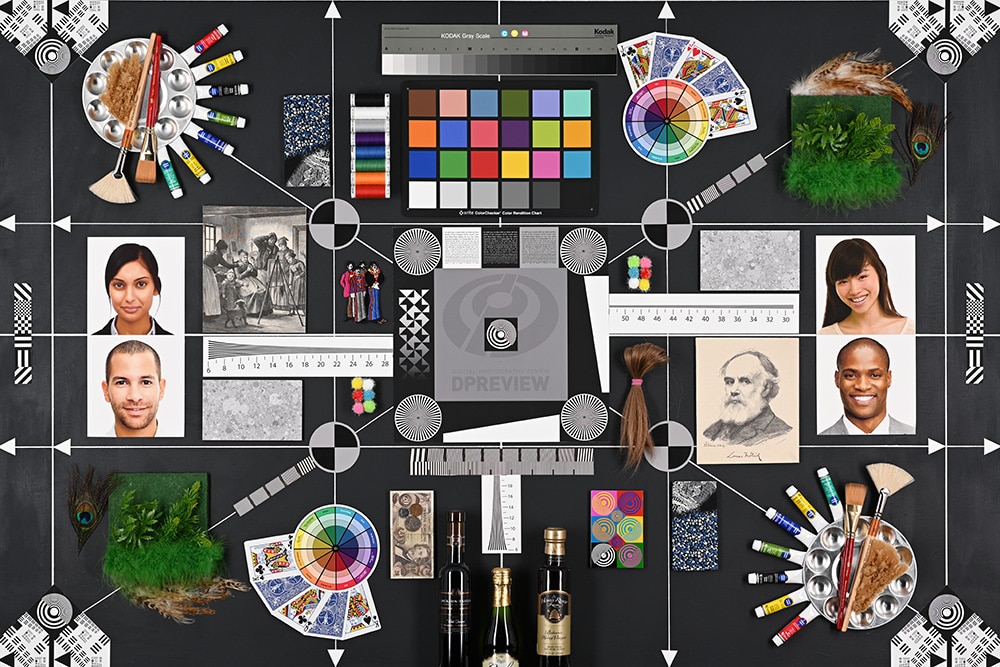

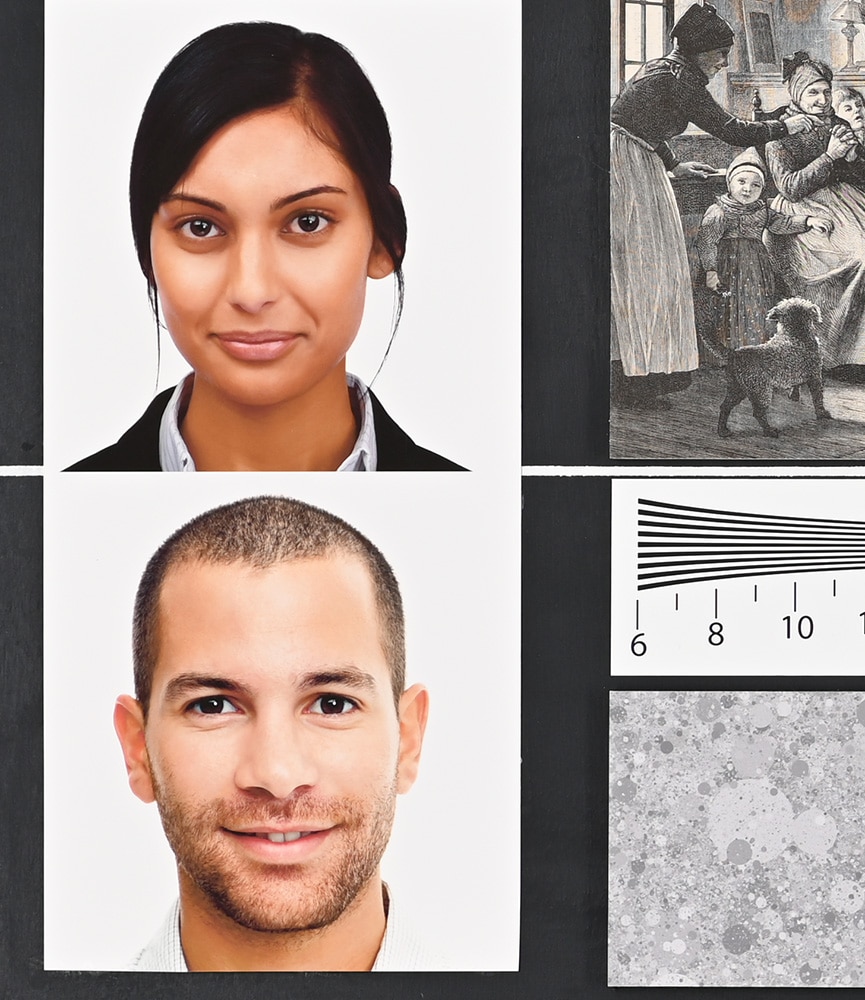

- Drawing conclusions about how a camera and/or raw developer renders the colors in human skin by comparing the portraits in the DPR studio scene across cameras and raw developers.

- Creating color profiles for cameras by photographing targets created on an inkjet printer.

Before I get into the details of the mental error common to both exercises, please let me tell a joke, well known among engineers, that illustrates at a high level what’s going on.

It’s midnight. There’s a bar, a streetlight, and a cop. A drunk staggers out of the shadows, and starts to poke around under the streetlight. The cop asks him what he’s doing. The drunk replies, “I’m looking for my keys.”

“Did you lose them here?” says the cop.

“No”, says the drunk. “I lost them over there, but the light is much better here.”

Here, in a nutshell, is how the two types of posts are looking for the keys under the lamp post.

- The portraits in the DPR studio scene are printed on a photographic printer; the colors in those portraits are, at best, metameric matches to the colors of actual human skin.

- Unless you’re photographing inkjet printer output, the colors in the camera calibration target in the experiment above are, at best, metameric matches to the colors of your actual subjects.

There’s a big word in both bullets above: metameric. What’s it mean in this context? Specifically, what’s a metameric match and why is that inadequate?

Metamerism is a property of the human vision system, and occurs because the color-normal (insert caveats here) human reduces spectra falling on the retina to three coordinates, and thus there are an infinite number of spectra that can produce colors that look the same to our putatively color-normal person. That phenomenon is called metamerism, and spectra that resolve to the same color are called metamers. A metameric match occurs when two samples with different spectra are perceived by a color-normal person as the same color. Metameric failure is the lack of occurrence of a metameric match when one might be expected.
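A small numerical sketch may make the definition concrete. It assumes a made-up observer with three sensor types sampled in only four wavelength bands (the numbers in `OBSERVER` are illustrative, not measured cone data), and builds a second spectrum by adding a vector that this observer cannot see:

```python
def det3(m):
    """Determinant of a 3x3 matrix."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def null_vector(sens):
    """Vector n with sens . n == 0 for a 3x4 matrix, built from signed 3x3 minors."""
    n = []
    for j in range(4):
        minor = [[row[k] for k in range(4) if k != j] for row in sens]
        n.append((-1) ** j * det3(minor))
    return n


def respond(sens, spectrum):
    """Reduce a spectrum to three coordinates, one per sensor type."""
    return [sum(s * p for s, p in zip(row, spectrum)) for row in sens]


# Hypothetical sensitivities in four wavelength bands (invented numbers).
OBSERVER = [
    [0.9, 0.3, 0.0, 0.0],  # short-wavelength sensor
    [0.1, 0.8, 0.6, 0.1],  # medium-wavelength sensor
    [0.0, 0.4, 0.9, 0.5],  # long-wavelength sensor
]

spectrum_a = [0.5, 0.5, 0.5, 0.5]

# Add a scaled "invisible" vector to get a physically different spectrum.
invisible = null_vector(OBSERVER)
scale = 0.2 / max(abs(x) for x in invisible)
spectrum_b = [a + scale * x for a, x in zip(spectrum_a, invisible)]

print("spectrum A:", spectrum_a, "->", respond(OBSERVER, spectrum_a))
print("spectrum B:", spectrum_b, "->", respond(OBSERVER, spectrum_b))
```

The two spectra differ band by band, yet the three coordinates agree to within rounding. Any multiple of the invisible vector would do, which is where the infinite number of metamers comes from; with finely sampled spectra there are many more such invisible directions.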

Let me do a bit of self-plagiarism from an earlier blog post and give you an example that illustrates several kinds of metameric failure.

Let’s imagine that we’re engineers working for an automobile manufacturer and developing a vehicle with a carbon-fiber roof, aluminum fenders, and plastic bumper covers. The marketing folks have picked out ten paint colors. They don’t care that we meet their color specs exactly, but they do care that the roof matches the fenders and the bumpers match both. We meet with our paint suppliers, and find out that the paint formulations have to be slightly different so that they’ll adhere properly to the three different substrates, and so the bumpers can survive small impacts without the paint flaking off, and so the weave of the carbon fiber won’t show through and wreck the infinitely-deep pool high gloss effect that we’re going for.

Turns out that the reflectance spectra of all three paints are somewhat different, and we mix the paints so that we get a metameric match for a color normal person for each of the ten colors when the car is parked in bright Detroit sunshine.

What can go wrong?

Plenty.

We have prototypes painted in each of the colors, park them in front of the development building on a sunny day, and call in the brass. Two of them, both men, are what we used to unfeelingly call color blind. One has protanopia, one form of red-green colorblindness (no rho cell contribution), and the other has deuteranopia, the other form of red-green colorblindness (no gamma cell contribution). About one percent of males suffer from each.

Each of the color-blind persons says that some of the paints don’t match, but they identify different paint pairs as being the problem ones. This is called observer metameric failure. Everybody else says that the colors match just fine and can’t figure out what’s wrong with the two guys who are colorblind. There’s one woman who has a rare condition called tetrachromacy (four kinds of cone cells), and none of the color pairs match for her. That’s another kind of observer metameric failure.

Now we call in the photographers and have them take pictures of the cars in bright sunshine. In some of the pictures, the color pairs don’t match. This is called capture metameric failure. The odd thing is that the colors that don’t match for the Phase One shooter are different from the ones that don’t match for the guy with the Nikon.

We bring the cars indoors for a focus group. We carefully screen the group to eliminate all colorblind people and tetrachromats. The indoor lighting is a mixture of halogen and fluorescent lighting. Several people complain that the colors on many of the cars don’t match each other. When this is pointed out to the focus group as a potential problem, all agree that some colors don’t match, and they all agree on which colors they are. This is called illuminant metameric failure.

The photographers take pictures of the cars in the studio using high CRI 3200K LED lighting, and a bunch of colors don’t match, but they’re not all the same colors that didn’t match when the same photographers used the same cameras to take pictures of the cars outside. This is a combination of illuminant metameric failure and capture metameric failure.
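The paint story can be sketched numerically. Everything below is hypothetical: four coarse wavelength bands, invented sensitivities for the eye and for one camera, two invented illuminants, and two paint reflectances chosen so that they match for the eye under the flat "daylight" illuminant.

```python
import math


def respond(sens, illuminant, reflectance):
    """Sensor signals: sensitivity x illuminant power x reflectance, summed over bands."""
    return [
        sum(s * e * r for s, e, r in zip(row, illuminant, reflectance))
        for row in sens
    ]


def matches(a, b, tol=1e-9):
    """True when two sets of sensor signals agree within a small tolerance."""
    return all(math.isclose(x, y, abs_tol=tol) for x, y in zip(a, b))


# Invented sensitivities in four wavelength bands (short to long).
EYE = [
    [0.8, 0.9, 0.1, 0.0],
    [0.1, 0.6, 0.7, 0.2],
    [0.0, 0.2, 0.7, 0.5],
]
CAMERA = [
    [0.9, 0.5, 0.0, 0.0],
    [0.0, 0.7, 0.8, 0.1],
    [0.0, 0.1, 0.6, 0.9],
]

# Invented illuminants: flat "daylight" and a reddish "indoor" mix.
DAYLIGHT = [1.0, 1.0, 1.0, 1.0]
INDOOR = [0.4, 0.7, 1.0, 1.3]

# Two paints with different reflectance spectra.
ROOF = [0.4, 0.4, 0.4, 0.4]
FENDER = [0.5, 0.3, 0.5, 0.3]

eye_daylight = matches(respond(EYE, DAYLIGHT, ROOF), respond(EYE, DAYLIGHT, FENDER))
eye_indoor = matches(respond(EYE, INDOOR, ROOF), respond(EYE, INDOOR, FENDER))
camera_daylight = matches(respond(CAMERA, DAYLIGHT, ROOF), respond(CAMERA, DAYLIGHT, FENDER))

print("eye, daylight:   ", "match" if eye_daylight else "mismatch")
print("eye, indoor:     ", "match" if eye_indoor else "mismatch")
print("camera, daylight:", "match" if camera_daylight else "mismatch")
```

In this toy setup the paints match for the eye in daylight, stop matching for the same eye under the indoor light (illuminant metameric failure), and do not match for the camera even in daylight (capture metameric failure).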

We find a set of pictures where the colors match, and the photographer prints them out on an inkjet printer. We look at the prints in the 5000K proofing booth, and the silver car looks neutral. We take the prints into a meeting with the ad agency in a fluorescent-lit conference room, and the silver car looks yellowish. All the observers are color normal. This is a combination of one or more instances of illuminant metameric failure. In the viewing booth, the observer is adapting to the white surround, and the spectrum of the inks depicting the silver car resolves to a color with a chromaticity similar to the surround. In the conference room, the observer is adapting to the white surround, and the spectrum of the inks depicting the silver car resolves to a color with a chromaticity different from the surround. The fact that the printer uses fluorescent yellow ink and the paper has optical brighteners doesn’t help matters.

With that in mind, let’s take a look at metamerism in the two cases above.

In the first case, the portraits in the DPR test scene, there are some obvious difficulties even before we get to metamerism. The captured image from which the print in the scene was made may have color errors in the developed file, stemming from a combination of the color filter array (CFA) of the camera used to make the portraits and the raw developer and color profile employed. The print may not faithfully represent the colors in the developed file. The print may have faded over time. But let’s set all that aside and assume that the colors in the portrait photos properly represent the colors of the subject. Even if that’s true, the spectra of the inkjet print will be different from that of the subjects’ skin, and thus the match between the print and the subject will be a metameric one. So when the studio scene is captured by the camera under test, the colors in the developed capture will reflect how accurately the camera and associated raw developer render colors in the skin-tone range rendered by an inkjet printer, not how the camera/developer combination renders the colors in actual human skin.

In order to test how well the camera/developer reproduce human skin tones, the subject needs to be either an actual human being, or a test chart constructed so that each patch’s spectrum matches that of some human skin. The lighting spectrum needs to be the same as that of light that the user of the studio scene cares about, but that’s usually less of an issue. If that is done, the capture metameric error of the studio scene would match the capture metameric error associated with an actual human subject, and the skin tones in the scene will reflect how the actual camera captures skin tones. As things stand now, unless your chosen use for the camera is making pictures of inkjet prints, the DPR studio scene tells you nothing about how well the camera and associated raw developer will render human skin tones.

Finding the keys would be difficult if we used actual human subjects in the DPR test scene or came up with test patches with the required reflectance spectra. It’s much easier to look under the lamp post and use an inkjet print.

In the second case, we wish to present known spectra to the camera so that we can see how the camera responds to those spectra and use that information to make either an accurate color profile for the camera, or a color profile whose inaccuracies are by design. The readily available test targets have from 24 to fewer than 200 patches, and many of those are grays. We’d also like more patches. Some people are making more patches by printing their test targets with an inkjet printer. That gives more colors, but all the colors are created from the same 4 to 8 inks, applied at various droplet sizes with various dilutions. Those inks mix on the paper in a way more complex than the additive mixing of RGB light, but the spectra of the mixed colors is a function of the spectra of the individual inks. When you print targets of several hundred patches with an inkjet printer, you are by no means getting several hundred independent spectra, and you are getting spectra that are not related to those of natural-world subjects.
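Here is a rough sketch of why hundreds of printed patches do not yield hundreds of independent spectra. It rests on a deliberately simplified assumption that is mine, not a description of any real printer: the optical densities of the inks simply add in proportion to the amount of each ink laid down. The four ink density curves in `INKS` are invented, and real ink-on-paper mixing is more complicated than this.

```python
import random

# Invented optical densities of four inks in six wavelength bands (short to long).
INKS = [
    [0.1, 0.1, 0.2, 0.8, 1.0, 1.0],  # cyan-like: absorbs long wavelengths
    [0.2, 0.6, 1.0, 0.9, 0.2, 0.1],  # magenta-like: absorbs the middle
    [1.0, 0.9, 0.3, 0.1, 0.0, 0.0],  # yellow-like: absorbs short wavelengths
    [1.0, 1.0, 1.0, 1.0, 1.0, 1.0],  # black: absorbs everywhere
]


def patch_density(amounts):
    """Assumed model: ink densities add in proportion to the amount of each ink."""
    bands = range(len(INKS[0]))
    return [sum(a * ink[band] for a, ink in zip(amounts, INKS)) for band in bands]


def rank(rows, tol=1e-9):
    """Numerical rank via Gaussian elimination with partial pivoting."""
    m = [list(r) for r in rows]
    found = 0
    for col in range(len(m[0])):
        if found == len(m):
            break
        pivot = max(range(found, len(m)), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            continue  # nothing new in this column
        m[found], m[pivot] = m[pivot], m[found]
        for r in range(found + 1, len(m)):
            factor = m[r][col] / m[found][col]
            for c in range(col, len(m[0])):
                m[r][c] -= factor * m[found][c]
        found += 1
    return found


# Print 300 patches with random ink amounts (fixed seed for repeatability).
rng = random.Random(0)
density_spectra = [patch_density([rng.random() for _ in INKS]) for _ in range(300)]
independent = rank(density_spectra)

print(len(density_spectra), "patches,", independent, "independent density spectra")
```

Under this assumed model, the 300 patches span no more dimensions than there are inks. A more realistic mixing model would change the details, but every patch would still be determined by the same handful of ink spectra.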

In order to make a profile useful for natural-world subject matter, the patches need to be either natural-world objects, or a test chart constructed so that each patch’s spectrum matches that of some natural-world object. The lighting spectrum needs to be the same as that of light that the user of the profile cares about. If that is done, the capture metameric error of the patches would match the capture metameric error associated with the intended subject matter, and the colors in the captured scene will be acceptably accurate or biased away from accuracy in the desired direction. With inkjet-printed targets, unless your chosen use for the camera is making pictures of inkjet prints, the profiles will produce colors that depart from the real ones in unknown and uncontrollable ways.

Finding the keys would be difficult if we used actual natural objects in the test target or came up with test patches with the required reflectance spectra. It’s much easier to look under the lamp post and use an inkjet print for a target.

Good info, thanks a lot!

My understanding is also that the prints are fading.

It is good to see a post like this, written by a recognized expert. If this question were raised by some less known technician like me it could be taken as trolling. I cannot see how someone can disagree with your explanation. It is objectively right. But that may scare people whose goal in photography is to achieve a perfect or faithful reproduction of reality. Ironically, this is more common in engineers than in experienced photographers.

And there is much more to increase the pain. Test targets are all metameric, be they from inkjet printers or the commercially available ones. The latter are very useful, mostly in digital photography when combined with software profiling. But that will always be just a starting point because the inks of the charts may not match (emission + capture medium response) with those of fabrics, artworks, objects or car paint, as in your example. And skin! So, you are absolutely right, it is better to test “actual human beings”. But then you must have the person available to be compared with the output, under the same lighting! No way, the image will hardly be seen under the same conditions as captured!

More! If you test skin tones using real models, there is variation even in a hypothetical eugenic population. The same person may show many shades depending on time, lighting, condition or body part. And makeup too, which are also metameric, argh! I can recall so many cases of wedding photos where the brides looked terrible due to the color properties of their metameric makeup, pristine in real life but awful in images taken with the bluish light of portable flashes. That was common in the film era, when correction was almost impossible.

What we take from this is: There is no skin pattern! So, skin tones in test targets may just give one idea of what you can expect from your system, but they are as imperfect as inkjet patches or DPR’s color prints. Although engineers keep devising quantitative methods to achieve fidelity, the final decision is driven by human perception by visual appreciation and comparison, despite the variation and limitations of our natural hardware. That is where our main tool takes the principal role: the experience to communicate and transmit the mood we wish to touch the audience, with images that may be soft or contrasty, dull or saturated, cool or warm, and so on.

And more! The screen is (hugely) metameric and has (huge) variations. It is insane to try to match profiles across different brands of monitors in one studio, so what can we expect in a large population? And then, no matter how well you calibrate your monitors, you will have a plethora of reactions from different prints and papers. My workflow is to compare one print (seen under a combination of standard 5000K and 3200K gallery lights) to the image on the monitor and adjust the latter to “metamericaly” emulate the printed result, which is my target output. Usually my monitors are dull, to mimic the shorter range of printed paper, but that way I have a good starting point for new work. Even so, I always expect to make extra corrections after test prints, my recycling bin full of wasted paper, to achieve the best interpretation or the expression I have in mind, which is rather personal, sensorial and circumstantial.

When faced with life’s complexities, it is tempting to throw up one’s hands and say, “It’s all too complicated to deal with.” In the case of color reproduction, that’s not my approach at all. I deal with the things I can deal with, am cognizant of the things I can’t, and bridge the gaps with workarounds.

For example, this: “What we take from this is: There is no skin pattern! So, skin tones in test targets may just give one idea of what you can expect from your system, but they are as imperfect as inkjet patches or DPR’s color prints. ” I think you can learn a lot by examining the spectra of real-world objects, including skin tones, and targets that span the basis function set of those tones are very useful.

> “And makeup too, which are also metameric, argh!”

I saw a similar discussion about “masking (sp?) makeup”. IIRC, the conclusion was that cheap makeup was hopelessly metameric, but there is a good chance to find a brand of expensive makeup which would be a good match for your skin! (It was for Caucasian skin, IIRC…)

If so: for metameric cameras, one could try to make color targets made of expensive brands of masking creams. (Of course, the problem is that they are not designed to survive for years…)

What, then, is to be done?

Take a spectrophotometer to several skin patches in different lighting conditions and attempt to duplicate these spectra by sweeping with a tunable frequency monochromatic laser integrating over a long exposure?

Or measuring sensor spectral response and reflected spectra and doing the above only in simulation?

Measuring the sensor spectral response is definitely the high road here, but is beyond the skill set and time allocatable to the task for most photographers.

The CC SG has several patches intended to replicate skin tone spectra.

Speaking of measuring sensor spectral response…

This was just posted: a budget way to measure the spectral response of the sensor.

https://discuss.pixls.us/t/the-quest-for-good-color-2-spectral-profiles-on-the-cheap/18286

I agree. I found SSF data for my A7RII and it’s a beautiful thing to be able to quickly create custom profiles for any illuminant using datasets from multiracial skin, hair, and eye spectra, Color Checker SG, color space gamut borders, etc, without ever needing to shoot a target. If camera SSF data were more readily available I feel like this could be the future of digital photography. Of course the software would also need to evolve beyond command line apps. As powerful as Argyll and Dcamprof are, they’ll never gain popular use.

This is a nice discussion. Jim has touched the wounds (as we say in my country), right on the spot! By raising the question of how we test the reproduction of skin tones, he opened the space for a debate that can be as long as a thick treatise.

I agree with him, it is nonsense to use a color photograph to evaluate skin tone fidelity. I also agree that even if it is impossible to establish a standard for skin tone, so many are the variations and variables, we can “examine the spectra of skin tones, spanning the basis function set of those tones”. So I envision that our test should comprise at least five kids, selected as UNICEF selects their models, who should stand still while we check the spectra from their chins, cheeks and foreheads under the same light in which the test image will be captured. Things get more complicated as we have to decide how those spectra will be emulated by RGB monitors or 4, 6, 8, 10 color prints. Engineers make things easy for us providing every kind of profile used in the translation from real life objects to image media. And instead of using targets that may vary out of control, such as the classical white woman surrounded by common objects in the old days, the solution is to design a test target that may best represent all the important families of tones and shades for the average photographer. This is wise, because we can have full control of the variables, knowing precisely the properties of each patch and how they must be translated into numerical values and then design profiles suited for each kind of intent. So, despite the limited, metameric and fake appearance of skin tones on test charts, they offer precisely measurable information, which cannot be accessed with DPR’s color print, even more when faded, nor from a real model if we cannot check how pale or suntanned she was. In fact, all those other color objects on the scene offer just ideas of color properties, not precise information, all but the color test chart and the gray scale.

This takes us to the other fallacy, the inkjet test charts. The main problem is the lack of quantitative information about the color patches produced by the particular ink set. In a private test it may be OK, if one can make a densitometric or even visual comparison of the result with the original. But it is of little use if that information cannot be accessed by the public. However, it must be pointed out that if the homemade scales are metameric, so are the commercial ones. In reproduction of artwork, many times, if you follow the color separation guide you end with a poor result because of the different behavior of dyes and pigments as well as those from the supports. In these cases, you start with your basic setup but the final decision is made by visual comparison.

Thanks for a very clear text!

Two remarks:

• “the spectra of the mixed colors is a function of the spectra of the individual inks”.

This holds when pigment dots do not overlap. Do they (for “interesting” colors)? You saw/published many magnified pictures, so I hope you can answer this… (Overlapping mixes spectra not only additively, but also multiplicatively — which would result in a much larger variety.)

• “In order to test how well the camera/developer reproduce human skin tones, the subject needs to be either an actual human being, or a test chart constructed so that each patch’s spectrum matches that of some human skin.”

… Or maybe not. Keep in mind that for 95% of people, a 100%-good match is possible: just have spectral sensitivities that match linear combinations of “non-mutated” cones. And there may be many ways to refute this!

For example, one can have 10 samples which are all metameric to each other (indistinguishable for 95% of people, given these particular lighting conditions). A good camera would produce identical results.

Another approach may be to have a gradient made of very narrow-band colors with constant (or known) energy density. This can be made by collimating light from a “natural spectrum” LED (such as “cold” Sunlike or Waveform’s Absolute) onto a diffraction grating. Then one could find the reproduction of ANY color by a DSP over the image of such a gradient.

(Finally, it may happen that the approach of using masking creams — discussed above — can be made practical. However, I do not recall knowing somebody who designs such creams…)

I am one of those who throw up their hands. My skill set definitely lies in different fields, not science. Life science maybe 😉

So here comes my dumb question: Am I right if I “just” calibrate my workflow and be done, without diving into metamerism, or is even that (calibration) of no use?

Humbly, Robert

If by calibrate your workflow, you mean calibrate your monitor (my first priority), calibrate your camera, and calibrate your printer, yes, you will be far better off than if you had done none of those things. Be aware that only the monitor calibration is easy, and the camera calibration can be tricky.

Thank you Jim, that was exactly what I wanted to hear / read. And now to something completely different: Culling of images in my camper standing under the Alps with a glass of prosecco!

Ouch: just read up a bit on camera calibration – this one has to wait, monitor and printer are already done. Thanks again!

Am I glad I only print (and post) black-and-white images.

I have enough difficulties getting my print brightness correspond to my screen brightness.

Jim, thanks for the article. I was very fond of the colors (and skin tones) the a6400 produces OOC. What I am observing is a mismatch between the output of the a6400 OOC JPEG colors and the colors that Imaging Edge (and Lightroom) generate. Both render a yellow cast across the whole image. On the other hand, OOC from the a6500 and Imaging Edge are all but identical (and LR Camera Standard very close, too). EOS R OOC JPEGs and DPP4 output match completely, while LR Camera Standard v2 is off. So, no, the test images in dpreview are not helpful in finding “the natural looking” skin tone. They are helpful to compare output from different raw developers with the OOC JPEG.

P.S.: It seems as if Sony themselves are using a bad profile in Imaging Edge for the a6400 (and it seems for the a6100 and a6600 as well) with a yellowish cast. It also seems as if that error is somehow entering into Lightroom profiles (does Sony provide those profiles to Adobe?). I have sold my a6400 because I can’t match the OOC JPEGs with LR, and DxO (which comes close) runs too slow on my PC (and has other quirks).

If there’s a yellow cast across the whole image, that sounds like a white balance problem. Are you white balancing to a gray card in both cases?

I am using the images downloaded from dpreview and leave WB as is. Using WB removes the yellow cast but then of course OOC JPEG and the JPEG developed in IE or LR differ even more. I have tried WB adjustments in Adobe DNG Profile Editor to no avail.

In my opinion, you need to get white balance right before you judge color. Is the color in the JPEG image your idea of perfection?

You are right about the WB. But I would expect consistency between the OOC, Manufacturer’s software like Imaging Edge or DPP, and the Lightroom Camera Standard profile (not the Adobe Standard, which is Adobe’s own interpretation). For the a6500 and the NEX6, that consistency is almost perfect. So is consistency between the EOS R OOC and DPP4 (the LR Camera Standard V2 profile is significantly off).

Yes, I did like the OOC JPEGs the a6400 produced – but I couldn’t get that yellow shade out of the JPEGs from IE and LR. Applying WB removed the yellow shade only to introduce a magenta cast. I have OOC and IE, LR and DxO JPEG for several cameras here: https://www.amazon.de/clouddrive/share/i6gynrxocwtBAZ4vX0GVEJ1XczXwZd3Uw77fyhg8JTC If you don’t know what to do with your time (which I doubt 🙂 ) take a look. Of course I know that color is subjective and largely what one wants to make of it. Once I edit an image, colors change. But still, having a starting point that is agreeable helps. The comparison of the dpreview test charts is based on the assumption (never assume) that the JPEG file is OOC and that there has not been any significant change in lighting if the JPEG and RAW file numbers are different.

Thanks for your time. And yes, I sold my a6400 yesterday because the better AF is not as important to me. Ordered a second a6500 body which currently sells at the price of the a6400. I like the OOC colors of the a6500 which are close to the a6400 OOCs.

a) thanks jim for posting this article; as usual, excellent explanation. i am a retired engineer, subject to the desire to calibrate, but as a hobbyist, i know enough to already know most of what you said, understand the utter futility of trying to achieve perfection, and i’m thankful i’m not being paid by someone to ‘get it right’.

b) beyond everything you wrote, and beyond the excellent comments, there’s the fact that humans are not all created equal. ignoring all the variations of colorblindness, we do not all have the same number of red, green, and blue cones. nor is the ratio of each to the other the same throughout the entire ‘normal’ human race. therefore, even if you had the time, expertise, equipment, chemicals (pigments and dyes), etc to establish de facto standards, and we were all within the ‘normal’ range of humans, we would not all see the same results.

c) i do bird photography for a hobby. some of them have up to 12 types of cones. i would love to see the world the way they do. they must see colors we can’t even imagine, and i suspect that the camouflage hunters use to shoot them, or photographers use to shoot them (with a camera), don’t make much of a difference to the birds; they see them as altogether different colors than the natural colors of the soil and flora around them. fantastic stuff to think about.

keep up the good work.

actually, i just tried to find my source for the 12 types of cones for some birds, and can’t, so i retract that comment. most i could find was 4, which is still pretty cool. sorry about that!

Colour is too complex a discussion to ever get over in a forum or comments discussion. The further difficulty you only touched on is that the actual cones of humans are for blue, greenish yellow and orangish red, not red, green, blue as everyone thinks, which is actually quite different from a camera sensor’s sensitivity. Then there is the belief that anything can be corrected in post, which it can’t, because cameras have very different colour sensitivities (Sony and Canon colour is a real thing). I find a didymium filter the best demonstration to people, as it removes yellow and intensifies red in most things but not all, and the filter itself changes colour (purple, green and blue) depending on the lighting. Also, if you take a picture of a scene with and without it, no amount of post processing will get the two to look the same. Perhaps you should do an example post with this.

There are two relevant points here. For discussions in which accuracy of terminology is important, I think it’s a mistake to divide the cones into red, green and blue. On that point I agree with you. I further think it’s a mistake to assign them colors at all. I favor S, M, and L, or rho, gamma, and beta for identifiers.

The second is the spectral sensitivities of the camera CFA and sensor. It is not necessary for accurate color that the spectra of the camera responses be the same as that of the cones, only that they be a linear transform of those. That’s the Luther-Ives criterion. Sadly, no commercial cameras even do that.
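As a rough illustration of that criterion, the sketch below fits the best 3x3 matrix taking a camera's three spectral sensitivities to the cone sensitivities and reports what is left over. All the curves are invented five-band numbers, not measured data, and the helper names are mine. A camera that meets the criterion leaves essentially zero residual; one that doesn't leaves a residual no 3x3 matrix can remove.

```python
import math


def matmul(a, b):
    """Plain matrix product of two lists-of-rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def luther_ives_residual(cones, camera):
    """Least-squares fit cones ~= M . camera; return the size of the misfit."""
    cam_t = transpose(camera)
    m = matmul(matmul(cones, cam_t), inverse3(matmul(camera, cam_t)))
    fitted = matmul(m, camera)
    return math.sqrt(sum(
        (c - f) ** 2
        for cone_row, fit_row in zip(cones, fitted)
        for c, f in zip(cone_row, fit_row)
    ))


# Invented cone sensitivities in five wavelength bands (short to long).
CONES = [
    [0.8, 0.5, 0.1, 0.0, 0.0],
    [0.1, 0.5, 0.9, 0.5, 0.1],
    [0.0, 0.2, 0.7, 0.9, 0.4],
]

# A camera built as an exact linear mix of the cones (meets the criterion).
MIX = [[1.0, 0.2, 0.0], [0.1, 1.0, 0.3], [0.0, 0.2, 1.0]]
compliant_camera = matmul(MIX, CONES)

# A camera with unrelated, invented sensitivities (does not meet it).
other_camera = [
    [0.9, 0.4, 0.0, 0.0, 0.0],
    [0.0, 0.3, 0.9, 0.3, 0.0],
    [0.0, 0.0, 0.1, 0.6, 0.9],
]

residual_compliant = luther_ives_residual(CONES, compliant_camera)
residual_other = luther_ives_residual(CONES, other_camera)
print("compliant camera residual:", residual_compliant)
print("other camera residual:    ", residual_other)
```

The nonzero residual for the second camera is the part of the color error that survives any linear correction applied after capture.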

This is a pretty artificial demonstration, and not one that I find relevant to real-world camera spectral responses. If you want to demonstrate that you can’t fix all color errors in post, it is sufficient to point to the possibility that camera A resolves two different spectra as one set of raw responses, and camera B resolves them as different, while the opposite situation can exist for some other pair of spectra.