This post is part of a series about some experiments I’m doing combining space and time in slit scan photographs. The series starts here.

When you take a set of 10,000 5200×3500 pixel images and run slit scan simulations against them, there are a lot of options:

- What image to start with.

- Which way to run the simulated scan.

- How fast to move the simulated slit.

- How diffuse the edges of the slit should be.

There are way more than 10,000 ways to process all those images, and probably several thousand that yield meaningfully different results. It takes between 20 minutes and two hours to process an image. That means that opportunities for experimentation are limited. I’ve tried reducing the size of the images, finding something that works, then going back to the big images, and trying to get something similar. I don’t like that workflow, and it’s pretty hit and miss.

What to do?

Heck, this is photography! You already know the answer: pour money on it.

I called up MathWorks and ordered the Parallel Computing Toolbox for Matlab. The price wasn’t actually all that high. I spent about five minutes scanning the documentation, and found a tool that will work for me. It’s a construction similar to a for loop, but one that allows parallel execution. It’s called parfor. I was careful to write the image-processing loop so that no iteration depended on the result of any other iteration. I was also careful to create any class instances inside the loop, so that each parallel iteration would get its own class instance.

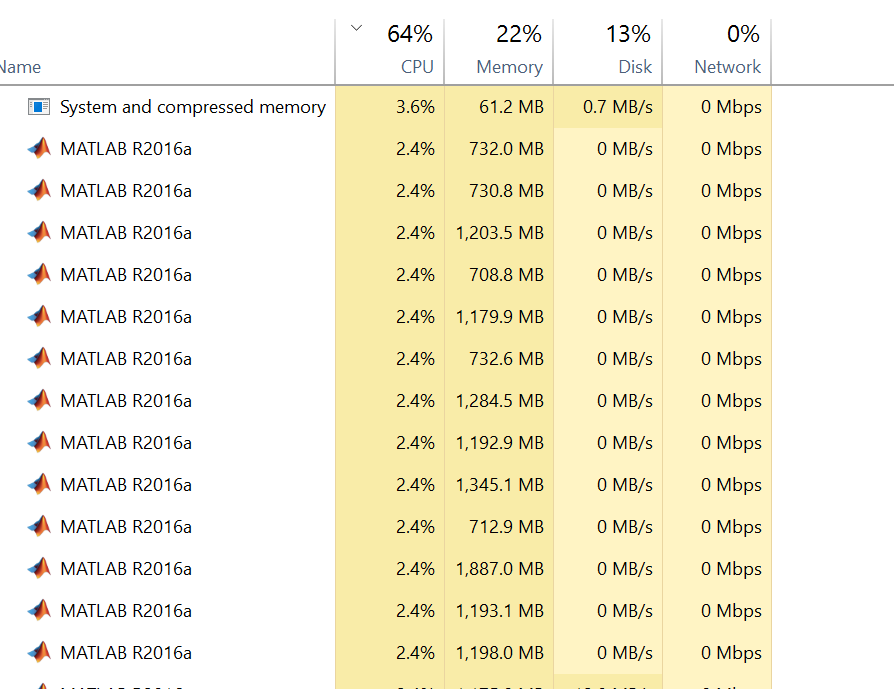
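For readers who haven’t used parfor, here is a minimal sketch of the pattern described above. It is not my production code: the tiny synthetic frames, the `speeds` values, and the column-to-frame mapping are all made up for illustration, and a plain matrix stands in for the class instances I create inside the real loop. The point is only that each iteration builds its own working data and writes to its own slot of the output.

```matlab
% Illustrative sizes only; the real series is 10,000 frames of 5200x3500.
nFrames = 64; h = 48; w = 64;
speeds  = [0.5 1 2 4];             % hypothetical slit speeds, in columns per frame
nJobs   = numel(speeds);
results = cell(1, nJobs);          % one output slot per iteration

parfor k = 1:nJobs
    speed = speeds(k);             % this iteration's parameter
    out   = zeros(h, w);           % created inside the loop, so private to this iteration
    for col = 1:w
        % Frame the simulated slit is looking at when it reaches this column.
        f = min(nFrames, 1 + floor((col - 1) / speed));
        frame = f * ones(h, w);    % stand-in for reading frame f from disk
        out(:, col) = frame(:, col);
    end
    results{k} = out;              % no iteration reads another iteration's result
end
```

If the Parallel Computing Toolbox isn’t installed, Matlab runs a parfor loop serially, so a sketch like this can be checked on any machine before turning the workers loose.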

When I ran it, I got a bunch of Matlab processes:

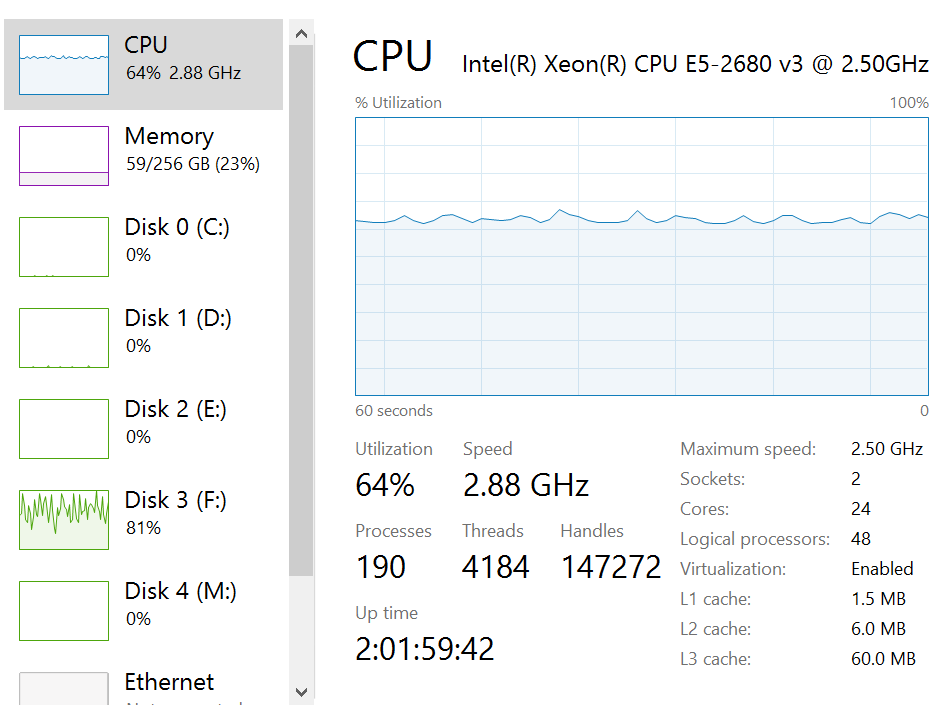

There’s a place in the Matlab console for tuning the parallel execution. About the only thing I needed to do was pick the maximum number of parallel workers: enough that the combined set of processes stayed just short of being disk-limited, while leaving me enough CPU capacity to do my usual work while Matlab did its thing. I picked 24 workers, which gave me resource utilization like this:

Then I proceeded to make images like these:

How much of a speed improvement did the Parallel Computing Toolbox provide, Jim? Do you use the GPU at all?

Running 24 workers, it’s about 24 times as fast. I’m not using the GPU, just parfor loops.

Very cool. Been thinking of speeding up Lightroom by putting images on a 10GbE server and operating on subsets of them on several different computers.

10,000 JPEG images could fit in RAM if you are willing to throw money at it.

Yes. I only (only?) have 256GB of RAM.

And 197 GB free for a RAM disk 🙂 I’ve considered using a RAM disk for Lightroom, but it seemed dangerous. For your read-only situation, though, it’d be perfect. Anyway, I assume your disk speed limit is on an SSD?

I’m considering a RAM disk, but I’d rather figure out a way to get Win 10 to use a bigger disk cache. I am also looking into a striped SSD array. I’m using spinning rust now.

I recently invested in a couple of these:

4TB Samsung PM863a Enterprise SSD (refurb, less than 5TBW) w/ 3 Year Warranty & $1000 + free shipping

https://slickdeals.net/share/iphone_app/t/9241219


Did you ever try a RAM disk? I have 128 GB of RAM now, and I’m thinking of preloading CinemaDNG files (an individual file for every video frame). I’ve done a few minutes of googling, and SoftPerfect seems to be actively developed and is recommended in a number of comments on the web. Found anything better?

I don’t have enough RAM to fit a whole series of images in RAM. I have 256GB, which seems like a lot, but is not enough for that. However, by spawning 24 parallel Matlab workers and assigning each to a core, I can get the synthetic slit scan rendering to the point where the CPU load is about 70%, which is as close to CPU-bound as I’d like to be and still be able to use the computer for other things.

Also, you could speed up your search by working on smaller JPEGs, then re-rendering your favorites at full resolution.

I tried that before, and I may return to it if I can automate translating the parameters used on the small images into the equivalent ones for the big images. I’m sure it can be (approximately) done; I just need to buckle down and write the code.