I can’t measure sub-pixel camera movement at the sensor, so I have to look for indirect ways to get at it. Are there ways to glean such information from photographs of the ISO 12233 target? I turned to a simulation study to find out.

Using Matlab, I created a nearly ideal camera with a resolution of 1500×1000 pixels, which is very close to the size of the target, measured in pixels, in the photographs made with the Nikon D800E and Sony a7R in the last post. This camera defies the laws of physics, since it exhibits zero photon noise and its lens is unaffected by diffraction. In other respects, it is merely perfect: there is no pixel response non-uniformity, and read noise exists only at the resolution of double-precision floating-point numbers. The camera has an RGGB Bayer color filter array, which is the same choice as the Nikon D800E. It digitizes images with 64-bit floating-point resolution, and its native color space is Adobe RGB (1998). Its lens has no distortion and no aberrations of any kind. Sounds like a great camera, huh? You can't build such an instrument, but through the magic of software, it's easier to code up a camera like this than one that suffers from the maladies of real photographic devices.
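The Bayer sampling step of a noiseless camera like this can be sketched in a few lines. This is a minimal Python/NumPy sketch, not the Matlab code used for the study; the function name `rggb_mosaic` is hypothetical, and it assumes the input is a linear RGB image stored as a float64 array.

```python
import numpy as np

def rggb_mosaic(rgb):
    """Sample a linear RGB image (H x W x 3, float64) through an RGGB Bayer CFA.

    Each photosite keeps only the channel its color filter passes.
    No noise of any kind is added, as in the idealized simulated camera.
    """
    h, w, _ = rgb.shape
    raw = np.empty((h, w), dtype=np.float64)
    raw[0::2, 0::2] = rgb[0::2, 0::2, 0]  # R: even rows, even columns
    raw[0::2, 1::2] = rgb[0::2, 1::2, 1]  # G: even rows, odd columns
    raw[1::2, 0::2] = rgb[1::2, 0::2, 1]  # G: odd rows, even columns
    raw[1::2, 1::2] = rgb[1::2, 1::2, 2]  # B: odd rows, odd columns
    return raw
```

Feeding it an image whose R, G, and B planes are constant at 1, 2, and 3 yields a raw frame that repeats the 2×2 tile `[[1, 2], [2, 3]]`.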

Adobe Lightroom and many other raw processors use proprietary demosaicing algorithms. I used the most bog-standard one: bilinear interpolation. The advantage of that method is that it is simple enough for anyone who writes demosaicing code to reproduce. The disadvantage is that it doesn't do as good a job as some of the "special sauce" algorithms. After demosaicing, I converted the images to a 16-bit, gamma 2.2 integer representation to write the output TIFF files.
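Here is a minimal Python/NumPy sketch of bilinear demosaicing for an RGGB mosaic, followed by the gamma 2.2, 16-bit encoding. It is an illustration under stated assumptions, not the study's Matlab code: the names `bilinear_demosaic`, `encode_gamma22_uint16`, and `_filter3x3` are hypothetical, input values are assumed to be linear and scaled to 0..1, and the image is assumed to be at least 2×2. It computes bilinear interpolation as a normalized convolution, which matches the classic kernels in the interior and averages whatever same-color neighbors exist at the borders. Writing the TIFF file itself is left out.

```python
import numpy as np

def _filter3x3(img, k):
    """Correlate a 2-D array with a 3x3 kernel, zero-padding the border."""
    p = np.pad(img, 1)  # default mode is constant zero padding
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += k[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out

def bilinear_demosaic(raw):
    """Bilinear demosaic of an RGGB raw frame (H x W) into linear RGB (H x W x 3)."""
    h, w = raw.shape
    masks = np.zeros((h, w, 3))
    masks[0::2, 0::2, 0] = 1.0  # R sites
    masks[0::2, 1::2, 1] = 1.0  # G sites on R rows
    masks[1::2, 0::2, 1] = 1.0  # G sites on B rows
    masks[1::2, 1::2, 2] = 1.0  # B sites
    k_g = np.array([[0, 1, 0], [1, 4, 1], [0, 1, 0]], dtype=float)
    k_rb = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]], dtype=float)
    rgb = np.empty((h, w, 3))
    for c, k in ((0, k_rb), (1, k_g), (2, k_rb)):
        # Weighted sum of the known samples, divided by the sum of the weights used.
        num = _filter3x3(raw * masks[..., c], k)
        den = _filter3x3(masks[..., c], k)
        rgb[..., c] = num / den
    return rgb

def encode_gamma22_uint16(rgb_linear):
    """Clip linear values to 0..1, apply a 1/2.2 power, and quantize to 16 bits."""
    clipped = np.clip(rgb_linear, 0.0, 1.0)
    return np.round(65535.0 * clipped ** (1.0 / 2.2)).astype(np.uint16)
```

A uniform gray raw frame comes back as the same gray in all three channels, which is a quick sanity check that the normalization is right.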

I created a rasterized version of the encapsulated PostScript (vector) target at about 10000×6700 pixels, so that it would have roughly 45 times as many pixels as the camera image (about 6.7 times the linear resolution).
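For reference, here is the oversampling implied by the dimensions above. It also shows why a 1% fill factor outruns the target: a sensel with 1% fill is one tenth of the pixel pitch on a side, or about 0.67 pixels of the rasterized target.

$$
\frac{10000 \times 6700}{1500 \times 1000} = \frac{6.7 \times 10^{7}}{1.5 \times 10^{6}} \approx 44.7,
\qquad
\frac{10000}{1500} \approx 6.67,
\qquad
\sqrt{0.01} \times 6.67 \approx 0.67
$$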

The first series of tests that I ran looked at the effect of various pixel fill factors on resolution, aliasing, and false color. The pixel fill factor is the ratio of the light-sensitive area of a sensor pixel (aka a sensel) to the total area of the pixel. A perfect classical point sampler has a fill factor of zero. Modern cameras with microlenses approach fill factors of 100%. People don't talk about fill factors of over 100%, but in a simulated camera, it's easy to code one up. A camera with a fill factor of 400% would gather light from an area centered on the sensel that is four times the area of the pixel. This behaves like an anti-aliasing filter such as the one used on the Nikon D800 and the Sony a7, but not on the Nikon D800E and the Sony a7R.
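One way to model fill factors below and above 100% is to average the oversampled target over a square aperture centered on each pixel. The sketch below does that with a summed-area table. It rests on several assumptions not stated in the text: the aperture is square with uniform sensitivity, the oversampling factor `scale` is an integer (the real target is about 6.7×), and the aperture side is rounded to whole target pixels. The function name `sample_with_fill_factor` is hypothetical, and a real AA filter's point-spread function is not a box, so treat this as an approximation rather than the study's model.

```python
import numpy as np

def sample_with_fill_factor(target, scale, fill):
    """Simulate sensels with a given fill factor by box-averaging an oversampled target.

    target: 2-D float array with `scale` target pixels per camera pixel on each axis
            (an integer here, which is a simplifying assumption).
    fill:   fill factor as a fraction of pixel area (0.01 = 1%, 4.0 = 400%).
    Each sensel averages a square window of side sqrt(fill) * scale target pixels,
    centered on its pixel and rounded to a whole number of target pixels (minimum 1,
    i.e. a point sample). Windows are clipped at the target's edges.
    """
    H, W = target.shape
    h, w = H // scale, W // scale
    side = max(1, int(round(np.sqrt(fill) * scale)))

    # Summed-area table with a leading zero row/column: sat[i, j] == target[:i, :j].sum()
    sat = np.zeros((H + 1, W + 1))
    sat[1:, 1:] = target.cumsum(axis=0).cumsum(axis=1)

    def window_edges(n_pixels, n_target):
        # Pixel centers in target coordinates, then half-open window [start, start + side).
        centers = np.arange(n_pixels) * scale + scale / 2
        start = np.floor(centers - side / 2).astype(int)
        return np.clip(start, 0, n_target), np.clip(start + side, 0, n_target)

    y0, y1 = window_edges(h, H)
    x0, x1 = window_edges(w, W)
    y0, y1 = y0[:, None], y1[:, None]  # column vectors broadcast against row vectors
    x0, x1 = x0[None, :], x1[None, :]
    total = sat[y1, x1] - sat[y0, x1] - sat[y1, x0] + sat[y0, x0]
    return total / ((y1 - y0) * (x1 - x0))
```

With `fill=1.0` each sensel averages exactly its own block of target pixels; with `fill=0.01` it degenerates to sampling the single target pixel nearest the center; with `fill=4.0` each window is twice the pixel pitch on a side and overlaps its neighbors, which is what blurs detail and suppresses aliasing.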

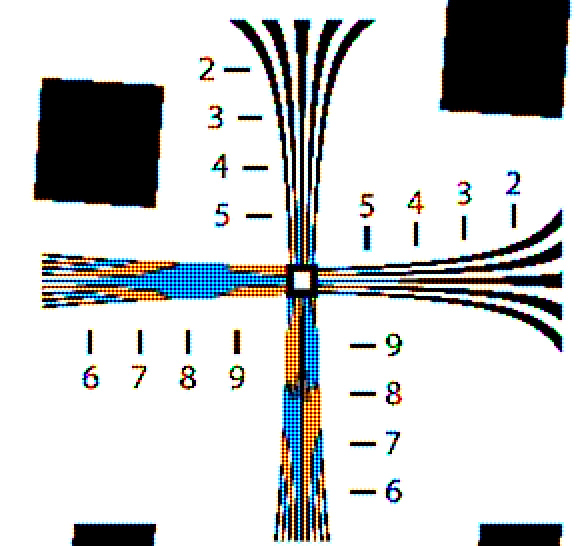

Here's the portion of the target that we looked at with real cameras in the previous post (presented at 2x magnification, just like the real pictures there), as seen by my simulated camera with a fill factor of 1%. That's pushing things a bit, since a sensel that small samples at a finer scale than the pixels of the rasterized target:

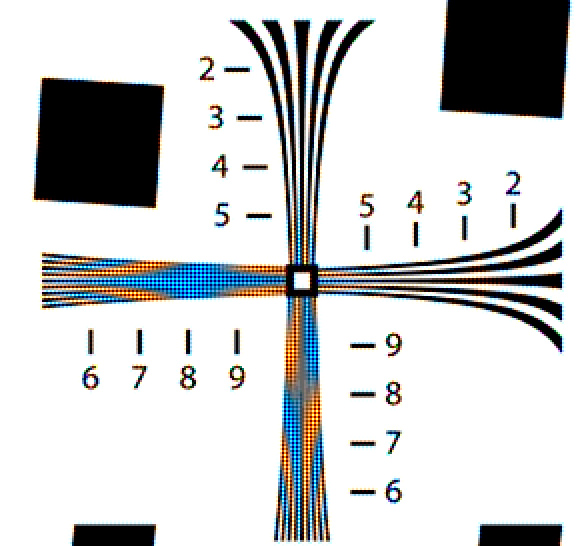

Going to 100% fill costs us some resolution and contrast, but calms down the false color a bit and smooths over the stair-stepping:

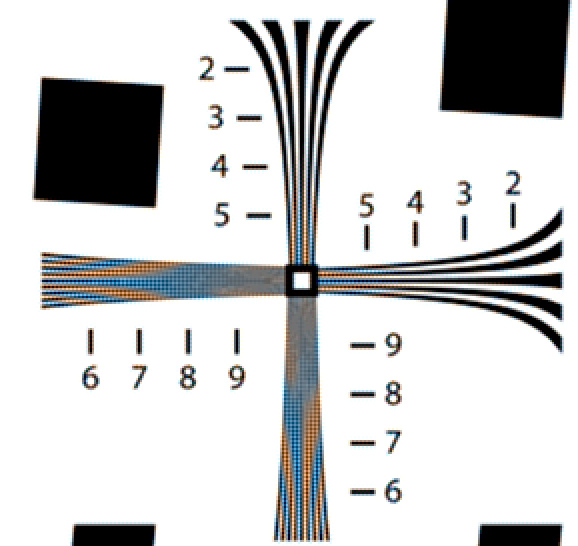

At 400% fill – a reasonable anti-aliasing filter – false color is reduced to about the level of the sharpest real-camera pictures in the preceding post (which were made with cameras with no AA filter):

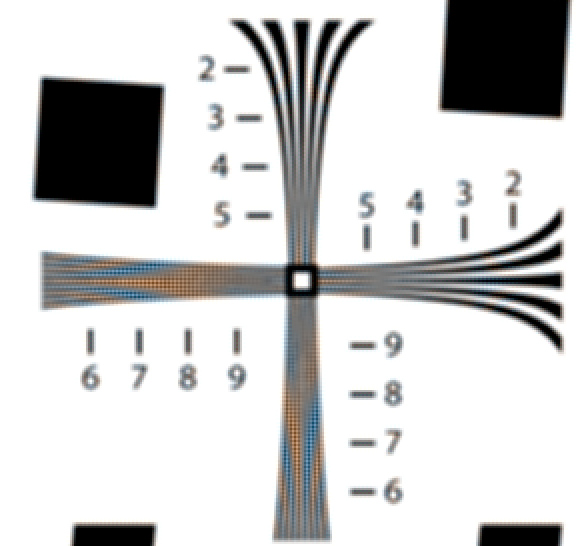

Even at 1600% fill, which is more AA than a real camera has and enough to cause some serious blurriness, there's still some false color.

Next, we’ll look at other things that can affect ISO 12233 images.
