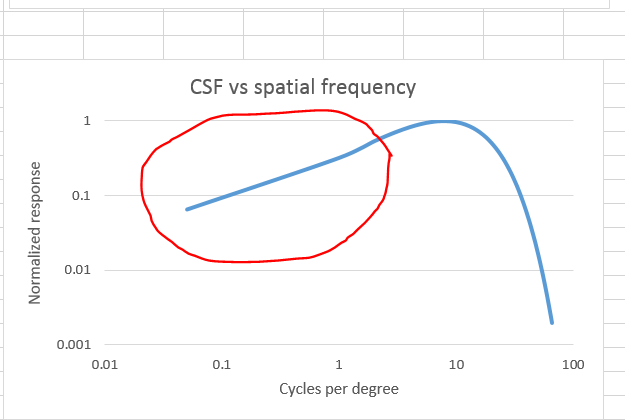

Let’s look first at the drop in luminance contrast sensitivity at low spatial frequencies.

That drop is why dodging and burning works. The printer (in the old days) or the editor (now) introduces slow changes in luminance to allow higher local contrast, or to draw attention toward or away from image elements. Done right, the viewer never notices the changes in luminance. Done really right, the photographer who made the changes can look at the edited image and not see what she did.
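To make “slow changes in luminance” concrete, here is a minimal sketch of a dodge. It assumes a list-of-lists image of linear luminance values. The Gaussian-shaped gain and the name `dodge_burn` are illustrative assumptions. This is not how any particular editor implements its dodge and burn tools.

```python
import math

def dodge_burn(lum, cx, cy, sigma, strength):
    """Apply a smooth multiplicative gain to a 2-D luminance image.

    lum      -- list of rows of linear luminance values
    cx, cy   -- center of the adjustment, in pixels
    sigma    -- spread of the adjustment in pixels; a large sigma keeps
                the change at low spatial frequencies
    strength -- +0.2 brightens the center by 20% (dodge),
                -0.2 darkens it by 20% (burn)
    """
    out = []
    for y, row in enumerate(lum):
        new_row = []
        for x, v in enumerate(row):
            d2 = (x - cx) ** 2 + (y - cy) ** 2
            # Gain falls off smoothly from (1 + strength) at the center toward 1.
            gain = 1.0 + strength * math.exp(-d2 / (2.0 * sigma ** 2))
            new_row.append(v * gain)
        out.append(new_row)
    return out

flat = [[0.5] * 101 for _ in range(101)]
dodged = dodge_burn(flat, 50, 50, 30.0, 0.2)
```

Take `sigma` as 30 pixels. The center ends up 20% brighter. Even so, adjacent pixels never differ by more than about half a percent. That is the kind of gradual change the eye is poor at detecting.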

I suppose, given enough artfulness on the part of the image editor, that she could pull off this legerdemain with no help from the eye of the beholder, but the fact that anyone looking at the image has reduced sensitivity to the slow luminance changes sure helps.

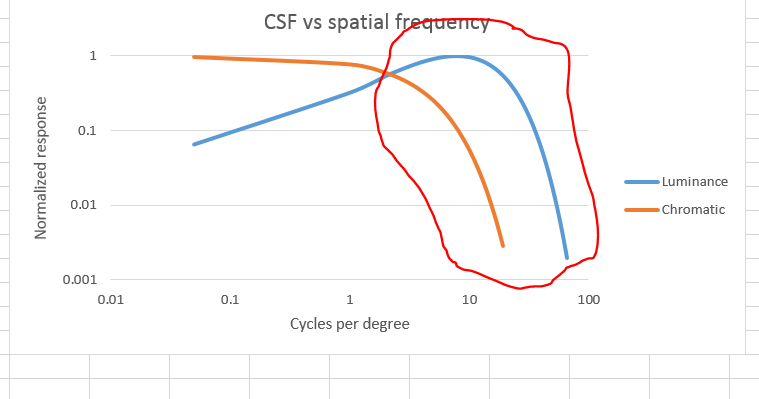

Next, let’s examine the consequences of the rolloff in chromatic contrast sensitivity at medium spatial frequencies, and the similar rolloff in luminance contrast sensitivity a little less than two octaves higher.
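Some readers think in ratios rather than octaves. An octave is a doubling of spatial frequency. So two rolloffs $n$ octaves apart differ in frequency by a factor of $2^n$. Here $f_{\text{lum}}$ and $f_{\text{chrom}}$ are simply labels for the two rolloff frequencies:

$$
\frac{f_{\text{lum}}}{f_{\text{chrom}}} = 2^{n}, \qquad n \lesssim 2 \;\Rightarrow\; \frac{f_{\text{lum}}}{f_{\text{chrom}}} \lesssim 4
$$

“A little less than two octaves” therefore puts the luminance rolloff at somewhat under four times the frequency of the chromatic rolloff.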

One implication of this disparity is that small-scale output sharpening need not apply to chrominance. Indeed, sharpening chrominance beyond the viewer’s ability to see the results is dangerous if the sharpening algorithm is prone to generating artifacts. There is a counterargument, though: those artifacts won’t be seen by the viewer unless they have luminance components.
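The usual artifacts are overshoot and undershoot halos at edges. Below is a minimal 1-D unsharp-mask sketch that shows them appearing at a step edge. It assumes a simple [1, 2, 1]/4 blur. The kernel and the name `unsharp_mask_1d` are illustrative assumptions, not taken from any particular sharpening tool.

```python
def unsharp_mask_1d(signal, amount=1.0):
    """Sharpen a 1-D signal: out = s + amount * (s - blur(s)).

    The blur is a 3-tap [1, 2, 1] / 4 kernel with edge replication.
    """
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        blurred = (left + 2 * signal[i] + right) / 4.0
        out.append(signal[i] + amount * (signal[i] - blurred))
    return out

edge = [0.2] * 5 + [0.8] * 5   # a step from dark to light
sharp = unsharp_mask_1d(edge)  # dips below 0.2 and peaks above 0.8 at the step
```

Run on a chrominance plane, the same overshoot becomes a color fringe along the edge.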

In the pre-press world in the days before desktop color, it was quite common to perform sharpening in CIELab by sharpening only the L* plane. Some even took the image to Lab just so they could perform the sharpening that way. Twenty years ago, I wrote an SPIE paper, Efficient chromaticity-preserving sharpening of RGB images, on how to get similar results without the computational and image-quality costs of the round trip to Lab.
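You can get luminance-only sharpening without leaving RGB. Sharpen a luminance channel computed from linear RGB. Then scale each pixel’s R, G, and B by the ratio of new to old luminance. The channel ratios stay unchanged, so the chromaticity does too. The sketch below assumes linear BT.709 RGB and applies a [1, 2, 1]/4 unsharp mask to a single row of pixels. It illustrates the general idea only. It is not a reproduction of the method in the paper.

```python
# Luminance weights for linear RGB with BT.709 primaries.
BT709 = (0.2126, 0.7152, 0.0722)

def luminance(rgb):
    return sum(w * c for w, c in zip(BT709, rgb))

def sharpen_row_preserving_chromaticity(row, amount=1.0):
    """row: list of linear (R, G, B) tuples. Returns a new list of tuples.

    Sharpens luminance with an unsharp mask, then rescales each pixel so
    that R:G:B ratios (and hence chromaticity) are unchanged.
    """
    y = [luminance(p) for p in row]
    n = len(y)
    out = []
    for i, p in enumerate(row):
        blurred = (y[max(i - 1, 0)] + 2 * y[i] + y[min(i + 1, n - 1)]) / 4.0
        # Negative luminance is not physical, so clamp the sharpened value at 0.
        y_sharp = max(y[i] + amount * (y[i] - blurred), 0.0)
        scale = y_sharp / y[i] if y[i] > 0 else 0.0
        out.append(tuple(c * scale for c in p))
    return out
```

Where luminance overshoots, the scaling can push a channel above 1.0. A practical implementation would need a policy for that case.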

Some people sharpen today by creating a duplicate layer, sharpening it, and using a luminance blend mode. I think it likely that some of the secret sauce in some proprietary output sharpening software involves luminance sharpening.
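The W3C Compositing and Blending specification defines the Luminosity blend mode, following PDF. The mode takes the luminosity of the top layer and the hue and saturation of the layer below. Here is a sketch of that published definition. It assumes values in [0, 1] and treats the sharpened duplicate as the top layer. Whether Photoshop’s own implementation matches this formula exactly is an assumption.

```python
def lum(c):
    """Luminosity as defined for the non-separable blend modes."""
    r, g, b = c
    return 0.3 * r + 0.59 * g + 0.11 * b

def clip_color(c):
    """Pull out-of-range channels back into [0, 1] while keeping lum(c)."""
    l = lum(c)
    n, x = min(c), max(c)
    if n < 0:
        c = tuple(l + (v - l) * l / (l - n) for v in c)
    if x > 1:
        c = tuple(l + (v - l) * (1 - l) / (x - l) for v in c)
    return c

def set_lum(c, l):
    """Shift all channels equally so that lum(c) becomes l, then clip."""
    d = l - lum(c)
    return clip_color(tuple(v + d for v in c))

def luminosity_blend(base, top):
    """Per pixel: luminosity from `top` (sharpened copy), color from `base`."""
    return [set_lum(b, lum(t)) for b, t in zip(base, top)]
```

`set_lum` adds the same offset to all three channels. So the differences between channels stay the same unless `clip_color` has to bring an out-of-range channel back into [0, 1].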

The difference between the lowpass cutoff frequencies of luminance and chromatic contrast sensitivity has an outgrowth that applies to file formats: there is no need to sample the chrominance layers as finely as the luminance layer. Kodak’s PhotoCD format sampled the two chrominance layers half as often as the luminance layer in both the x and y directions. That produced chrominance layers a quarter the size they would otherwise have been. It also cut the total image file size in half, since one full-size plane plus two quarter-size planes adds up to 1.5 planes’ worth of data instead of 3.
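A minimal sketch of the subsampling and the size arithmetic follows. It assumes a plain 2x2 box average and a hypothetical 3000 x 2000 image. PhotoCD’s actual filtering and its hierarchical encoding are not reproduced here.

```python
def subsample_2x2(plane):
    """Average each 2x2 block of a chrominance plane (a list of rows).

    Assumes the plane has even width and height.
    """
    return [
        [(plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4.0
         for x in range(0, len(plane[0]), 2)]
        for y in range(0, len(plane), 2)
    ]

def storage_ratio(width, height):
    """Samples stored with 2x2 chroma subsampling vs. three full planes."""
    full = 3 * width * height                                 # Y, C1, C2 at full size
    sub = width * height + 2 * (width // 2) * (height // 2)   # Y full, C1/C2 quartered
    return sub / full

print(storage_ratio(3000, 2000))  # 0.5
```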

Unfortunately, we photographers don’t use luminance/chrominance color spaces much. Photoshop supports CIELab, but many of its tools don’t work in Lab. Color space conversion is in general lossy, so converting files to any old luminance/chrominance space before writing them out to disk has to be done judiciously. It’s easy to define a luminance/chrominance space based on any particular RGB space. PhotoYCC, the color space of PhotoCD, was based on what we now call BT.709, the HDTV color space. Doing that would eliminate changes in primaries or gamma as a source of loss. Images may be repeatedly decompressed and compressed. To prevent degradation when that happens, there would have to be a standard way to encode the four pixels of the RGB form into the single pixel of each chrominance plane, and to decode them back. Even so, there would still be opportunity for values to “walk” with successive decompress/edit/compress cycles.
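The sketch below illustrates two of these points. The first half builds a luminance/chrominance space on top of an RGB space using the BT.709 Y'CbCr equations. These are not PhotoYCC’s own scaling, which differs. In floating point the round trip is exact to rounding error, so any loss would come from quantization rather than from the transform itself. The second half uses a 1-D simplification, with pairs of pixels standing in for 2x2 blocks. Its helpers `encode`, `decode_replicate`, and `decode_linear` are hypothetical. They show why the encode/decode pair needs to be standardized. Averaging paired with replication is stable across cycles. Averaging paired with an interpolating decoder makes values walk on every cycle, even with no editing.

```python
# BT.709 luma coefficients; KG follows from requiring the weights to sum to 1.
KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB

def rgb_to_ycbcr(r, g, b):
    """Gamma-encoded R'G'B' in [0, 1] -> (Y', Cb, Cr), Cb and Cr in [-0.5, 0.5]."""
    y = KR * r + KG * g + KB * b
    cb = (b - y) / (2.0 * (1.0 - KB))
    cr = (r - y) / (2.0 * (1.0 - KR))
    return y, cb, cr

def ycbcr_to_rgb(y, cb, cr):
    """Exact algebraic inverse of rgb_to_ycbcr."""
    r = y + 2.0 * (1.0 - KR) * cr
    b = y + 2.0 * (1.0 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    return r, g, b

def encode(chroma):
    """Average each pair of chroma samples (1-D stand-in for a 2x2 block)."""
    return [(chroma[i] + chroma[i + 1]) / 2.0 for i in range(0, len(chroma), 2)]

def decode_replicate(sub):
    """Decoder matched to encode(): repeat each sample twice."""
    return [v for v in sub for _ in range(2)]

def decode_linear(sub):
    """A different, plausible decoder: linear interpolation between samples."""
    n = len(sub)
    out = []
    for i, v in enumerate(sub):
        prev, nxt = sub[max(i - 1, 0)], sub[min(i + 1, n - 1)]
        out += [0.75 * v + 0.25 * prev, 0.75 * v + 0.25 * nxt]
    return out
```

Re-encoding `decode_replicate(s)` gives back `s` unchanged. Each `encode(decode_linear(s))` cycle behaves differently. It replaces every sample with `0.75 * s[i] + 0.125 * (s[i-1] + s[i+1])`, which is a mild blur that accumulates from cycle to cycle.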

Disk space is cheap, and photographers are wary of damage to their “originals” (look at the controversies over lossless raw compression). So I don’t see this happening for file formats now used for lossless storage, such as TIFF and PSD. It makes a lot of sense for lossy encodings. There was provision for something akin to this kind of chrominance subsampling in the original JPEG standard (in the form of different DCT thresholds for the chrominance planes), but I don’t know if it is used today.

Next, and last in this series on luminance/chrominance spatial effects, the implications for image capture.

Slight omission, Jim . .

to see the results is dangerous if the sharpening algorithm is prone to generating artifacts, although there [is] a counter argument that those artifacts won’t be seen by the viewer unless they have luminance components.

Not keen on the use of “she” for third person refs 🙂

Ted