This is one in a series of posts on the Sony alpha 7 R Mark IV (aka a7RIV). You should be able to find all the posts about that camera in the Category List on the right sidebar, below the Articles widget. There’s a drop-down menu there that you can use to get to all the posts in this series; just look for “A7RIV”.

I have read reports that you need an unusually heavy tripod and exceptional technique to use the a7RIV pixel shift without encountering artifacts. Since I had three 16-shot series from yesterday’s testing, I decided to look for inconsistencies that would indicate camera motion. For the captures, I was using a solid set of RRS legs and an Arca Swiss C1. The tripod was not on the best of footing: a gravel-covered chipseal driveway. There was little wind, but it wasn’t dead calm.

Here’s the scene:

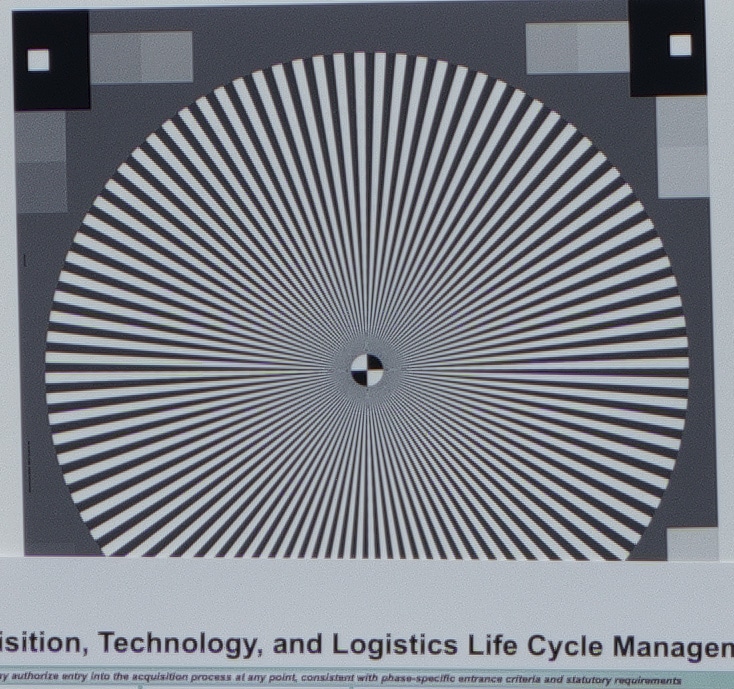

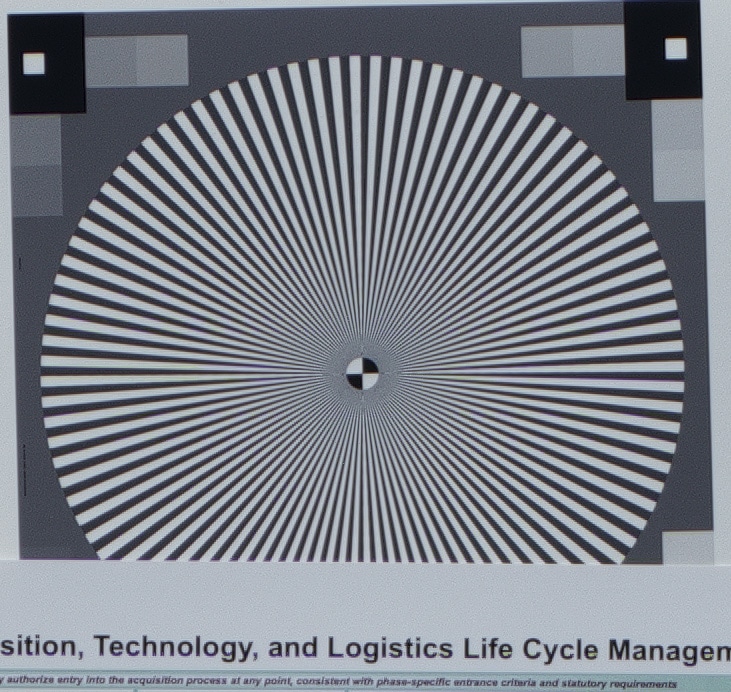

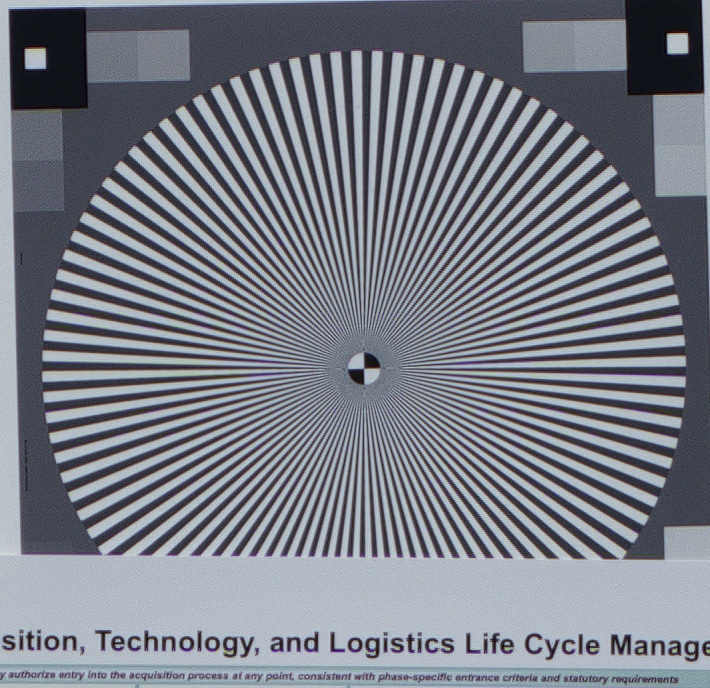

Here are tight, enlarged crops of the Siemens Star for each series, all developed in Sony Imaging Edge with Edge Noise reduction = 0.5:

Camera motion should cause color shifts. There are some of those, but they are fairly mild. It may be that the color shifts are due to imprecise positioning of the sensor by the camera.
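To see why camera motion shows up as color shifts, here is a minimal Python sketch of a 4-shot, one-pixel-step pixel-shift cycle. It assumes an RGGB Bayer layout and the shot order in the hypothetical `OFFSETS` list; it is not Sony's documented sequence or reconstruction. With a still camera, every scene point is sampled once through red, twice through green, and once through blue. If the camera drifts during one shot, that shot's sample comes from a slightly different scene point, so a neutral gray edge picks up a tint.

```python
from collections import Counter

def bayer_color(row, col):
    """Filter color at photosite (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

# Hypothetical one-pixel sensor offsets (dy, dx) for the four shots.
OFFSETS = [(0, 0), (0, 1), (1, 1), (1, 0)]

def colors_seen(row, col, offsets=OFFSETS):
    """Count the filter colors that sample scene point (row, col) across shots.

    With the sensor moved by (dy, dx), that scene point falls on
    photosite (row - dy, col - dx).
    """
    return Counter(bayer_color(row - dy, col - dx) for dy, dx in offsets)

def scene(x, y):
    """Neutral gray test scene: a soft vertical edge, equal in R, G and B."""
    return min(max(x / 4.0, 0.0), 1.0)

def reconstruct(px, py, drift):
    """Merge the four shots into one RGB value at pixel (px, py).

    drift[i] is the unintended (dy, dx) camera displacement, in pixels,
    during shot i; the photosite then records a slightly displaced scene point.
    """
    sums = {"R": 0.0, "G": 0.0, "B": 0.0}
    for (dy, dx), (ey, ex) in zip(OFFSETS, drift):
        color = bayer_color(py - dy, px - dx)
        sums[color] += scene(px + ex, py + ey)
    # Two of the four shots see green, so average those.
    return sums["R"], sums["G"] / 2, sums["B"]

still = reconstruct(2, 0, [(0.0, 0.0)] * 4)
moved = reconstruct(2, 0, [(0.0, 0.0), (0.0, 0.0), (0.0, 0.4), (0.0, 0.0)])
print(still)  # neutral: all three channels equal
print(moved)  # blue channel differs from red and green
```

A sensor that lands slightly off its intended position produces the same kind of misregistration as camera drift, which is why mild color shifts alone can't tell the two causes apart.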

FredD says

Yes. Other than subject motion, of course, imprecise sensor positioning by the mechanism and external vibration would probably be the two main culprits in any reported pixel-shift problems. I don't have a background in mechanical or electromechanical design, or any past experience with pixel shift, so I won't speculate on the relative influence of each when your camera is locked down on a good tripod.

Is the sensor movement controlled by voice-coil or piezo actuators? Consumer-camera pixel shift has been around a while, and stacking for astrophotography and satellite-based remote sensing have been around much longer. One would think someone has mathematically modeled them, and the effects of positional imperfections, in a published paper. (I can't access the literature databases from home, and in any event my background is ecology and evolutionary biology, so I wouldn't be very efficient at tracking down any such papers.)

For pixel shift, what happens if the inter-shot time within a series is lengthened? What if you apply pixel shift to a point-source subject? What if you look at the four color planes individually, eliminating most of the effect of lens lateral chromatic aberration? What happens if you stack multiple (say, two, four, eight, or sixteen) full 16-shot series in post? Would one see a statistical trend, as with the S/N ratio of non-pixel-shift frames when stacking for astro?
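The last question has a textbook answer for one idealized case. This minimal sketch assumes each full 16-shot series is the true image plus independent, zero-mean Gaussian noise, and simply averages N such series. Under that assumption the noise falls as 1/sqrt(N), just as with astro stacking. It does not model fixed-pattern noise, residual misregistration between series, or anything specific to the a7RIV, which are exactly the effects a real test would reveal. The function name `stacked_noise` is hypothetical.

```python
import random
import statistics

def stacked_noise(n_series, n_pixels=10000, sigma=1.0, seed=0):
    """Std. dev. of the per-pixel mean of n_series noisy frames.

    Assumes each frame = true signal + independent Gaussian noise (sigma).
    Fixed-pattern noise and registration errors are NOT modeled.
    """
    rng = random.Random(seed)
    means = [
        sum(rng.gauss(0.0, sigma) for _ in range(n_series)) / n_series
        for _ in range(n_pixels)
    ]
    return statistics.pstdev(means)

for n in (1, 2, 4, 8, 16):
    measured = stacked_noise(n)
    print(f"{n:2d} series: noise {measured:.3f}, 1/sqrt(N) predicts {1 / n ** 0.5:.3f}")
```

If real stacks of pixel-shift series fell short of this curve, that gap would point to a non-random component such as positioning error.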

JimK says

VCAs, same as the IBIS.

JimK says

That would require writing my own reconstruction software.

Horshack says

Jim, take a look at the edges of the boxes inside the test chart in the 16-shot image. You'll see checkerboard artifacts. They're particularly visible when viewed at 200%.

JimK says

Do you think that could come from camera motion?

Horshack says

It’s either camera motion or imprecision in Sony’s sub-pixel sensor movement.

Ilya Zakharevich says

Comparing the results of “dumb” reconstruction on DPR with their use of Imaging Edge, I would say that IE tries to calculate the “correct” sub-pixel shifts between the shots (or maybe uses some info in metadata on the expected residual errors).

And this could add a third alternative: imprecision in the calculation of the sub-pixel match between the images.
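That third alternative is easy to picture with a toy example. Nothing here reflects how Imaging Edge actually aligns frames; it is a minimal 1-D sketch of one common generic technique, integer cross-correlation followed by parabolic peak interpolation, with hypothetical function names. For a true shift of 0.3 pixel the estimate comes out close to, but not exactly, 0.3. That residual error is the kind of imprecision that could leave artifacts even if the camera and sensor behaved perfectly.

```python
import math

def correlation_scores(a, b, max_lag):
    """Sum of a[i] * b[i + lag] for every integer lag in [-max_lag, max_lag]."""
    scores = {}
    for lag in range(-max_lag, max_lag + 1):
        scores[lag] = sum(
            a[i] * b[i + lag] for i in range(len(a)) if 0 <= i + lag < len(b)
        )
    return scores

def estimate_shift(a, b, max_lag=3):
    """Estimate how far signal b is shifted to the right of a, in pixels.

    Takes the integer lag with the highest correlation, then refines it by
    fitting a parabola through that peak and its two neighbors.
    """
    scores = correlation_scores(a, b, max_lag)
    best = max(scores, key=scores.get)
    if abs(best) == max_lag:
        return float(best)  # peak sits at the edge of the search window
    left, mid, right = scores[best - 1], scores[best], scores[best + 1]
    denom = left - 2.0 * mid + right
    if denom == 0:
        return float(best)
    return best + 0.5 * (left - right) / denom

def gaussian_bump(n, center, width):
    """A smooth test profile standing in for a star or other small feature."""
    return [math.exp(-((i - center) / width) ** 2) for i in range(n)]

reference = gaussian_bump(40, 20.0, 3.0)
shifted = gaussian_bump(40, 20.3, 3.0)
print(round(estimate_shift(reference, shifted), 3))
```

The parabola is only an approximation to the true correlation peak, so the estimate is slightly biased toward the nearest whole pixel; real aligners use more elaborate fits, but none is error-free.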

JimK says

Probably true.

FredD says

Might I suggest differencing, in Photoshop, a 16-shot pixel-shift series against a) another 16-shot pixel-shift series, b) a 4-shot pixel-shift series, and c) a non-pixel-shift shot. For the latter two, you'd have to upscale the non-16-shot image; I'm not sure what the best algorithm would be.
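The differencing test can be sketched in a few lines of Python. This is an illustration, not a Photoshop workflow: `difference` computes the absolute per-pixel difference, which is what Photoshop's Difference blend mode shows, and nearest-neighbor upscaling by 2 is an assumed stand-in, chosen because the 16-shot composite has twice the linear resolution of a single or 4-shot frame. Images are plain 2-D lists of gray values, and all function names are hypothetical.

```python
def upscale_nearest(image, factor=2):
    """Nearest-neighbor upscale of a 2-D list: each pixel becomes a factor x factor block."""
    return [
        [row[c // factor] for c in range(len(row) * factor)]
        for row in image
        for _ in range(factor)
    ]

def difference(a, b):
    """Absolute per-pixel difference of two same-sized images."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have identical dimensions")
    return [[abs(x - y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def mean_abs_difference(a, b):
    """Single-number summary: the average of the difference image."""
    diff = difference(a, b)
    return sum(map(sum, diff)) / sum(len(row) for row in diff)

# Tiny example: a 2x2 "4-shot" image vs. a 4x4 "16-shot" image with one changed pixel.
four_shot = [[10, 20], [30, 40]]
sixteen_shot = upscale_nearest(four_shot)
sixteen_shot[0][0] = 14
print(mean_abs_difference(upscale_nearest(four_shot), sixteen_shot))
```

Nearest-neighbor upscaling adds its own blockiness, so a smoother resampler such as bilinear or Lanczos would likely leave less spurious signal in the difference image; which one is best is the open question raised above.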

Erica says

What about the delay between moving sensor and taking another picture? Is the minimum 0.5 seconds enough?

JimK says

I don’t know. I should probably test.

Arbitrary Gnomes says

This is old, I know, but Sony seems to have updated the pixel shift algorithm in Imaging Edge, probably earlier this year… I recently had the update message pop up (with zero information about features, of course) and, for the heck of it, opened a 16-shot pixel shift .arq I took at dusk (not thinking about it) with clouds blowing through and relatively long exposures.

Earlier versions of Imaging Edge created a hot rainbow mess on the edges of all motion in a scene and even weirder maze artifacts where, for example, a car’s headlights left a trail through the images. They also “finished” readying the .arq for display in about 30s.

The new versions have removed practically all of the artifacts… the clouds in the picture were now a smooth blur instead of a psychedelic puff. They had some weird dithering artifacts on the leading and trailing edges, but nothing severe.

It now also takes almost 5 minutes to finish the tiles of the picture… but it's doing a better job than RawTherapee with the motion compensation stuff turned on.

I'd suggest anyone who was having issues with that try it again. I rarely used even the 4-shot version, because almost all of my photos are outdoors and it's a rare day when everything is completely still and a car or stoned disc golfer doesn't end up in front of the camera.

JimK says

Thanks for that update. I’m in the process of selling all my Sony FF cameras and lenses, so I won’t test that myself.