There is a persistent legend in the digital photography world that color space conversions cause color shifts and should be avoided unless absolutely necessary. Fifteen years ago, there were strong reasons for that way of thinking, but times have changed, and I think it’s time to take another look.

First off, there are several color space conversions that are unavoidable. Your raw converter needs to convert from your camera’s “color” space to your preferred working space. I put the word “color” in quotes because your camera doesn’t actually see colors the way your eye does. Once the image is in your chosen working space, whether it be ProPhotoRGB, Adobe RGB, or — God help you — sRGB, it needs to be converted into your monitor’s color space before you can see it. It needs to be converted into your printer’s (or printer driver’s) color space before you can print it.

So the debate about changing color spaces is really a debate about changing the working color space of an image.

The reason why changing the working color space used to be dangerous is that images were stored with 8 bits per color plane. That was barely enough to represent colors accurately enough for quality prints, and not really enough to allow aggressive editing without creating visible problems. To make matters worse, different color spaces had different problem areas, so moving your image from one color space to another and back could cause posterization and the dreaded “histogram depopulation”.

Many years ago, image editors started the gradual migration towards (15 or) 16 bit per color plane representation, allowing about (30,000 or) 60,000 values in each plane rather than the 256 of the 8-bit world. This changed the fit of images into editing representations from claustrophobic to wide-open. Unless you’re trying to break something, there is hardly a move you can make that’s going to cause posterization.
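To make the difference between the two worlds concrete, here is a minimal Python sketch of histogram depopulation. It is an illustration under assumptions, not a model of any particular editor: a simple gamma change (2.2/1.8) stands in for a real working-space conversion, and `surviving_levels` is a made-up helper name. It pushes all 256 8-bit levels through the curve, stores the intermediate result at a chosen bit depth, undoes the curve, and counts how many distinct levels come back.

```python
def surviving_levels(bits, gamma=2.2 / 1.8):
    """Count how many of the 256 8-bit input levels are still distinct after a
    tone-curve round trip whose intermediate result is stored with `bits` bits.

    The gamma change is only a stand-in for a working-space conversion.
    """
    top = 2 ** bits - 1
    outputs = set()
    for v in range(256):
        stored = round((v / 255) ** gamma * top)   # forward curve, then quantize
        restored = (stored / top) ** (1 / gamma)   # inverse curve
        outputs.add(round(restored * 255))         # back to an 8-bit level
    return len(outputs)


for bits in (8, 16):
    print(f"{bits}-bit intermediate: {surviving_levels(bits)} of 256 levels survive")
```

With an 8-bit intermediate, neighboring shadow levels collapse onto the same code and never separate again, which is what leaves gaps in the histogram. With a 16-bit intermediate, every level should come back where it started.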

But the fear of changing working spaces didn’t abate. Instead of precision (the computer science word for bit depth) being the focus, the spotlight turned to the conversion process itself being inaccurate.

Before I get to that, there’s another thing I need to get out of the way. Not all working spaces can represent all the colors you can see, and the ones that can’t don’t all exclude the same set of colors. So, if you’ve got an image in, say, Adobe RGB, and you’d like to convert it to, say, sRGB, any colors in the original image that can’t be represented in sRGB will be mapped to colors that can. If you decide to take your newly sRGB image and convert it back to Adobe RGB, you won’t get those remapped colors back. One name for this phenomenon is gamut clipping.
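Here is a small Python sketch of that one-way trip. It is a rough illustration under stated assumptions: the matrices are the commonly published rounded D65 values, the out-of-gamut handling is a plain per-channel clamp in linear sRGB (real color management modules may remap differently depending on rendering intent), and the helper names are mine.

```python
# Linear RGB -> XYZ matrices for a D65 white point. The digits are the commonly
# published rounded values and are an assumption of this sketch.
SRGB_TO_XYZ = [[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]]
ADOBE_TO_XYZ = [[0.5767309, 0.1855540, 0.1881852],
                [0.2973769, 0.6273491, 0.0752741],
                [0.0270343, 0.0706872, 0.9911085]]
ADOBE_GAMMA = 563 / 256  # Adobe RGB (1998) exponent, about 2.2


def mat_vec(m, v):
    return [sum(m[r][k] * v[k] for k in range(3)) for r in range(3)]


def inverse3(m):
    """Invert a 3x3 matrix via the adjugate so each inverse matches its forward matrix."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
            [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
            [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]


XYZ_TO_SRGB = inverse3(SRGB_TO_XYZ)
XYZ_TO_ADOBE = inverse3(ADOBE_TO_XYZ)


def srgb_decode(c):
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_encode(l):
    return 12.92 * l if l <= 0.0031308 else 1.055 * l ** (1 / 2.4) - 0.055


def adobe_to_srgb(rgb):
    """Return (encoded sRGB after clamping, unclamped linear sRGB)."""
    xyz = mat_vec(ADOBE_TO_XYZ, [c ** ADOBE_GAMMA for c in rgb])
    linear = mat_vec(XYZ_TO_SRGB, xyz)
    clipped = [min(1.0, max(0.0, l)) for l in linear]  # the gamut clipping step
    return [srgb_encode(l) for l in clipped], linear


def srgb_to_adobe(rgb):
    xyz = mat_vec(SRGB_TO_XYZ, [srgb_decode(c) for c in rgb])
    linear = mat_vec(XYZ_TO_ADOBE, xyz)
    return [min(1.0, max(0.0, l)) ** (1 / ADOBE_GAMMA) for l in linear]


adobe_green = [0.0, 1.0, 0.0]                 # most saturated Adobe RGB green
as_srgb, unclipped = adobe_to_srgb(adobe_green)
back = srgb_to_adobe(as_srgb)
print("linear sRGB before clamping:", unclipped)  # negative entries = out of gamut
print("Adobe RGB after the round trip:", back)

gray_back = srgb_to_adobe(adobe_to_srgb([0.5, 0.5, 0.5])[0])
print("mid-gray after the round trip:", gray_back)
```

The saturated green comes back as a visibly different Adobe RGB triplet, because the information that was clamped away is gone. The mid-gray, which both spaces can represent, should survive the same trip essentially unchanged.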

There are two ways of specifying color spaces. The most accurate way is to specify a mathematical model for converting to and from some lingua franca color space such as CIE 1931 XYZ or CIE 1976 CIEL*a*b*. If this method is used, assuming infinite precision for the input color space and for all intermediate computations, perfect accuracy is theoretically obtainable. Stated with the epsilon-delta formulation beloved by mathematicians the world over: given an allowable error epsilon, there exists a precision, delta, that allows conversion of a given color triplet between any pair of model-based spaces with an error no greater than epsilon, assuming that the color can be represented in both spaces. Examples of model-defined color spaces are Adobe (1998) RGB, sRGB, ProPhoto RGB, CIEL*a*b*, and CIEL*u*v*.
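As a small illustration of what "model-based" buys you, here is a Python sketch of the tone curves for two of those spaces. Each curve is a formula with an explicit inverse, so a round trip in double precision should land back on the starting value to within rounding error. The constants are the standard published ones as I understand them; the function names are just labels for this sketch.

```python
ADOBE_GAMMA = 563 / 256  # Adobe RGB (1998) exponent, about 2.2


def srgb_decode(c):
    """Encoded sRGB value in [0, 1] -> linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_encode(l):
    """Linear light in [0, 1] -> encoded sRGB value."""
    return 12.92 * l if l <= 0.0031308 else 1.055 * l ** (1 / 2.4) - 0.055


def adobe_decode(c):
    return c ** ADOBE_GAMMA


def adobe_encode(l):
    return l ** (1 / ADOBE_GAMMA)


# Round-trip 1001 evenly spaced code values through each curve and its inverse.
samples = [i / 1000 for i in range(1001)]
srgb_error = max(abs(srgb_encode(srgb_decode(c)) - c) for c in samples)
adobe_error = max(abs(adobe_encode(adobe_decode(c)) - c) for c in samples)
print("worst sRGB curve round-trip error: ", srgb_error)
print("worst Adobe curve round-trip error:", adobe_error)
```

A full conversion adds a 3x3 matrix to and from XYZ on top of these curves, but the principle is the same: every step is arithmetic that can be inverted, so the only error left is the precision of the arithmetic itself.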

The other way to define a color space is to take a bunch of measurements and build three-dimensional lookup tables for converting to and from a lingua franca color space. These conversions are inherently inaccurate, being limited by the accuracy of the measurement devices, the number of measurements, the number of entries in the lookup table, the precision of those entries, the interpolation algorithm, the stability of the device itself, and the phase of the moon. Fortunately, but not coincidentally, all of the working color spaces available to photographers are model-based.

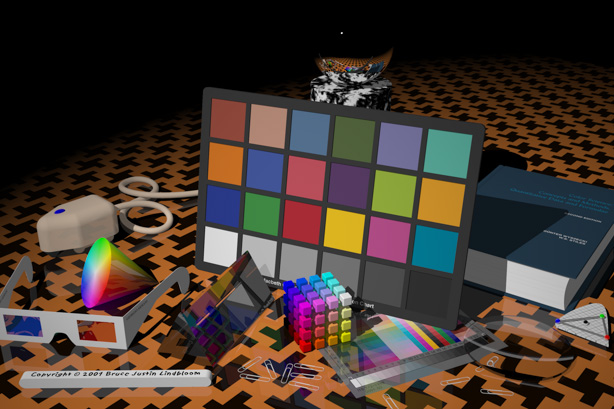

I set up a test. I took an sRGB version of this image of Bruce Lindbloom’s imaginary, synthetic, desk:

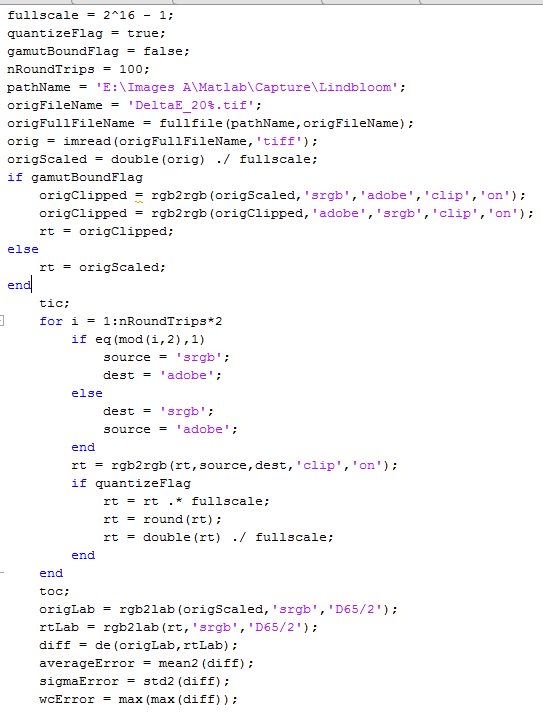

I brought it into Matlab, and converted it to 64-bit floating point representation, with each color plane mapped into the region [0, 1].

I converted it to Adobe RGB, then back to sRGB, and computed the distance between the original and the round-trip-converted image in CIELab DeltaE. I measured the average error, the standard deviation, and the worst-case error and recorded them.

Then I did the pair of conversions again.

And again, and again, for a total of 100 round trips. Here’s the code:

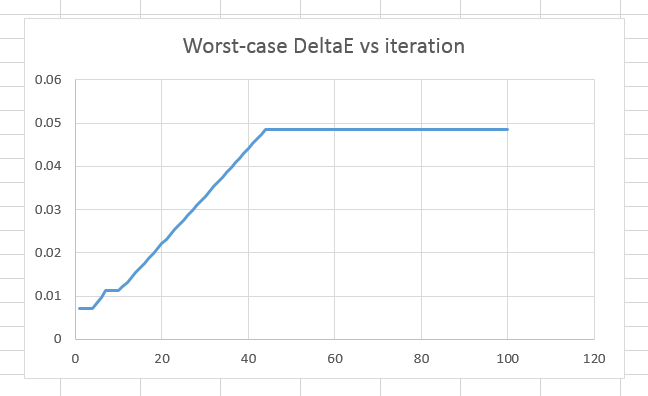

Here’s what I got:

The first thing to notice is how small the errors are. One DeltaE is roughly the amount of difference in color that you can just notice. We’re looking at worst-case errors after 100 conversions that are five trillionths of that just-noticeable difference.

Unfortunately, the working color spaces of our image editors don’t normally have that much precision. 16-bit integer precision is much more common. If we run the program above and tell it to convert every color in the image to 16-bit integer precision after every conversion, this is what we get:

It’s a lot worse, but the worst-case error is still about 5/100 of a DeltaE, and we’re not going to be able to see that.
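If you'd like to try something along these lines without Matlab, here is a rough Python sketch of both experiments. It is not the code I ran: it uses a small grid of 125 synthetic sRGB colors instead of the desk image, the commonly published rounded D65 matrices, plain Euclidean CIELab DeltaE, and helper names invented for the sketch. Passing `bits=16` rounds every value to 16-bit integer precision after each conversion; leaving it out keeps everything in double precision.

```python
import math
from itertools import product

# Linear RGB -> XYZ matrices (D65); commonly published rounded values, assumed here.
SRGB_TO_XYZ = [[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]]
ADOBE_TO_XYZ = [[0.5767309, 0.1855540, 0.1881852],
                [0.2973769, 0.6273491, 0.0752741],
                [0.0270343, 0.0706872, 0.9911085]]
ADOBE_GAMMA = 563 / 256
D65_WHITE = (0.95047, 1.0, 1.08883)


def mat_vec(m, v):
    return [sum(m[r][k] * v[k] for k in range(3)) for r in range(3)]


def inverse3(m):
    """Invert a 3x3 matrix via the adjugate so each inverse matches its forward matrix."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
            [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
            [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]


XYZ_TO_SRGB = inverse3(SRGB_TO_XYZ)
XYZ_TO_ADOBE = inverse3(ADOBE_TO_XYZ)


def clamp01(x):
    return min(1.0, max(0.0, x))


def srgb_decode(c):
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_encode(l):
    return 12.92 * l if l <= 0.0031308 else 1.055 * l ** (1 / 2.4) - 0.055


def srgb_to_adobe(rgb):
    xyz = mat_vec(SRGB_TO_XYZ, [srgb_decode(c) for c in rgb])
    return [clamp01(l) ** (1 / ADOBE_GAMMA) for l in mat_vec(XYZ_TO_ADOBE, xyz)]


def adobe_to_srgb(rgb):
    xyz = mat_vec(ADOBE_TO_XYZ, [c ** ADOBE_GAMMA for c in rgb])
    return [srgb_encode(clamp01(l)) for l in mat_vec(XYZ_TO_SRGB, xyz)]


def quantize(rgb, bits):
    """Round to `bits`-bit integer precision; bits=None leaves doubles untouched."""
    if bits is None:
        return rgb
    top = 2 ** bits - 1
    return [round(c * top) / top for c in rgb]


def srgb_to_lab(rgb):
    xyz = mat_vec(SRGB_TO_XYZ, [srgb_decode(c) for c in rgb])

    def f(t):
        return t ** (1 / 3) if t > (6 / 29) ** 3 else t / (3 * (6 / 29) ** 2) + 4 / 29

    fx, fy, fz = (f(v / w) for v, w in zip(xyz, D65_WHITE))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def worst_delta_e(colors, trips, bits=None):
    """Worst CIELab DeltaE between each start color and its value after `trips` round trips."""
    worst = 0.0
    for start in colors:
        rgb = list(start)
        for _ in range(trips):
            rgb = quantize(srgb_to_adobe(rgb), bits)
            rgb = quantize(adobe_to_srgb(rgb), bits)
        worst = max(worst, math.dist(srgb_to_lab(start), srgb_to_lab(rgb)))
    return worst


# 5 x 5 x 5 grid of interior sRGB colors that sit exactly on 8-bit (and 16-bit) codes.
levels = [v / 255 for v in (16, 64, 128, 192, 240)]
colors = list(product(levels, repeat=3))

worst_float = worst_delta_e(colors, 100)
worst_16bit = worst_delta_e(colors, 100, bits=16)
print("worst DeltaE after 100 round trips, double precision:", worst_float)
print("worst DeltaE after 100 round trips, 16-bit integers: ", worst_16bit)
```

The exact figures will differ from mine because the test colors and the implementation differ, but the pattern should be the same: a vanishingly small error in double precision, and a larger but still sub-threshold error with 16-bit rounding.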

How do the color space conversion algorithms in Photoshop compare to the ones I was using in Matlab? Stay tuned.
