In the previous post, I noted small (sub-LSB) differences in average dark-field images from compressed and uncompressed raw files from the Sony a7RII. This post repeats the testing and plotting for the a7II.

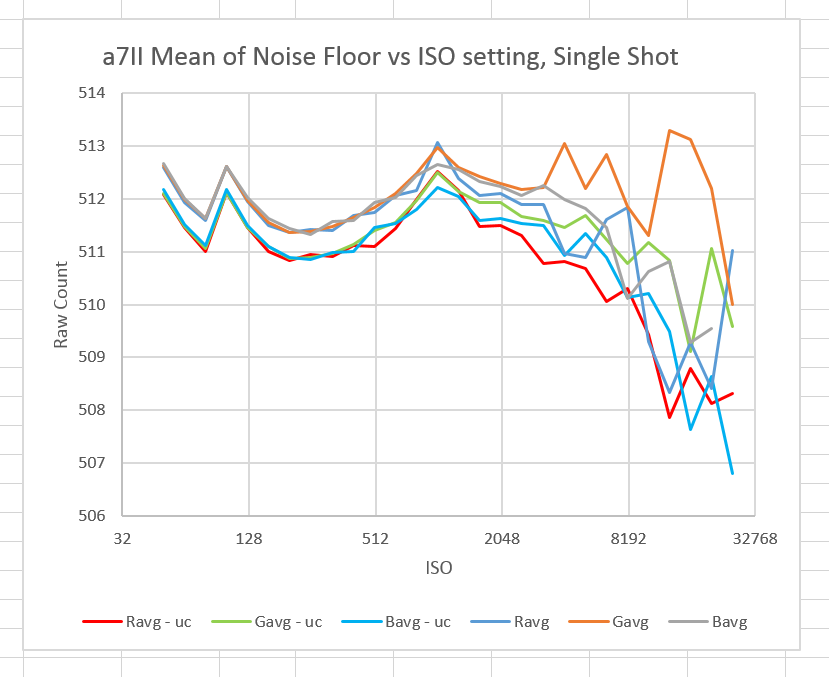

I took a look at the mean values of a 200×200 central square of the same raw dark-field images that I’d used for the earlier posts:

Can’t tell much with all three (r, b, and one g) channels, can we?

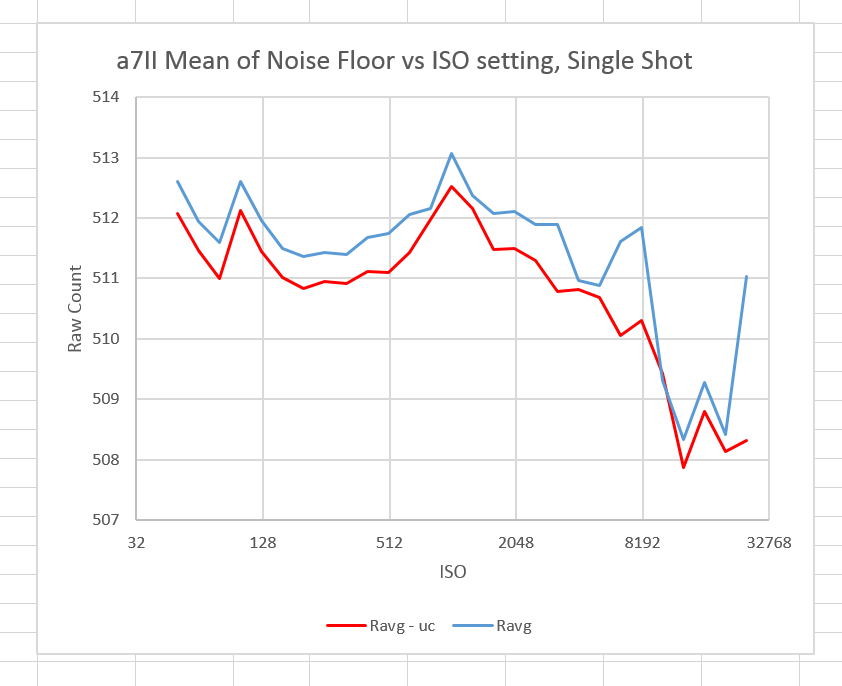

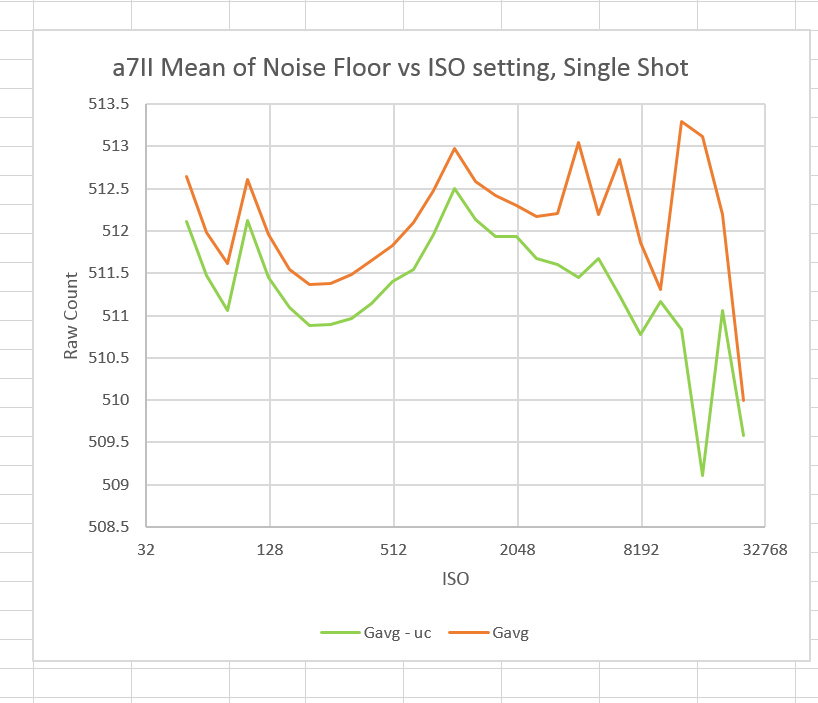

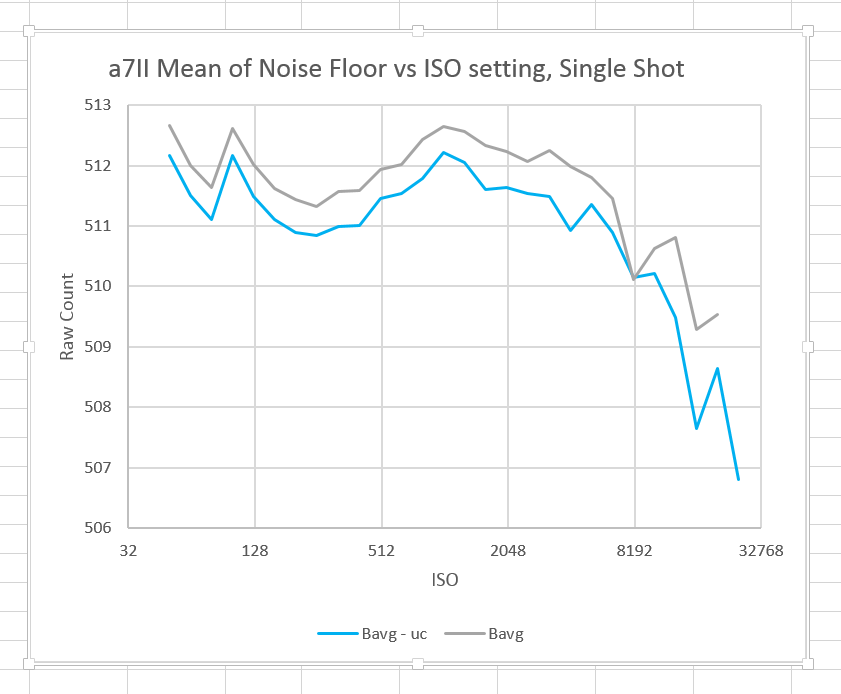

And next, each of the raw channels:

This is the same kind of thing we saw with the a7RII. At the low ISO values, there is a systematic bias, with the compressed (no suffix) means being higher than the uncompressed (“- uc” suffix) means. The difference is only about half a least-significant bit, with the uncompressed values closer to the nominal black point of 512.
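For anyone who wants to repeat the measurement outside RawDigger, here is a minimal sketch of the central-square channel means and the compressed-vs-uncompressed comparison. It assumes the raw mosaic has already been loaded as a 2-D array (that step isn't shown), an RGGB color filter layout, and that the 200×200 square is taken on the full mosaic, so each channel contributes 100×100 samples. The function names and the offsets table are hypothetical, not RawDigger's method.

```python
import numpy as np

# Assumption: RGGB Bayer layout. Each entry is the (row, col) of that
# channel's first site in a 2x2 tile; check the real CFA pattern first.
RGGB_OFFSETS = {"R": (0, 0), "G1": (0, 1), "G2": (1, 0), "B": (1, 1)}


def central_channel_means(raw, size=200, offsets=RGGB_OFFSETS):
    """Mean of each raw channel over a size x size central square of the mosaic."""
    raw = np.asarray(raw, dtype=np.float64)
    h, w = raw.shape
    # Round the crop origin down to even coordinates so the Bayer phase
    # (and therefore the channel offsets above) is preserved.
    top = ((h - size) // 2) & ~1
    left = ((w - size) // 2) & ~1
    crop = raw[top:top + size, left:left + size]
    # Every second row/column starting at the channel's offset.
    return {name: crop[r::2, c::2].mean() for name, (r, c) in offsets.items()}


def compare_to_black(means_c, means_uc, black=512.0):
    """Per channel: compressed minus uncompressed mean (in LSB), and each
    mean's distance from the nominal black point."""
    return {
        ch: {
            "delta_lsb": means_c[ch] - means_uc[ch],
            "c_minus_black": means_c[ch] - black,
            "uc_minus_black": means_uc[ch] - black,
        }
        for ch in means_c
    }
```

Running `compare_to_black` on the channel means of a compressed and an uncompressed dark frame at each ISO gives, per channel, the compressed-minus-uncompressed difference in LSB and how far each mean sits from the nominal 512 black point.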

As before, it is possible that this offset is real. It is also possible that RawDigger’s rounding algorithm for compressed files is slightly different from Sony’s. If different raw developers use different rounding algorithms, we could see raw-developer-dependent color shifts in extremely hard-pushed images. If the slight offset is real, then we could see raw-developer-independent color shifts in extremely hard-pushed images.
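To make the rounding hypothesis concrete, here is a toy model. It is not Sony’s encoder or RawDigger’s decoder, neither of which is described here; it assumes a generic uniform quantizer that truncates values into bins of width `step`, and shows how the decoder’s choice of reconstruction point moves the mean of a noisy dark frame. When the noise is comparable to or larger than the step, decoding to the bottom of each bin biases the mean low by about half a step, while decoding to the bin center removes the bias. All function names are hypothetical.

```python
import numpy as np


def quantize(x, step):
    # Hypothetical encoder: truncate (floor) each value to a bin index.
    return np.floor(np.asarray(x, dtype=np.float64) / step)


def reconstruct(codes, step, mode):
    # Two possible decoder conventions for turning a bin index back into a value.
    if mode == "bottom":   # lower edge of the bin
        return codes * step
    if mode == "center":   # midpoint of the bin
        return (codes + 0.5) * step
    raise ValueError(f"unknown mode: {mode}")


def mean_bias(x, step, mode):
    """Mean of the decoded values minus the mean of the original values."""
    x = np.asarray(x, dtype=np.float64)
    return reconstruct(quantize(x, step), step, mode).mean() - x.mean()
```

With simulated dark-frame values scattered around 512 with a few LSB of noise and `step=1.0`, `mean_bias(x, 1.0, "bottom")` comes out near -0.5 and the `"center"` version near zero. That is the same order as the offsets in the plots above, which is consistent with, but does not establish, a rounding explanation.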

Jack Hogan says

The question that comes to mind is: how do raw converters determine the black level without optical black pixels? There can be a substantial difference from ISO to ISO.

Chris Livsey says

I thought there were “reference” pixels at the sensor edge in permanent darkness. But I’m no expert.