When I did the analyses of images produced with the Sony a7R and a7II and the Sony 70-200mm f/4 OSS FE lens with mountings of varying stability, I used MTF50 as a metric for sharpness, and presented the results as graphs with that metric as the vertical axis. Over on the DPR E-Mount forum, my results were attacked by some who said that they didn’t want to see graphs, just pictures.

I answered as follows:

First off, in the case of camera vibration and its effect on image sharpness, the statistics are what’s important. Sure, I could take a single shot at each SteadyShot setting and shutter speed and post those shots, but it wouldn’t mean much. Just because of the luck of the draw, we might get a sharp shot from a series that’s mostly blurry, or a blurry shot from a series that’s mostly sharp. Then you’d get the wrong idea about which setup was better than which.

Second, once I’ve stepped up to making enough exposures to get reasonably accurate statistics — and I would like to do even more than the 16 per data point that I now do — we’re talking a lot of exposures. Each graph that I post is the result of analyzing 320 exposures. You don’t really want to look at all 320, do you?
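A minimal sketch of the luck-of-the-draw argument follows. It assumes, purely for illustration, that the MTF50 values from one setup are independent and normally distributed; the two means and the standard deviation are made-up numbers, not measurements.

```python
import math

def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def prob_worse_looks_better(mean_better: float, mean_worse: float,
                            sigma: float, n: int = 1) -> float:
    """Chance that the mean of n shots from the worse setup exceeds
    the mean of n shots from the better setup."""
    # The difference of two n-shot means has std dev sigma * sqrt(2 / n).
    sd_diff = sigma * math.sqrt(2.0 / n)
    return normal_cdf((mean_worse - mean_better) / sd_diff)

# Hypothetical values in cy/ph, chosen only to illustrate the effect.
BETTER, WORSE, SIGMA = 1400.0, 1200.0, 300.0

p_single = prob_worse_looks_better(BETTER, WORSE, SIGMA, n=1)
p_sixteen = prob_worse_looks_better(BETTER, WORSE, SIGMA, n=16)
print(f"1 shot per setup:   wrong ranking {p_single:.1%} of the time")
print(f"16 shots per setup: wrong ranking {p_sixteen:.1%} of the time")
```

With these assumed numbers, a single pair of shots ranks the two setups the wrong way round roughly one time in three, while averages of 16 shots do so only a few percent of the time.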

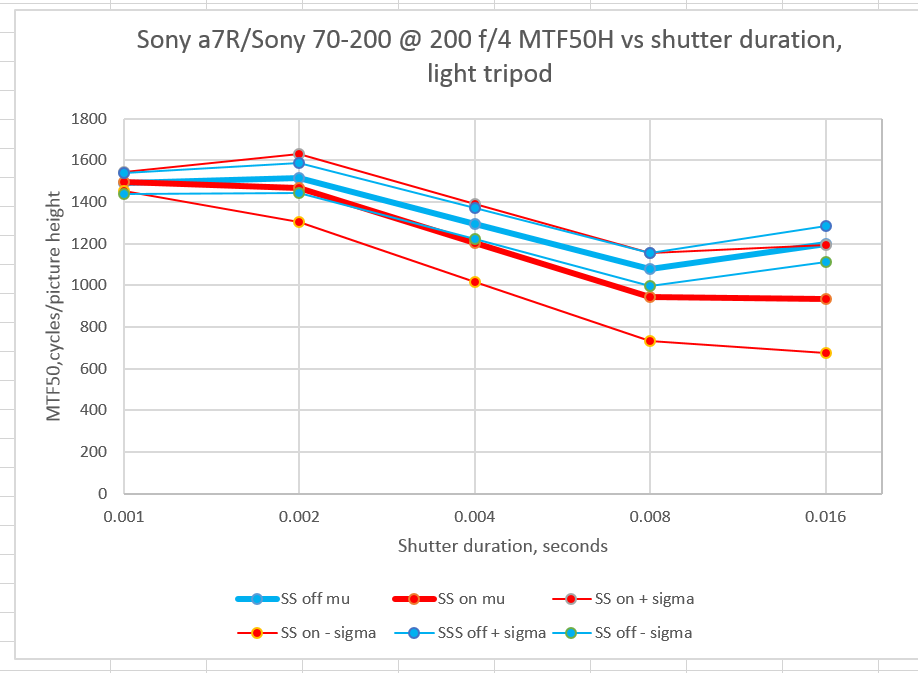

But the requests (and I’m characterizing them politely) got me thinking. I’ve been working with slanted edge targets and MTF analyses for more than a year. I’ve got a reasonable feel for what, say, 1800 cycles/picture height (cy/ph) means in terms of sharpness (really crisp), and what 400 cy/ph means (pretty mushy). But most people don’t. So, when I present curves like the following:

People can tell that higher up on the page is sharper, and sharper is better, but they don’t have a feel for how to interpret how sharp any point of the curve is. They need a Rosetta Stone to translate between various MTF50 values and images that they can look at and judge sharpness for themselves.
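One small piece of that translation is plain arithmetic: converting cy/ph into pixel terms. The sketch below assumes a picture height of 4912 pixels (the short side of a 7360 x 4912 a7R frame in landscape orientation); the function name is hypothetical.

```python
def describe_mtf50(cy_per_ph: float, height_px: int) -> dict:
    """Express an MTF50 given in cycles/picture height in pixel units."""
    cycles_per_pixel = cy_per_ph / height_px
    return {
        "cycles_per_pixel": cycles_per_pixel,
        "pixels_per_cycle": 1.0 / cycles_per_pixel,
        # Nyquist is 0.5 cycles/pixel, the finest pattern the sensor can sample.
        "fraction_of_nyquist": cycles_per_pixel / 0.5,
    }

HEIGHT_PX = 4912  # assumption: a7R frame height in pixels

for mtf50 in (1800, 400):
    d = describe_mtf50(mtf50, HEIGHT_PX)
    print(f"{mtf50} cy/ph: {d['pixels_per_cycle']:.1f} px per cycle, "
          f"{d['fraction_of_nyquist']:.0%} of Nyquist")
```

Under that assumption, 1800 cy/ph means contrast has fallen to half for line pairs a little under three pixels wide, while at 400 cy/ph that already happens for line pairs about twelve pixels wide.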

I started thinking about how to provide that bridge between the two worlds.

My first thought, and what I still think is the high road, is to do it all in my camera simulator. Start out with a slanted edge. Dial in some diffraction, some motion blur, some defocusing, take the captured image, run it through a slanted edge analyzer, and get the MTF50. Then, leaving the simulator settings the same, run a natural world photograph through the simulator. Do that with various amounts of simulated camera blur, and we’ll get a series of images that people can look at, and we’ll know their MTF50. There’s one little technical problem: the natural world images will have photon noise (that’s why I’ve been using Bruce Lindbloom’s ray traced desk so much). To a first approximation, I can deal with this by turning off the photon noise in the simulator.
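As a rough sketch of the idea (not the simulator itself), the MTF50 of a blur chain can be estimated without rendering any image, provided each blur is treated as linear and shift-invariant so that the individual MTFs simply multiply. Everything specific here is an assumption: an ideal circular aperture, green light at 0.55 µm, a 4.88 µm pixel pitch, uniform linear motion, a square pixel with 100% fill factor, and no defocus, AA filter, or demosaicing.

```python
import math

def sinc(x: float) -> float:
    return 1.0 if x == 0.0 else math.sin(x) / x

def diffraction_mtf(f: float, f_number: float,
                    pitch_um: float = 4.88, wavelength_um: float = 0.55) -> float:
    """MTF of an ideal circular aperture; f is in cycles/pixel."""
    cutoff = pitch_um / (wavelength_um * f_number)  # cutoff in cycles/pixel
    s = f / cutoff
    if s >= 1.0:
        return 0.0
    return (2.0 / math.pi) * (math.acos(s) - s * math.sqrt(1.0 - s * s))

def motion_mtf(f: float, blur_px: float) -> float:
    """MTF magnitude of uniform linear motion spanning blur_px pixels."""
    return abs(sinc(math.pi * f * blur_px))

def pixel_mtf(f: float) -> float:
    """MTF magnitude of a square pixel aperture one pixel wide."""
    return abs(sinc(math.pi * f))

def system_mtf(f: float, f_number: float, blur_px: float) -> float:
    return diffraction_mtf(f, f_number) * motion_mtf(f, blur_px) * pixel_mtf(f)

def mtf50_cy_per_ph(f_number: float, blur_px: float, height_px: int = 4912) -> float:
    """Frequency at which the system MTF first falls to 0.5, in cy/ph."""
    lo, hi = 0.0, 1.0  # cycles/pixel; the pixel MTF is zero at 1.0
    for _ in range(60):  # bisection on the single 0.5 crossing in [0, 1]
        mid = 0.5 * (lo + hi)
        if system_mtf(mid, f_number, blur_px) > 0.5:
            lo = mid
        else:
            hi = mid
    return lo * height_px

for blur in (0.0, 1.0, 2.0, 5.0):
    print(f"f/8, {blur:.0f} px of motion: {mtf50_cy_per_ph(8.0, blur):.0f} cy/ph")
```

Running a real photograph through the same blur settings would still need the image-domain simulation; this only predicts the number that a slanted edge analysis of such a chain should report.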

There’s another, more practical problem with this approach. Many, if not most, of the people whom I’d be trying to reach have a distrust/aversion/antipathy to math and science, and would have a hard time understanding what the simulator is doing, and a harder time believing that there wasn’t some nefarious activity going on.

So, I set the simulator approach aside, although I may pick it up again at some point in the future.

My next thought was to take a slanted edge target, plunk it down in the middle of a natural scene, photograph the whole thing with various shutter speeds, mounting arrangements, defocusing, etc., measure the MTF50 of all the shots, and publish blowups of various parts of the natural scene together with the MTF50 number for that shot. Easy peasy, right?

The more I thought about it the less easy it seemed.

If I were to go to all this trouble, I’d want things in the image with high spatial frequencies, or else the difference between, say, an MTF50 of 1600 cy/ph and one of 1200 cy/ph wouldn’t be noticeable.

I’d want natural objects that were flat enough to be in critical focus with lens openings wide enough to provide high on-sensor MTF, and that I could get close to the plane of the slanted edge chart. I’m starting to envy Lloyd Chambers his apparently permanent doll scene. I know that my wife would tolerate my setting something like that up for a day at most. I’ll get back to this.

The characteristics of anti-aliasing (AA) filter effects, diffraction, mis-focusing, and camera motion all are subtly different, even at the same MTF50. It would be nice to be able to change one without changing the others.
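To illustrate how two blurs can share an MTF50 and still differ, the sketch below matches a Gaussian blur and a uniform linear motion blur at the same MTF50 and compares them at other frequencies. The Gaussian is only a convenient stand-in (real defocus and AA-filter MTFs have different shapes), and the target of 0.2 cycles/pixel is arbitrary.

```python
import math

def gaussian_mtf(f: float, sigma_px: float) -> float:
    """MTF of a Gaussian blur with standard deviation sigma_px; f in cycles/pixel."""
    return math.exp(-2.0 * (math.pi * sigma_px * f) ** 2)

def motion_mtf(f: float, blur_px: float) -> float:
    """MTF magnitude of uniform linear motion spanning blur_px pixels."""
    x = math.pi * blur_px * f
    return 1.0 if x == 0.0 else abs(math.sin(x) / x)

F50 = 0.2  # arbitrary target MTF50 in cycles/pixel

# Gaussian: exp(-2 (pi sigma f)^2) = 0.5  =>  sigma = sqrt(ln 2 / 2) / (pi f)
sigma_px = math.sqrt(math.log(2.0) / 2.0) / (math.pi * F50)

# Motion: solve sin(x)/x = 0.5 on (0, pi) by bisection, then blur = x / (pi f)
lo, hi = 0.0, math.pi
for _ in range(60):
    mid = 0.5 * (lo + hi)
    if math.sin(mid) / mid > 0.5:
        lo = mid
    else:
        hi = mid
blur_px = lo / (math.pi * F50)

print(f"Gaussian sigma = {sigma_px:.2f} px, motion blur = {blur_px:.2f} px")
for mult in (0.5, 1.0, 1.5, 2.0):
    f = mult * F50
    print(f"{mult:.1f} x MTF50: gaussian {gaussian_mtf(f, sigma_px):.3f}, "
          f"motion {motion_mtf(f, blur_px):.3f}")
```

The two curves agree at the MTF50 frequency by construction but part ways on either side of it. The motion blur has a null and then a side lobe where the Gaussian just keeps decaying, which is one reason a single MTF50 number cannot fully describe how an image looks.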

If I’m going to make exposures at varying shutter speeds, because of the point above, it would be nice to do that without changing f-stop, since that will change lens characteristics. Several alternatives come to mind. One is changing the illumination level. That requires an indoor scene, and my variable-power LED source gets pretty dim if it’s expected to light a large area. I can use strobe illumination and get plenty of light, but can’t test camera motion effects that way. Another is using a variable neutral density (ND) filter in front of the lens. That costs more than a stop of light (in theory – in reality, closer to two stops), even when the ND filter is set to minimum attenuation. Another is just letting the lighting level drop, and pushing in post, or compensating with the in-camera ISO control. In both cases the noise level will rise as the light hitting the sensor goes down. Using fixed ND filters is just too error-prone; I know I’d knock the camera out of position changing them.

Getting enough light is a problem. If I want the fastest shutter speed to be 1/1000, and I do the exposures outside, and want to shoot at f/8, that means ISO 250. Throw a variable ND filter on there, and we’re up to close to 1000. Slanted edge software is really good at averaging out noise. Humans aren’t. Maybe I can get the target and the real-world objects close enough to the same plane and use f/5.6 and ISO 500. Going to f/4 and ISO 250 just seems like pushing it too far.
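The ISO figures above are consistent with the "sunny 16" rule of thumb (in full sun, f/16 at a shutter time of 1/ISO seconds) plus about two stops of loss in the variable ND filter. The rule is an assumption here, since the text does not say how the numbers were derived, and the function name is hypothetical.

```python
def required_iso(shutter_s: float, f_number: float, nd_stops: float = 0.0) -> float:
    """ISO needed in full sun under the sunny-16 rule of thumb.

    shutter_s: exposure time in seconds
    f_number:  lens aperture
    nd_stops:  light lost to a neutral density filter, in stops
    """
    # Sunny 16: at f/16 the ISO equals 1/shutter. Opening up from f/16
    # lowers the ISO by (N/16)^2; each stop of ND doubles it.
    return (1.0 / shutter_s) * (f_number / 16.0) ** 2 * 2.0 ** nd_stops

cases = [
    ("f/8, no filter", 8.0, 0.0),
    ("f/8, variable ND (~2 stops)", 8.0, 2.0),
    ("f/5.6, variable ND (~2 stops)", 5.6, 2.0),
    ("f/4, variable ND (~2 stops)", 4.0, 2.0),
]
for label, n, nd in cases:
    print(f"{label}: ISO {required_iso(1 / 1000, n, nd):.0f}")
```

The f/5.6 case comes out slightly under 500 only because 5.6 is a rounded value for the true one-stop step from f/8.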

Returning to the subject matter for the scene. I’m thinking that a piece of cloth with a fine weave (or at least one that is on the order of the pixel pitch when projected onto the sensor) would be good. Lloyd Chambers has those dolls with fine hair and eyelashes; maybe I could get a doll? Cereal and cracker boxes? Wine bottles? Feathers? Or just include a photograph in the scene?

Any and all comments and questions are appreciated.

RE what MTF 50 looks like. I have faced the quandary of what to use as a long term consistent target to evaluate and compare images. I ended up getting a doll and draping a fine texture, multi colour weave fabric and attaching a feather to the fabric. The fabric/feather and the doll’s eyes are precisely on the sensor plane. I ended up choosing this because in my experience, my brain interprets sharpness differently for different subjects. Eyes and feathers are especially easy for me to see small variations in sharpness. The eyes of my doll have fine lines extending through the white part. I do find it hard to distinguish smaller changes in sharpness in fabric.

Here is an image of my doll target: http://roryhill.zenfolio.com/p654908404/hc9dc486#hc9dc486

I’ll be interested to see what you end up using as your target. So many variables…

I suspect one of the take-aways will be that most of us will be hard put to see much difference until the MTF50 changes drastically.

That’s kind of why I’ve been liking the Imaging Resource test still lives more than the new DPR one. For my own part, I still shoot brick walls, sometimes stone, just to get a handle on things at my end. But I’m not keying my personal tests to MTF anything, or generating the stats you do.

Hi,

Now, after reading your article, I’m feeling the other way around: I want to understand how one sets up to measure an MTF chart… I mean, as you said, many of us are exposed to these graphs, but we don’t really know what those numbers mean…

Regards,

David

I like the idea of a flat fabric, maybe stretched in part and a little folded in others.

Of course the MTF gives an idea, but I test all my lenses in this way:

I take a white sheet of paper (slightly shiny, non-standard).

In Word I fill the whole sheet with text, alternating a line of letters and a line of numbers. Font: Verdana size 10! (important). Line spacing 1 or 1.5.

I glue this sheet onto a hard pad so that it does not bend.

Then I photograph the sheet so that the numbers and letters are visible from the center to the corners, at every aperture the lens offers (from f/0.95 to f/32).

Why?

1. Autofocus: is it set correctly from the factory?!

2. I can check the centre and corner sharpness. It shows me at which apertures the corners are sharp, and at which they are not!

3. I can see artifacts and chromatic aberration more easily. This helps me correct them in post-processing software!

4. For sharpening in post-processing software: not every lens needs the same sharpening radius for edges!!!

5. And distortion (helps with correction in post-processing).

And lastly, I use it to check whether I have bought a good lens or one with production faults.

Jim, I enjoyed your article and have been following the discussion on dpreview regarding A7R shutter slap at 200 mm.

I switched last year from Nikon to Sony because of my experience with a NEX7. I purchased an A6000, A7R and lenses. I purchased lens coverage of 16-50 and 55-210 for the A6000 and 16-35, 24-70 and 70-200 for the A7R. Unfortunately I didn’t know about the A7R shutter slap issue. I did some initial testing and believe it is not going to be a major problem with a few adjustments. However, I continue to test to make sure that I know how to best take advantage of my equipment in all shooting situations. Also, would you believe that I am using an MTF tool (QuickMTF) to better understand results?

As I said, I was initially concerned about the shutter shock issue; however, now my focus is on general quality and how to use the equipment to achieve it. I have tested several legacy lens systems (Nikon AI, Pentax, and Minolta Rokkor) and, of course, the f/4 Sony lenses. Most of my testing is in the 24-90 mm field-of-view range, because 98 percent of my digital photography over the last twelve years has been in that range.

Have you done much in this range? I will look through your blog over the next few days to see what I can glean.

Hi,

For demonstrating MTF50, what comes to my mind is shooting a subject with a well-corrected lens at different apertures and letting diffraction change the MTF50.

Best regards

Erik