A reader — thanks, Chris! — made a comment yesterday that I think raises some points worthy of attention.

So what needs to change in the camera, presuming the sharpness is in the OTUS, to get it out?

Physics says f4 and that’s not changing soon, unless we hear from the Large Hadron Collider.

So, setting aside atmospherics (again, we have no control), we can give support pretty well, leaving nailing focus and handling in-camera-generated vibration?

Without support, in real-world shooting we need stabilisation in camera.

Is there any reason, other than marketing, to go to 50MP in a “35mm” camera without fixing those two/three?

I believe there is.

I’ve done simulation studies that show that it will take on the order of half a billion Bayer-CFA’d pixels to get most of what’s in the Otus into the capture. That assumes that the center sharpness is available across the whole frame, which isn’t a good assumption, but because camera sensors are currently built with a constant pixel pitch, knowing that wouldn’t help anyway.
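To put half a billion pixels in perspective, here’s a back-of-the-envelope sketch (not the simulation itself) that converts a pixel count into the pitch it would need on a full-frame sensor. It assumes a 36 x 24 mm frame tiled completely with square pixels; real sensors differ slightly in size and aspect ratio.

```python
import math

def pixel_pitch_um(pixel_count, width_mm=36.0, height_mm=24.0):
    """Pitch in micrometres of square pixels that exactly tile the sensor."""
    area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)  # sensor area in square micrometres
    return math.sqrt(area_um2 / pixel_count)

# A D810-class 36 MP sensor, a 50 MP "35mm" sensor, and half a billion pixels.
for count in (36.3e6, 50e6, 500e6):
    print(f"{count / 1e6:6.1f} MP -> {pixel_pitch_um(count):.2f} um pitch")
```

That comes out at roughly 4.9 µm for 36 MP, 4.2 µm for 50 MP, and 1.3 µm for 500 MP, so the pitch would have to shrink by a factor of nearly four from where the D810 is now.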

More pixels will make it easier to focus with live view, too. The images that I’m focusing on with the D810 are so badly aliased that it’s difficult to tell whether one is sharper than another. If the lens weren’t so far ahead of the sensor in its MTF curve, that wouldn’t be the case.

And, more pixels will produce smoother images with less aliasing, even if they’re not sharper.
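Why a lens that out-resolves the sensor produces aliasing rather than just wasted sharpness can be sketched with a little frequency folding. This assumes ideal point sampling at the pixel pitch (real pixel apertures attenuate, but don’t prevent, aliasing) and treats all pixels as one grid; in a conventional Bayer demosaic the red and blue planes are sampled at twice the pitch. The 150 cy/mm detail is an arbitrary example.

```python
def nyquist_cy_per_mm(pitch_um):
    """Highest spatial frequency a sensor with this pitch can represent."""
    return 1000.0 / (2.0 * pitch_um)

def alias_cy_per_mm(detail_cy_per_mm, pitch_um):
    """Frequency at which a sinusoidal detail shows up after point sampling."""
    fs = 1000.0 / pitch_um                 # samples per mm
    folded = detail_cy_per_mm % fs         # sampling can't tell f from f + k*fs
    return min(folded, fs - folded)        # ...or from fs - f: fold about Nyquist

pitch = 4.88  # D810-like pitch, um
print(f"Nyquist: {nyquist_cy_per_mm(pitch):.1f} cy/mm")
print(f"150 cy/mm detail appears at {alias_cy_per_mm(150.0, pitch):.1f} cy/mm")
```

Detail above Nyquist doesn’t vanish; it shows up as coarser false detail, which is part of what makes judging focus on a magnified, aliased live-view image so hard.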

So, I’m all for more pixels, even if we don’t get a lot more sharpness.

Chris goes on to say:

And finally, a question: presumably, with, say, a Phase One IQ280, the f-stop is slightly bigger (5.2 pitch against 4.88), but it’s really close. So why, having spent that amount, don’t we get complaints about final sharpness and focus issues? Or is the repeatability at the level you are measuring just as bad, but no one looks?

There’s a big difference between what you can measure and what you can see. In my experience, there is little practical difference between the center sharpness of the Otus 55 and the Zony 55, even though I can repeatably measure differences between the two lenses. To give you an idea of what I’m talking about, let me show you what a sharp slanted edge looks like, and let you compare it to a not-so-sharp one.
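For readers who haven’t used the slanted-edge method, its core step is differentiating the edge profile and taking the Fourier transform of the result. The sketch below shows only that step. It assumes the edge has already been located, projected, and oversampled (the hard part, which Imatest handles), and it omits windowing and other refinements, so it is not Imatest’s algorithm. MTF50, the frequency where contrast drops to half, is one common single-number summary.

```python
import numpy as np

def mtf_from_esf(esf, dx):
    """Simplified slanted-edge step: edge spread -> line spread -> MTF.

    esf: 1-D edge profile, already projected along the edge and oversampled.
    dx:  spacing of the esf samples, in pixels (e.g. 0.25 for 4x oversampling).
    Returns (frequencies in cycles/pixel, MTF normalized to 1 at zero frequency).
    """
    lsf = np.diff(np.asarray(esf, dtype=np.float64))  # derivative of the edge profile
    mtf = np.abs(np.fft.rfft(lsf))
    mtf /= mtf[0]                                     # normalize to 1 at DC
    freqs = np.fft.rfftfreq(lsf.size, d=dx)
    return freqs, mtf

def mtf50(freqs, mtf):
    """First frequency at which the MTF falls to 0.5 (linear interpolation)."""
    below = np.nonzero(mtf < 0.5)[0]
    if below.size == 0:
        return None
    i = below[0]
    f0, f1, m0, m1 = freqs[i - 1], freqs[i], mtf[i - 1], mtf[i]
    return f0 + (m0 - 0.5) * (f1 - f0) / (m0 - m1)
```

For an edge blurred by a Gaussian with a standard deviation of one pixel, this reports an MTF50 of about 0.19 cycles/pixel, which matches the analytic value.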

Note: these edges were demosaiced without interpolation, using a technique that Jack Hogan suggested to me: white balancing (or, as I prefer, equalizing) the four raw layers. It’s a technique I’ve used in the past on deep-IR images, and it works on the Imatest target that I’m using because it is monochromatic.
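Here’s a minimal sketch of the equalizing idea, assuming a 2x2 CFA and a neutral (monochromatic) target: each of the four raw sub-planes is scaled so that they all agree on a flat patch, and no pixel is interpolated. The function name and the `flat` patch argument are hypothetical, for illustration only; they aren’t from any particular raw converter.

```python
import numpy as np

def equalize_bayer(raw, flat=None):
    """Equalize the four CFA sub-planes of a Bayer mosaic without interpolating.

    raw:  2-D raw mosaic (any 2x2 CFA layout, e.g. RGGB).
    flat: optional (r0, r1, c0, c1) bounds of a uniform gray patch used to
          compute the gains; r0 and c0 must be even so the CFA phase is kept.
          Defaults to the whole frame.
    """
    raw = np.asarray(raw, dtype=np.float64)
    if flat is None:
        ref = raw
    else:
        r0, r1, c0, c1 = flat
        assert r0 % 2 == 0 and c0 % 2 == 0, "patch must start on a CFA boundary"
        ref = raw[r0:r1, c0:c1]
    # Mean of each sub-plane over the reference area.
    means = {(r, c): ref[r::2, c::2].mean() for r in (0, 1) for c in (0, 1)}
    target = sum(means.values()) / 4.0
    out = np.empty_like(raw)
    for (r, c), m in means.items():
        out[r::2, c::2] = raw[r::2, c::2] * (target / m)  # per-channel gain
    return out
```

Because every output pixel is a scaled copy of one raw pixel, a neutral target comes out at full sensor resolution with no false color. A colored subject would break the equal-means assumption.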

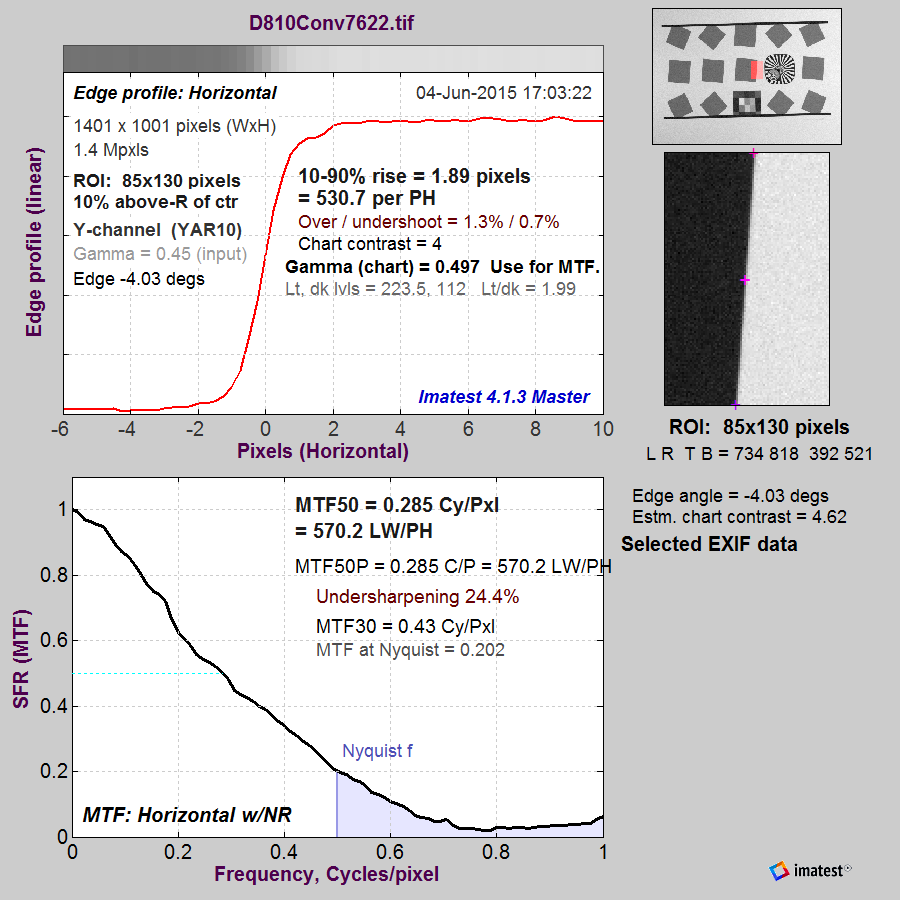

Here’s the plot of a reasonably sharp edge:

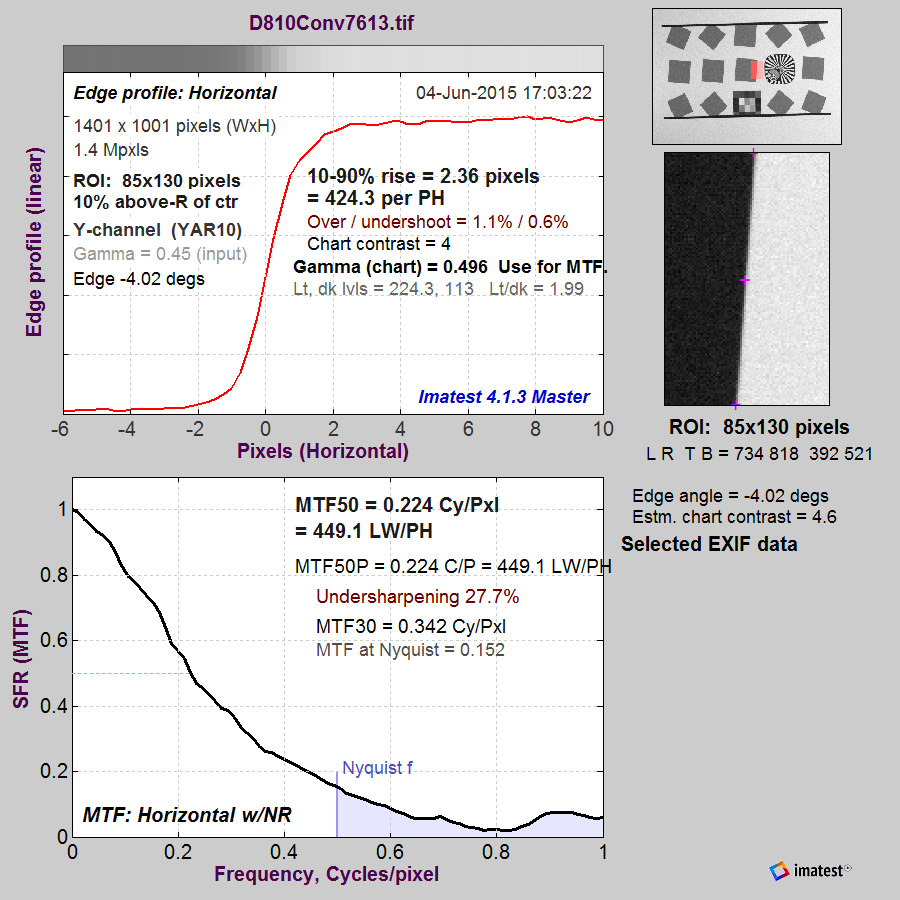

And here’s one that’s quite a bit worse:

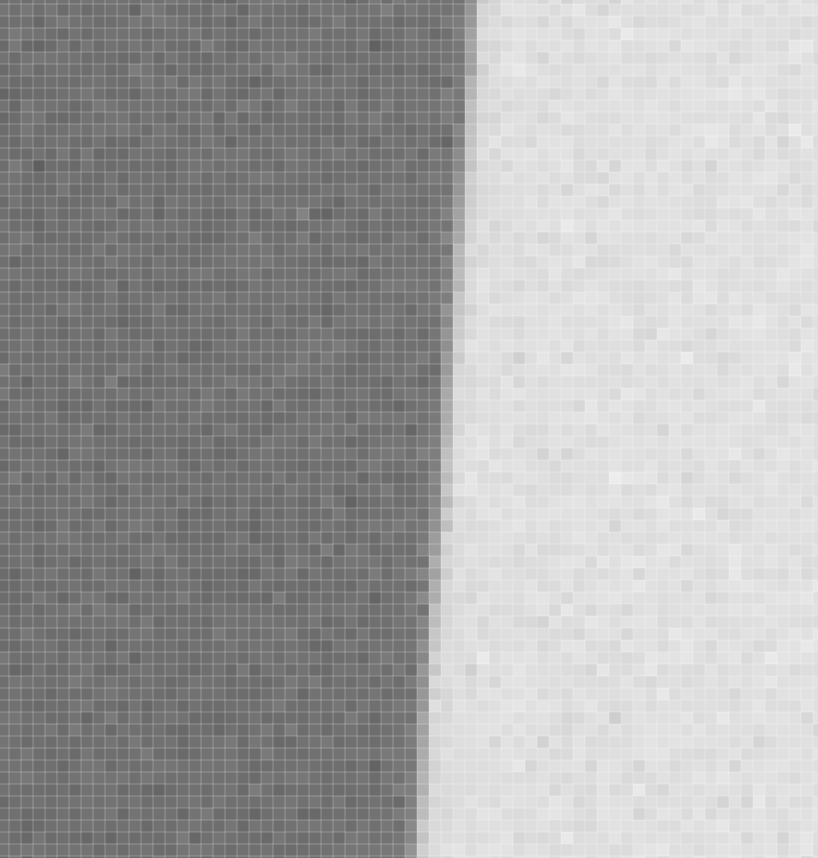

Here’s the better edge, blown up a lot:

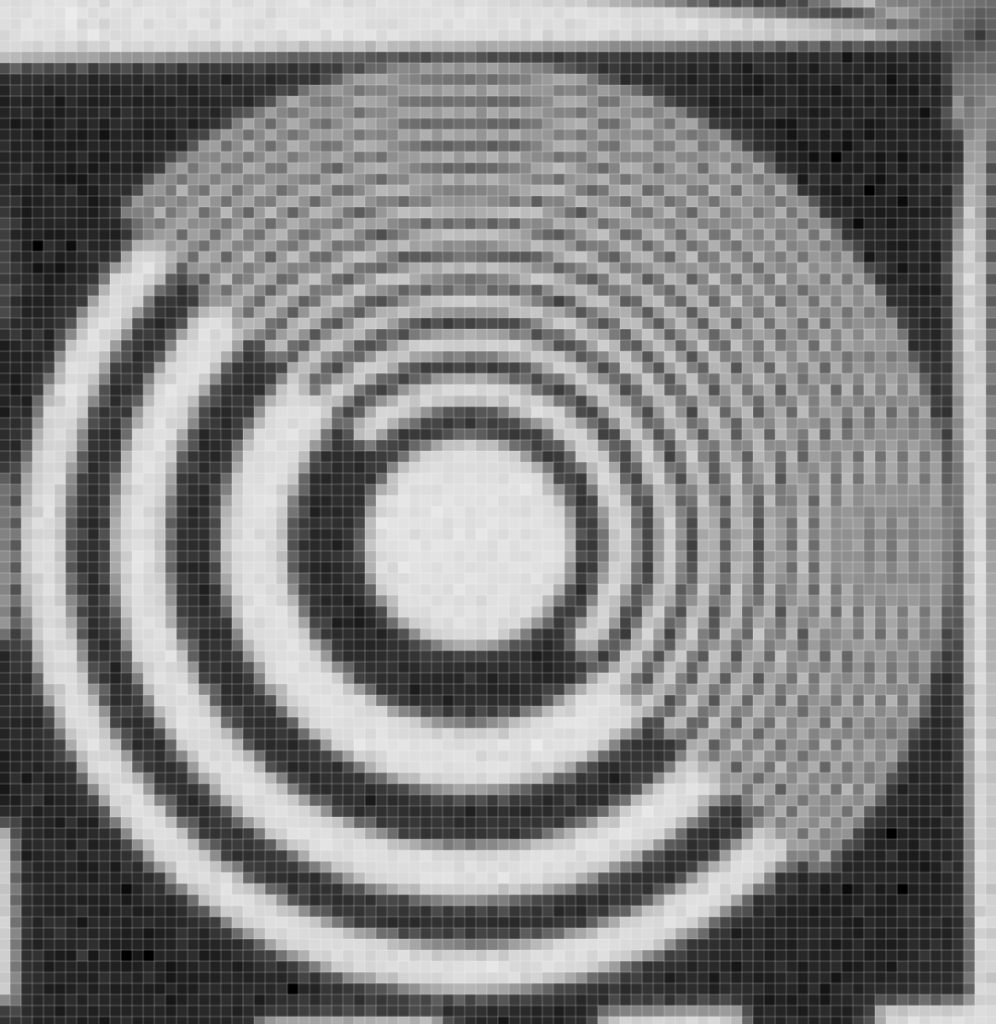

And here’s the not-so-good one:

Without the slanted edge calculations, I’d have a hard time saying that one was meaningfully sharper than the other, although you can see it in the focusing targets. Here’s the better one:

And the not-so-good one:

Bet you’ve not seen aliasing like that from a Bayer CFA without any false color. That’s the beauty of the white balance demosaicing technique. Too bad it only works for monochromatic subjects.

Also, let me point out here that, absent the false color, the images presented above faithfully replicate what I saw when I was focusing using the D810’s magnified live view. There’s nothing wrong with the D810 live view per se; it’s just that, without focus peaking, it’s harder to use precisely than the Sony a7R’s.

So, to get back to why IQ180 users aren’t complaining about inability to achieve critical focus, my guess is that they’re getting focus that’s accurate enough for sharp-appearing images, which is a lower bar than the focus necessary to get sharp-measuring images.

But to back away from this a bit, the advent of high-res sensors and marvelous new lenses like the Otus 55 and the exotic German tech-camera lenses used by landscape photographers on the IQ180 has given photographers such exquisite tools that a lot of our focus infrastructure is falling apart.

Except for wide lenses, rangefinders can’t provide sufficient accuracy. Neither focusing on the ground glass nor phase-detection autofocus will cut it if you want things consistently really sharp; in SLR cameras, the mismatch between the ground-glass distance and the sensor distance is always going to be a potential problem. The same goes for contrast-detection AF, though improved algorithms may help there in the future.

Depth of field tables are obsolete, too, as the acceptable circle of confusion gets smaller and smaller.
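Here’s a sketch of how the standard thin-lens depth-of-field formulas respond to a smaller circle of confusion. The 0.03 mm value is the traditional full-frame CoC; using two pixel pitches of a D810-like sensor as the CoC is an assumption for illustration, not a standard.

```python
import math

def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance, measured from the lens, in mm."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def dof_limits_mm(focal_mm, f_number, coc_mm, subject_mm):
    """Near and far limits of acceptable sharpness for a given subject distance."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    far = math.inf if subject_mm >= h else subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far

# 55 mm at f/4: traditional 0.03 mm CoC vs. a CoC of two 4.88 um pixels.
for coc in (0.03, 2 * 0.00488):
    near, far = dof_limits_mm(55.0, 4.0, coc, 5000.0)
    print(f"CoC {coc * 1000:.1f} um: H = {hyperfocal_mm(55.0, 4.0, coc) / 1000:.1f} m, "
          f"DoF at 5 m = {near / 1000:.2f} to {far / 1000:.2f} m")
```

With those assumptions, the hyperfocal distance roughly triples, and at 5 m the zone of acceptable sharpness shrinks from about 4.2 to 6.2 m to about 4.7 to 5.3 m.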

Lens tilts get more problematic, too: as the definition of “sufficiently” tightens, it gets harder to find a subject plane that’s sufficiently flat.

What to do? I’ll have some ideas in the next post.

No, it’s thanks Jim 🙂

The easy bit is asking the questions.

I do see the reasoning behind higher MP being a “good thing,” but did you balance that against the inevitable density/size required, which will push you below f/4 and make those problems worse?

I agree on the IQ180 that good enough is good enough for end use. Indeed, given the slanted-edge images, which are very instructive, a difference may not be easily distinguished by eye until pushed, although the target images are clear, so it may be a case of knowing what you are looking for.

Just one more point as well: how much software lens correction is going on in these cameras, even in the raw files, and what effect is that having?

I am intrigued by the new Phase hyperfocal focus-setting “calculator,” which is a different approach to obtaining good-enough sharpness over a greater part of the subject. It depends on the subject, of course; I can’t see it being used in portraiture much!!

We are a long, long way from having sensors that can deal with the details of images from good lenses set to f/4. By the time we do, I’m hoping we have some sophisticated image processing tricks at our disposal. In the meantime, I’m usually stopping down past that.

Jim
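One rough way to see how far off that is: a diffraction-limited lens at f-number N passes detail up to 1/(λN) cycles/mm, and capturing all of it without aliasing needs a Nyquist frequency at least that high. This sketch assumes 550 nm light, a perfect lens, and point sampling, so it’s a stricter criterion than a practical one, and it isn’t the simulation mentioned in the post.

```python
def diffraction_cutoff_cy_per_mm(f_number, wavelength_um=0.55):
    """Spatial frequency at which a perfect lens's MTF reaches zero (incoherent light)."""
    return 1000.0 / (wavelength_um * f_number)

def pitch_for_full_capture_um(f_number, wavelength_um=0.55):
    """Pixel pitch whose Nyquist frequency equals the diffraction cutoff."""
    return 1000.0 / (2.0 * diffraction_cutoff_cy_per_mm(f_number, wavelength_um))

for n in (4.0, 5.6, 8.0):
    p = pitch_for_full_capture_um(n)
    mp = 36.0 * 24.0 / p ** 2  # square pixels on a 36 x 24 mm sensor, in megapixels
    print(f"f/{n:g}: cutoff {diffraction_cutoff_cy_per_mm(n):.0f} cy/mm, "
          f"pitch {p:.2f} um, about {mp:.0f} MP")
```

At f/4 that works out to roughly a 1.1 µm pitch, or around 700 MP on a 36 x 24 mm sensor.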

I cannot detect any spatial filtering at all in raw files from the D810 or a7x cameras at base ISO, single-shot shutter mode, and exposures faster than 1/30 second.

Of course, Lr is happy to silently apply spatial filtering depending on the camera, lens, and phase of the moon.

Jim
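One simple way to check raw data for spatial filtering, offered as a sketch rather than the method behind those observations: with uncorrelated (white) noise, averaging 2x2 blocks halves the standard deviation, so a ratio well above 0.5 suggests that neighboring pixels have been smoothed together. It assumes you feed it a single CFA channel (e.g. `raw[0::2, 0::2]`) of a dark frame; fixed-pattern noise such as banding can also raise the ratio, so subtracting two dark frames first is a sensible precaution. The function name is hypothetical.

```python
import numpy as np

def binning_std_ratio(plane):
    """Ratio of noise std after 2x2 block averaging to the std before.

    About 0.5 for spatially uncorrelated noise; noticeably higher when the
    data has been low-pass filtered, because neighbors become correlated.
    plane: 2-D array from ONE CFA channel of a dark frame (or dark-frame difference).
    """
    a = np.asarray(plane, dtype=np.float64)
    h, w = a.shape[0] // 2 * 2, a.shape[1] // 2 * 2  # crop to even dimensions
    a = a[:h, :w]
    binned = a.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))
    return binned.std() / a.std()
```

For white Gaussian noise the ratio comes out near 0.5; after a three-tap horizontal box blur it rises to about 0.65.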

Jim, does that answer (and I’m sure it does) mean exactly what it says? 🙂

That is: no filtering under those specific conditions, implying that there are conditions under which you can detect it? Or is it just my paranoia?

Chris, there are conditions when Nikon and Sony apply some spatial filtering in the interest of noise reduction: Sony at nosebleed ISOs in the a7S, for example, and in the a7S and a7II when the shutter is set to bulb.

http://blog.kasson.com/?p=10012

Nikon for longish exposures.

http://blog.kasson.com/?p=6910

Jim