A DPR poster posed an interesting question: “What does sensor outresolving look like in photos?” To answer it, I first needed to define what “sensor outresolving” means. I’m taking it to mean that the sensor samples the image projected by the lens finely enough that nothing in that image lies above the sensor’s Nyquist frequency, that is, at more than twice the highest spatial frequency the lens delivers.
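To make that definition concrete, here is a minimal Python sketch of what happens to detail the sensor does not outresolve. It uses the standard frequency-folding rule for uniform sampling; the function name and the example numbers are illustrative assumptions, not measurements from this test.

```python
def alias_frequency(signal_freq, sample_rate):
    """Apparent frequency after sampling signal_freq at sample_rate.

    Both arguments use the same units (for example cycles/mm and samples/mm).
    Frequencies at or below Nyquist (sample_rate / 2) come back unchanged;
    anything higher folds back into the 0..Nyquist band.
    """
    nyquist = sample_rate / 2.0
    folded = signal_freq % sample_rate  # position within one sampling period
    return folded if folded <= nyquist else sample_rate - folded


if __name__ == "__main__":
    sample_rate = 100.0  # samples per mm, an illustrative value only
    for freq in (30.0, 50.0, 70.0, 120.0):
        print(f"{freq:6.1f} cy/mm is recorded as "
              f"{alias_frequency(freq, sample_rate):5.1f} cy/mm")
```

With 100 samples/mm, detail at 70 cycles/mm is recorded as if it were 30 cycles/mm. A sensor that outresolves the lens never sees such frequencies in the first place.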

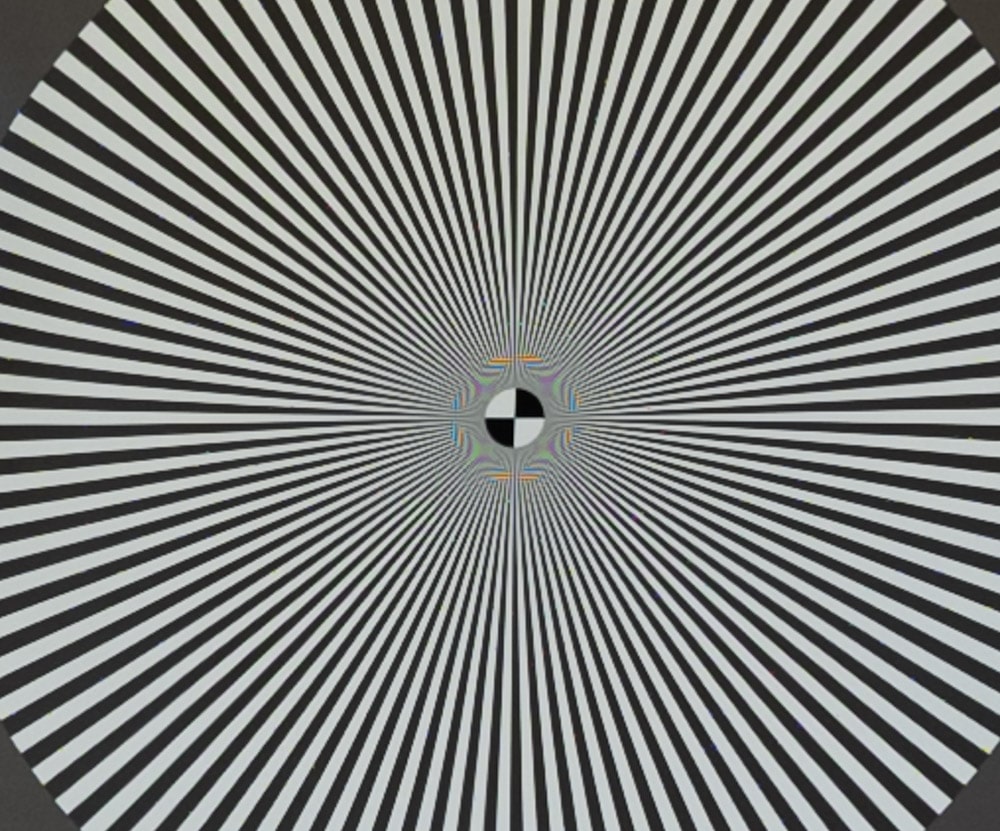

Taking that as the definition, I ran a little experiment to find the answer. I set up the GFX 100 and the Fuji 110 mm f/2 lens on this scene:

I used the focus bracketing feature of the GFX 100 to step through and well past the in-focus point, using the smallest possible step size of 1. I developed the images in Lightroom with a few contrast, white balance, and exposure tweaks, and I set the sharpening to strength = 20, radius = 1, and detail = 0.

Here’s an in-focus crop:

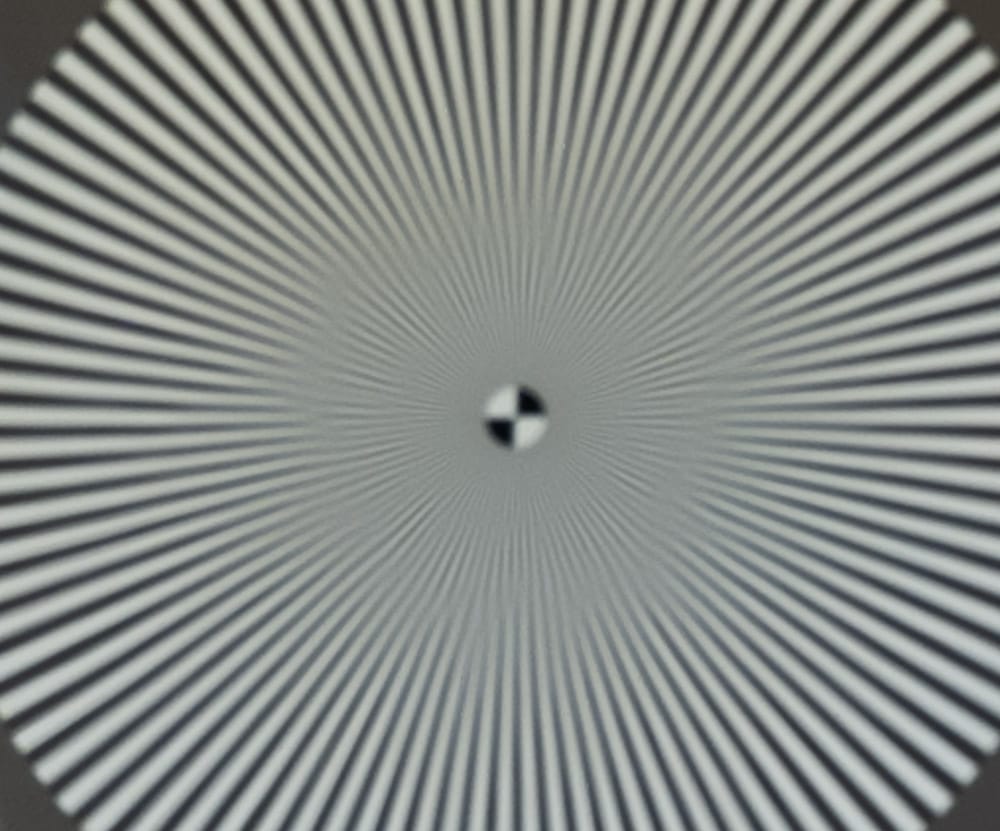

I then scanned the images until I found the closest one in the sequence that had no visible aliasing. It was 18 steps away from the in-focus one.

There’s your answer. If you’re going to use diffraction, lens aberrations, and defocusing to create a strong enough low-pass filter that there’s no visible aliasing, your image is going to look really soft at the pixel level.
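For a rough sense of scale, the sketch below works out how much diffraction alone would be needed to keep everything under the sensor's Nyquist frequency. It assumes an ideal, aberration-free lens with a circular aperture, green light at 0.55 µm, and a GFX 100 pixel pitch of about 3.76 µm. Those figures and the function names are assumptions for illustration; the experiment above used defocus rather than stopping down.

```python
def sensor_nyquist_cy_per_mm(pixel_pitch_um):
    """Sensor Nyquist frequency in cycles/mm: one cycle needs two pixels."""
    return 1000.0 / (2.0 * pixel_pitch_um)


def diffraction_cutoff_cy_per_mm(f_number, wavelength_um=0.55):
    """Frequency at which an ideal lens's MTF reaches zero: 1 / (wavelength * N)."""
    return 1000.0 / (wavelength_um * f_number)


def f_number_for_no_aliasing(pixel_pitch_um, wavelength_um=0.55):
    """f-number at which the diffraction cutoff equals the sensor Nyquist frequency."""
    return 2.0 * pixel_pitch_um / wavelength_um


if __name__ == "__main__":
    pitch_um = 3.76  # assumed GFX 100 pixel pitch in micrometres
    n_stop = f_number_for_no_aliasing(pitch_um)
    print(f"Sensor Nyquist: {sensor_nyquist_cy_per_mm(pitch_um):.1f} cy/mm")
    print(f"Diffraction cutoff matches Nyquist near f/{n_stop:.1f}")
    print(f"Cutoff at f/2: {diffraction_cutoff_cy_per_mm(2.0):.1f} cy/mm")
```

Under these assumptions the two frequencies meet at roughly f/13.7. At that aperture the contrast of an ideal lens is already very low well below Nyquist, which is consistent with the pixel-level softness described above.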

Even that out-of-focus one shows some phase reversal.

Jim, is there, or could there be, a camera mode that takes two shots so that some algorithm could detect false colors, maybe not perfectly but fairly reliably? Today I sometimes rely on RawTherapee’s False Color Suppression, which just tries to guess.

Another question that came to mind is what this would look like for a pixel-shifted image (4 images, full color information).

You can do that in post, after a fashion. Use auto-align on the two shots, then subtract them.
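As a rough illustration of the subtract step, here is a minimal Python sketch. It assumes the two shots are already aligned and loaded as equal-sized grids of (R, G, B) tuples; the function names and the threshold are hypothetical, and this is not a description of any particular editor's algorithm.

```python
def channel_difference(shot_a, shot_b):
    """Per-pixel, per-channel absolute difference of two aligned RGB images.

    Each image is a list of rows; each row is a list of (R, G, B) tuples.
    """
    if len(shot_a) != len(shot_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(shot_a, shot_b)
    ):
        raise ValueError("images must have identical dimensions")
    return [
        [tuple(abs(a - b) for a, b in zip(px_a, px_b))
         for px_a, px_b in zip(row_a, row_b)]
        for row_a, row_b in zip(shot_a, shot_b)
    ]


def flag_suspect_pixels(diff, threshold=30):
    """Mark pixels where any channel differs by more than threshold (assumed value)."""
    return [[max(px) > threshold for px in row] for row in diff]


if __name__ == "__main__":
    first = [[(120, 120, 120), (200, 90, 40)]]
    second = [[(122, 119, 121), (110, 100, 150)]]
    print(flag_suspect_pixels(channel_difference(first, second)))
```

Pixels whose color changes a lot between two nearly identical shots are candidates for false color, though subject motion and noise will also show up in the difference.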

https://blog.kasson.com/gfx-100/sony-a7riv-with-pixel-shift-vs-fujifilm-gfx-100/