This is the third in a series of posts on color reproduction. The series starts here.

I stated in the last post that, in the general case of arbitrary subject matter and arbitrary lighting, we can’t get accurate color – even using our limited definition of accurate – from cameras that don’t meet the Luther-Ives condition. I also said there aren’t any consumer cameras that meet that condition.

Because the filters in the Bayer array of your camera don’t meet the Luther-Ives condition, there are some spectra that your eyes see as matching, and the camera sees as different, and there are some spectra that the camera sees as matching and your eyes see as different. This is called capture metameric error, or, less precisely, camera metamerism. No amount of post-exposure processing can fix this.

What to do?

The simplest approach is to pretend that the camera does meet the Luther-Ives condition. You come up with a compromise three-by-three matrix intended to minimize color errors for common objects lit by common light sources, then multiply the RGB values in the demosaiced image by this matrix, as if Luther-Ives were met, to get to some linear variant of a standard color space like sRGB or Adobe RGB (1998).

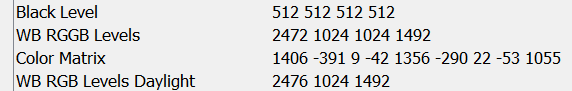
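To make the matrix step concrete, here is a minimal Python sketch of multiplying each demosaiced RGB triplet by a three-by-three matrix. The matrix values are invented for illustration and do not come from any real camera, and the names `COMPROMISE`, `apply_matrix`, and `convert_image` are hypothetical. The sketch also assumes the input values are linear and already white balanced.

```python
# Hypothetical compromise matrix (camera RGB -> linear output RGB).
# The values are made up for illustration; they are NOT from a real camera.
# Each row sums to 1, so a neutral input (R = G = B) stays neutral.
COMPROMISE = [
    [1.6, -0.4, -0.2],
    [-0.2, 1.5, -0.3],
    [0.0, -0.5, 1.5],
]


def apply_matrix(matrix, rgb):
    """Multiply a 3x3 matrix by one RGB triplet treated as a column vector."""
    return [sum(m * c for m, c in zip(row, rgb)) for row in matrix]


def convert_image(matrix, pixels):
    """Apply the same matrix to every pixel in a flat list of RGB triplets."""
    return [apply_matrix(matrix, p) for p in pixels]


if __name__ == "__main__":
    # A mid-gray pixel and a saturated camera-red pixel.
    print(convert_image(COMPROMISE, [[0.5, 0.5, 0.5], [1.0, 0.0, 0.0]]))
```

The negative off-diagonal terms are typical of such matrices: they subtract the overlap between the camera's channels. They also mean that saturated inputs can land outside the 0 to 1 range of the output space.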

Many cameras provide a compromise matrix in the EXIF metadata as an aid to a downstream raw converter. Here is the matrix from a Sony a7II:

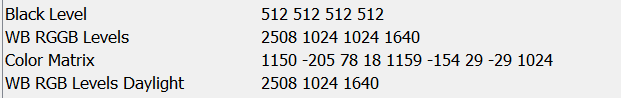

Here’s the matrix for a Sony a7RII:

There are ways to get more accurate color, such as three-dimensional lookup tables, but they are memory and computation intensive and are not generally used in cameras. They may be used in some raw converters. I’d appreciate input on this.
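For comparison with the nine numbers in a matrix, here is a minimal sketch of a three-dimensional lookup table with trilinear interpolation. It is a generic illustration of the technique, not the method of any particular camera or raw converter. The function names `make_identity_lut` and `lookup` are hypothetical, and the table is an identity table, where a real profile would store corrected colors at each node.

```python
def make_identity_lut(n):
    """Build an n*n*n table whose entry at (i, j, k) is that node's own RGB.

    A real profile would store a corrected color at each node instead.
    Requires n >= 2.
    """
    step = 1.0 / (n - 1)
    return [[[(i * step, j * step, k * step) for k in range(n)]
             for j in range(n)] for i in range(n)]


def lookup(lut, rgb):
    """Trilinearly interpolate an RGB triplet through a 3D LUT.

    Inputs are clamped to the range [0, 1] before the lookup.
    """
    n = len(lut)
    idx, frac = [], []
    for c in rgb:
        pos = min(max(c, 0.0), 1.0) * (n - 1)
        lo = min(int(pos), n - 2)  # keep lo + 1 inside the table
        idx.append(lo)
        frac.append(pos - lo)

    out = [0.0, 0.0, 0.0]
    # Blend the 8 nodes at the corners of the enclosing cell.
    for di in (0, 1):
        for dj in (0, 1):
            for dk in (0, 1):
                w = ((frac[0] if di else 1.0 - frac[0]) *
                     (frac[1] if dj else 1.0 - frac[1]) *
                     (frac[2] if dk else 1.0 - frac[2]))
                node = lut[idx[0] + di][idx[1] + dj][idx[2] + dk]
                for ch in range(3):
                    out[ch] += w * node[ch]
    return out


if __name__ == "__main__":
    lut = make_identity_lut(5)
    print(lookup(lut, [0.2, 0.55, 1.0]))
```

Each lookup reads eight nodes and computes eight weights per pixel, against nine multiplies for a matrix. The storage grows with the cube of the table size: a table with 33 nodes per axis (an illustrative size) holds 35,937 nodes, or 107,811 numbers.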

However, no matter how colors are reconstructed from a non-Luther camera, as I said earlier, they can’t be 100% accurate for all subjects and all illuminants.

Next: constructing a compromise matrix.

Hi, I’m one of the developers of darktable[1]. I’m really enjoying this series of articles. I was particularly intrigued by this bit:

> There are ways to get more accurate color, such as three-dimensional lookup tables, but they are memory and computation intensive and are not generally used in cameras. They are certainly used in raw converters.

It’s common to use LUTs for display and printing profiles but I’ve never seen any used in raw processing. Everything in darktable uses a 3×3 and I haven’t seen that Adobe does anything different. Do you have any references to use of 3D LUTs for color transformation of raw files?

[1] https://www.darktable.org/

I thought Adobe used LUTs. I’m not sure why I thought that, except that compromise matrixes are such blunt tools. Until I find out more, I’m going to soften the statement.

Thanks for the information.

Jim

> I thought Adobe used LUTs

Quite so, all current Adobe DCP profiles are LUT-based.

I understand that Nikon uses LUT profiles in its CaptureNXx products. Does anybody know for sure?

They do.

I read somewhere (maybe it was just implied?) that the [xyz<-camera] matrices in dcraw are taken from metadata in DNGs generated by the Adobe DNG Converter…

Then it multiplies them by the [colorspace<-xyz] matrix to put the image into the final color space.

This stuff honestly makes my head hurt after too long, though, and I can't remember where in the chain which white balance multipliers are applied…

Jim, feast your eyes on Anders Torger's excellent site and camera profiles: http://www.ludd.ltu.se/~torger/dcamprof.html

Jack