A few days ago, I posted the results of simulating camera motion blur in a Sony alpha 7R (a7R). That post showed how the modulation transfer function, as measured by MTF50, varied with camera motion blur measured in pixels. It also showed simulated photographs with their motion distances and MTF50 values, so that you could see what the various MTF50 values translate to in terms of the subjective impression of images.

Today, I’m doing something similar for the Sony alpha 7 Mark II (a7II). It’s turned out to be a little tricky because of the way that the a7II’s anisotropic antialiasing (AA) filter works. If you take a picture of a test chart like the ISO 12233 one, you’ll notice that the a7II doesn’t suffer any loss in resolution in the vertical direction (horizontal lines) due to the AA filter, but there’s a small amount of loss horizontally (vertical lines).

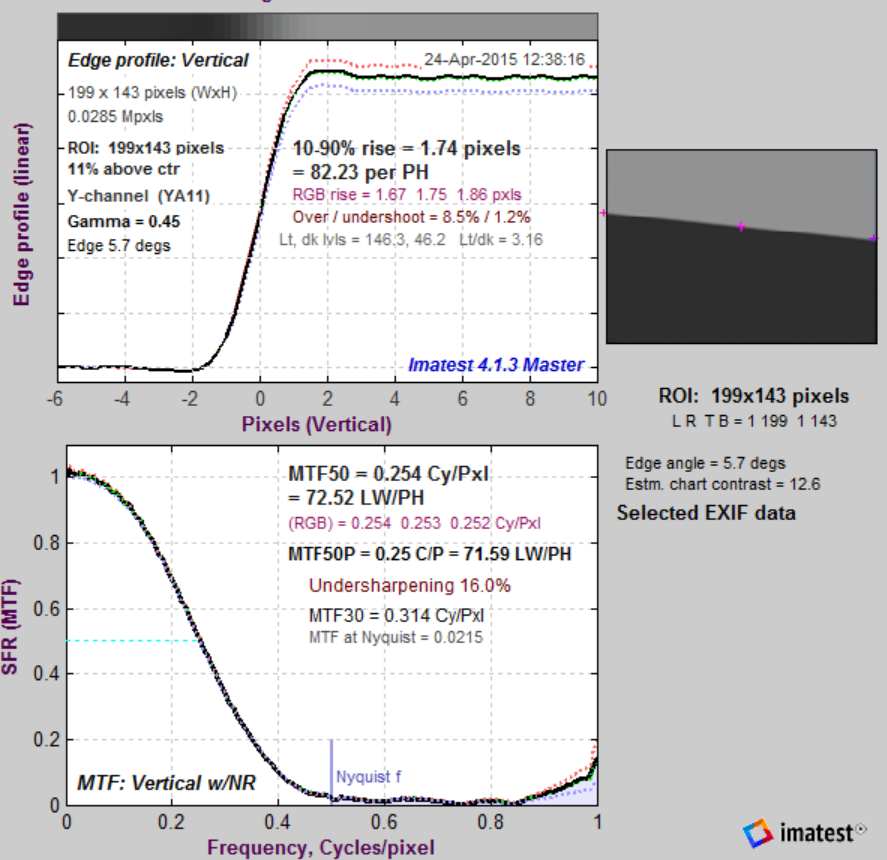

I added some features to the model so that I could emulate the behavior of the a7II’s AA filter. Here’s what Imatest’s SFR function looks like for a conventional 4-way phase-shift AA filter and a horizontal edge with the 6 um pixel pitch of the a7II and a simulated Zeiss Otus 55/1.4 set to f/5.6:

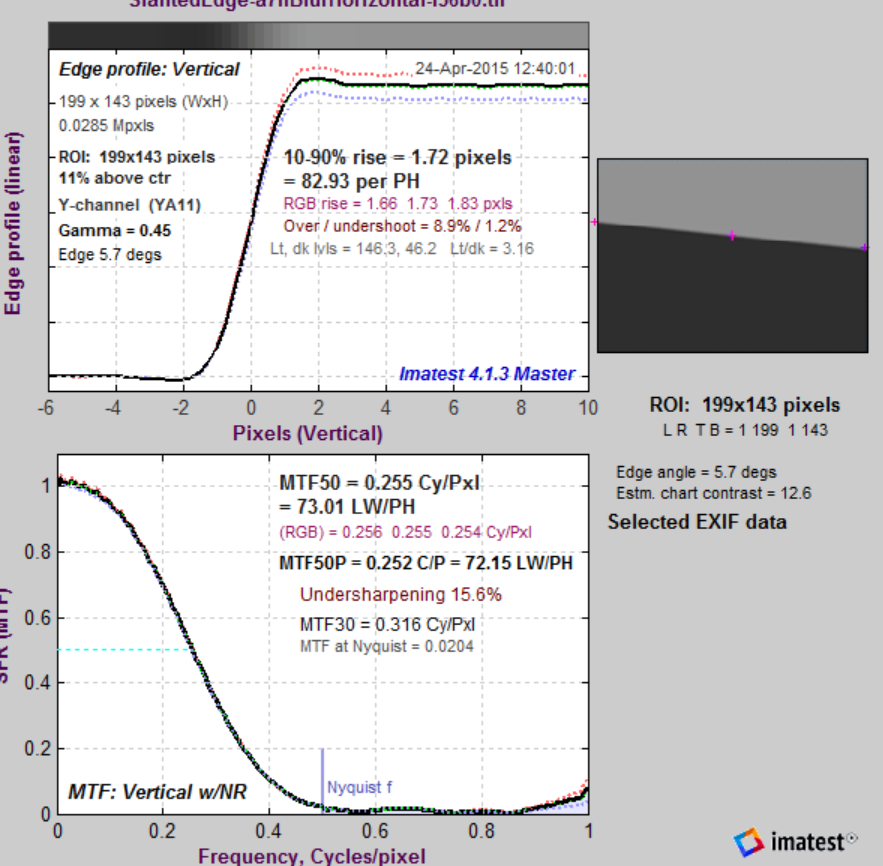

And here’s what it looks like with a vertical 2-way phase-shift AA filter:

Almost identical, because the filter runs almost perpendicular to the slanted edge.

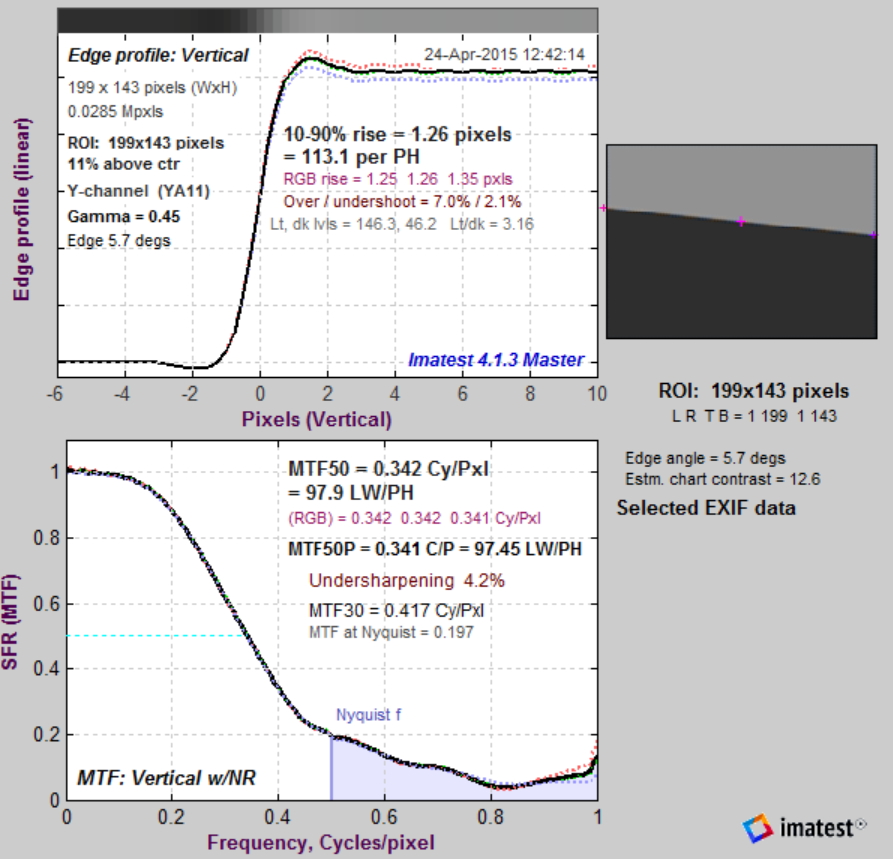

But things change if we simulate a horizontal 2-way phase-shift AA filter:

Now the lens is resolving so much detail that there is significant aliasing. Note the overshoot. This is not due to deconvolution filtering, since I’m not using any. It is strictly a property of the Matlab implementation of the gradient-corrected linear interpolation demosaicing algorithm that I’m using.
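To see why the vertical 2-way filter behaves like the 4-way one on a horizontal edge while the horizontal 2-way filter barely affects it, here is a minimal Python sketch of an idealized beam-splitting AA filter. It is not the Matlab model used for the plots. It assumes each 2-way filter splits a point into two equal spots one pixel pitch apart (so the first null falls at Nyquist), and that the slanted edge is tilted 5 degrees from horizontal. The function names, the split distance, and the tilt are all illustrative assumptions.

```python
import math

def two_way_aa_mtf(freq_cy_per_px, split_px, split_angle_deg, edge_normal_angle_deg):
    """MTF of an ideal two-spot beam splitter, measured along the edge normal.

    Two equal spots separated by split_px have the transfer function
    cos(pi * split_px * f), where f is the frequency component along
    the split direction. Angles are measured from the horizontal axis.
    """
    delta = math.radians(split_angle_deg - edge_normal_angle_deg)
    f_along_split = freq_cy_per_px * math.cos(delta)
    return abs(math.cos(math.pi * split_px * f_along_split))

def four_way_aa_mtf(freq_cy_per_px, split_px, edge_normal_angle_deg):
    """A 4-way filter modeled as horizontal and vertical 2-way filters in cascade."""
    horizontal = two_way_aa_mtf(freq_cy_per_px, split_px, 0.0, edge_normal_angle_deg)
    vertical = two_way_aa_mtf(freq_cy_per_px, split_px, 90.0, edge_normal_angle_deg)
    return horizontal * vertical

# Assumed setup: near-horizontal edge tilted 5 degrees, so its normal sits at 95 degrees.
EDGE_NORMAL_DEG = 95.0
SPLIT_PX = 1.0      # assumed split distance: one pixel pitch
NYQUIST = 0.5       # cycles/pixel

for name, value in [
    ("4-way", four_way_aa_mtf(NYQUIST, SPLIT_PX, EDGE_NORMAL_DEG)),
    ("vertical 2-way", two_way_aa_mtf(NYQUIST, SPLIT_PX, 90.0, EDGE_NORMAL_DEG)),
    ("horizontal 2-way", two_way_aa_mtf(NYQUIST, SPLIT_PX, 0.0, EDGE_NORMAL_DEG)),
]:
    print(f"{name:>17}: MTF at Nyquist = {value:.3f}")
```

Under these assumptions the vertical 2-way and 4-way filters both drive the response near Nyquist almost to zero for this edge, while the horizontal 2-way filter leaves it nearly untouched, which is the condition under which aliasing shows up.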

I set up a run of the simulator with various amounts of motion blur, modeled as linear, constant speed movement of the image across the sensor at a forty-five degree angle. By the way, these things take a long time to run:
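As a rough illustration of this blur model, linear constant-speed motion spreads a point into a uniform line segment, whose MTF along the direction of motion is a sinc function. The Python sketch below computes that MTF for the motion alone, as seen across a horizontal edge. It ignores the lens, the AA filter, the pixel aperture, and demosaicing, so it will not reproduce the simulator's MTF50 numbers. The function names are hypothetical.

```python
import math

def motion_mtf(freq_cy_per_px, blur_px, motion_angle_deg=45.0, edge_normal_angle_deg=90.0):
    """MTF of linear, constant-speed motion blur, measured along the edge normal.

    Only the component of the motion along the edge normal blurs the edge,
    so a 45-degree move of blur_px pixels acts like blur_px / sqrt(2) pixels
    across a horizontal edge.
    """
    delta = math.radians(motion_angle_deg - edge_normal_angle_deg)
    x = blur_px * abs(math.cos(delta)) * freq_cy_per_px
    if x == 0.0:
        return 1.0
    return abs(math.sin(math.pi * x) / (math.pi * x))

def motion_only_mtf50(blur_px, motion_angle_deg=45.0, edge_normal_angle_deg=90.0):
    """Frequency (cycles/pixel) at which the motion-only MTF first falls to 0.5."""
    delta = math.radians(motion_angle_deg - edge_normal_angle_deg)
    effective_px = blur_px * abs(math.cos(delta))
    if effective_px == 0.0:
        return math.inf  # no blur across the edge, so no motion-limited MTF50
    # The sinc main lobe falls monotonically from 1 to 0 on [0, 1/effective_px].
    lo, hi = 0.0, 1.0 / effective_px
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if motion_mtf(mid, blur_px, motion_angle_deg, edge_normal_angle_deg) > 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

for blur in (1, 2, 4, 8):
    print(f"{blur} px at 45 degrees: motion-only MTF50 = {motion_only_mtf50(blur):.4f} cycles/pixel")
```

Because the system MTF is the product of the individual MTFs, these motion-only figures are best read as a ceiling that the motion imposes, not as a prediction of the measured values.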

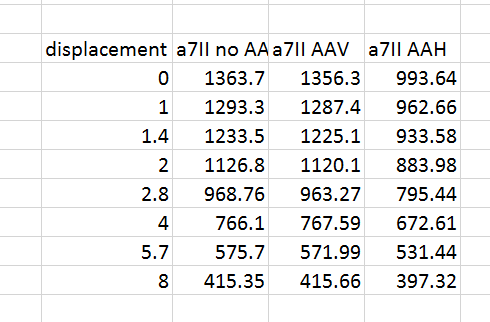

Here are the MTF50 numbers in cycles/picture height for a horizontal edge with displacements from 0 to 8 pixels at a 45 degree angle:

Here are the 1:1 crops from the photographic scene enlarged 300%:

It’s really hard to see the effects of one pixel of camera motion blur in the a7II.

If you compare these images to the a7R motion blur images, remember that the pixels in the a7II are 6 um apart, while the pixels in the a7R are 4.88 um apart. Therefore, one pixel of blur represents more camera motion in the a7II case than in the a7R case.
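The conversion between the two cameras is simple arithmetic on the two pixel pitches quoted above. A small Python sketch, with hypothetical helper names:

```python
A7II_PITCH_UM = 6.0   # a7II pixel pitch, from the text
A7R_PITCH_UM = 4.88   # a7R pixel pitch, from the text

def blur_on_sensor_um(blur_px, pitch_um):
    """Physical length of the blur on the sensor, in micrometers."""
    return blur_px * pitch_um

def equivalent_blur_px(blur_px, from_pitch_um, to_pitch_um):
    """Blur in pixels on one sensor that covers the same physical distance on another."""
    return blur_on_sensor_um(blur_px, from_pitch_um) / to_pitch_um

for blur in (1, 2, 4, 8):
    a7r_px = equivalent_blur_px(blur, A7II_PITCH_UM, A7R_PITCH_UM)
    print(f"{blur} px on the a7II = {blur_on_sensor_um(blur, A7II_PITCH_UM):.1f} um "
          f"= {a7r_px:.2f} px on the a7R")
```

One pixel of blur on the a7II covers the same distance on the sensor as about 1.23 pixels on the a7R.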

I presume, always a dangerous extension of too little knowledge, that the MTF reduction is also seen with the D800 v D800E, but does the “two way zeroing” of the E filter reduce MTF compared to the 810?

Apologies if you have already addressed this.

Supplemental: Is the ZM 35mm f1.4 on its way? Mine just arrived, and physically it looks like it means business 🙂

Hi Jim,

wrt the over/undershoot, it’d be interesting to take a look at that demosaicing algorithm under the hood to see whether it does something similar to low-level deconvolution somewhere along the process. As a reference there is no sign of over/undershoots in ESFs from dcraw AHD (lmmse) and VNG or RT Amaze:

http://www.strollswithmydog.com/raw-converter-sharpening-with-sliders-at-zero/

Jack, here’s a description of the algorithm:

http://research.microsoft.com/en-us/um/people/lhe/papers/icassp04.demosaicing.pdf

Jim

Jack, I have a Matlab implementation of AHD. Maybe I’ll give that a try. It’s not well documented…

Jack, here’s all I can find about Matlab’s implementation, which is essentially nothing:

http://www.mathworks.com/help/images/ref/demosaic.html

Jim

I’m old fashioned about these things.

If I can carry the Zeiss 21mm, Otus 55mm and Apo-Sonnar 135mm then I can carry an RRS and use it most every shot. It just works.

Spending thousands on good equipment and thousands getting to a destination, and then leaving IQ to ‘sloppy technique’, just doesn’t make sense to me.

It’s like storing and preserving a nice bottle of wine for 2 decades then leaving it in a hot car the day you plan to drink it.