I scratched my head about yesterday’s results, which seemed to show that the Otus 55/1.4 ZF.2 had greater resolution on the Sony alpha 7R (a7R) than on the Nikon D810. From the comments, some of you were just as confused as I was. One of you nailed the problem, which was developing the images in Lightroom (Lr).

You see, Lr does some funny things without informing the user. One of them is to apply sharpening based on the camera and the lens. This fun-loving program will do that even if you turn sharpening off. When I mounted the Otus on the D810, the camera passed the lens data and the f-stop to the metadata, and Lr apparently looked at that metadata and was gentle with the sharpening. When I mounted the Otus on the a7R with an adapter, Lr had no idea what lens was on the camera, or what f-stop it was set to. So it applied some kind of generic sharpening, which, as it turns out, was pretty aggressive.

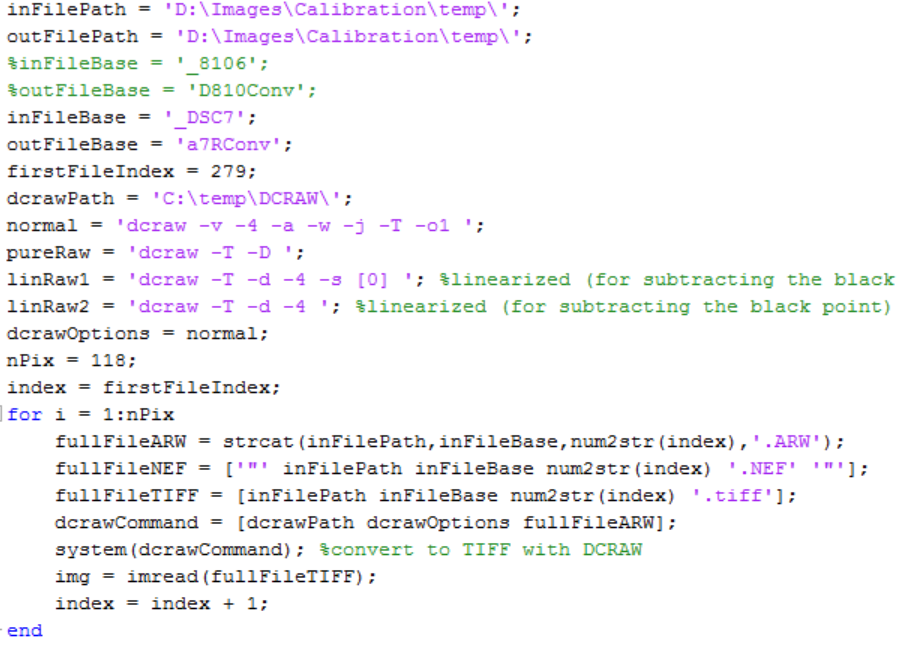

I needed to use another raw converter, one that would do as it was told. DCRAW is such a converter. It has no batch mode, and I had more than 200 images to convert, so I wrote a Matlab script to call DCRAW and tell it to use AHD for demosaicing:
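For readers who want to try the same kind of batch conversion, here is a minimal sketch in Python rather than Matlab. It is not the script used for this post. The `-q 3` (AHD interpolation) and `-T` (TIFF output) switches are standard dcraw options; the function names, the file extensions, and the assumption that `dcraw` is on the PATH are mine.

```python
import subprocess
from pathlib import Path


def dcraw_command(raw_path, dcraw_exe="dcraw"):
    """Build the dcraw argument list for one raw file (hypothetical helper)."""
    return [
        dcraw_exe,
        "-q", "3",  # interpolation quality 3 selects AHD demosaicing
        "-T",       # write a TIFF instead of the default PPM
        str(raw_path),
    ]


def convert_folder(folder, patterns=("*.NEF", "*.ARW"), dcraw_exe="dcraw",
                   dry_run=False):
    """Run dcraw once per raw file in `folder`; return the commands used.

    The extensions are assumptions (Nikon NEF, Sony ARW). With dry_run=True
    the commands are only collected, not executed.
    """
    commands = []
    for pattern in patterns:
        for raw_path in sorted(Path(folder).glob(pattern)):
            cmd = dcraw_command(raw_path, dcraw_exe)
            commands.append(cmd)
            if not dry_run:
                # check=True stops the batch if dcraw reports an error
                subprocess.run(cmd, check=True)
    return commands
```

Calling `convert_folder("some/folder", dry_run=True)` first is a cheap way to check which files would be converted before committing to a few hundred conversions.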

I then turned Imatest loose on the TIFF files that DCRAW generated.

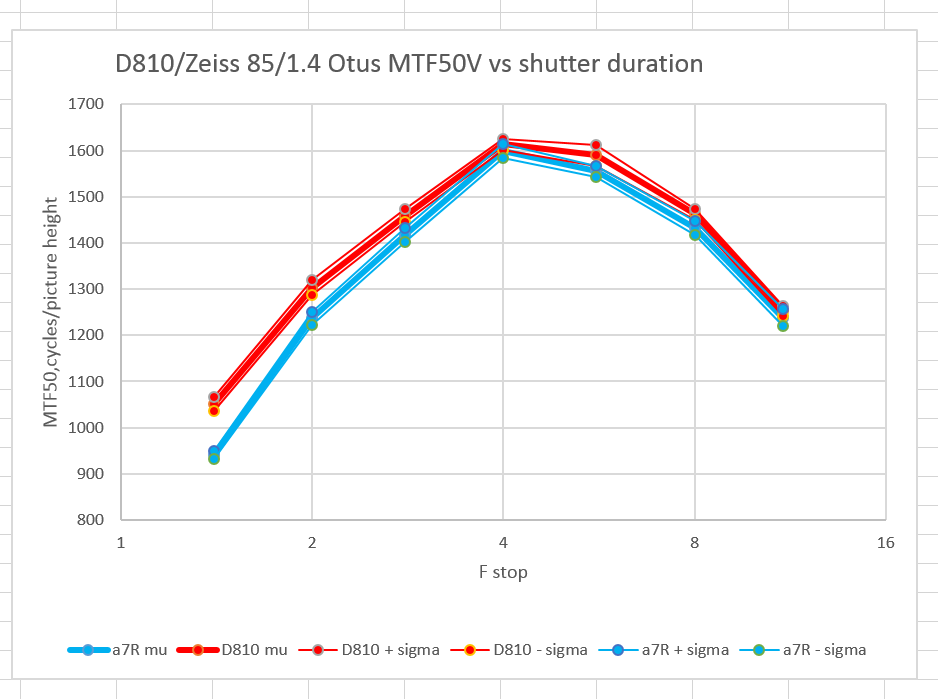

For vertical edges:

Now the two cameras are essentially the same, except at f/1.4 and f/2, where the Nikon wins. Note that the absolute numbers are lower in both cases. That’s because sharpening increases the MTF50 numbers as measured by the slanted edge method.
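To see why sharpening inflates MTF50, here is a small numerical sketch. It is a toy model, not what Imatest does internally: the line spread function is assumed to be a Gaussian, the sharpening is a plain unsharp mask, and all names and parameter values are illustrative.

```python
import numpy as np


def gaussian_kernel(sigma, radius):
    """Sampled Gaussian, normalized to unit sum."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def unsharp_mask(signal, amount=1.0, sigma=1.0):
    """Add back `amount` times the difference between signal and its blur."""
    blurred = np.convolve(signal, gaussian_kernel(sigma, 8), mode="same")
    return signal + amount * (signal - blurred)


def mtf50(lsf):
    """Frequency (cycles/pixel) where the MTF first falls below 0.5."""
    mtf = np.abs(np.fft.rfft(lsf))
    mtf = mtf / mtf[0]                   # normalize to 1 at zero frequency
    freqs = np.fft.rfftfreq(len(lsf))    # sample spacing of one pixel
    below = np.nonzero(mtf < 0.5)[0]
    if below.size == 0:
        raise ValueError("MTF never drops below 0.5")
    i = below[0]
    # linear interpolation between the two samples that bracket 0.5
    slope = (freqs[i] - freqs[i - 1]) / (mtf[i] - mtf[i - 1])
    return freqs[i - 1] + (0.5 - mtf[i - 1]) * slope


if __name__ == "__main__":
    lsf = gaussian_kernel(1.5, 128)      # assumed blur of the unsharpened edge
    print("unsharpened MTF50:", mtf50(lsf))
    print("sharpened MTF50:  ", mtf50(unsharp_mask(lsf)))
```

The unsharp mask leaves the zero-frequency response alone and boosts everything above it, so the point where the curve crosses 50% moves to a higher frequency even though the lens and sensor have not changed.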

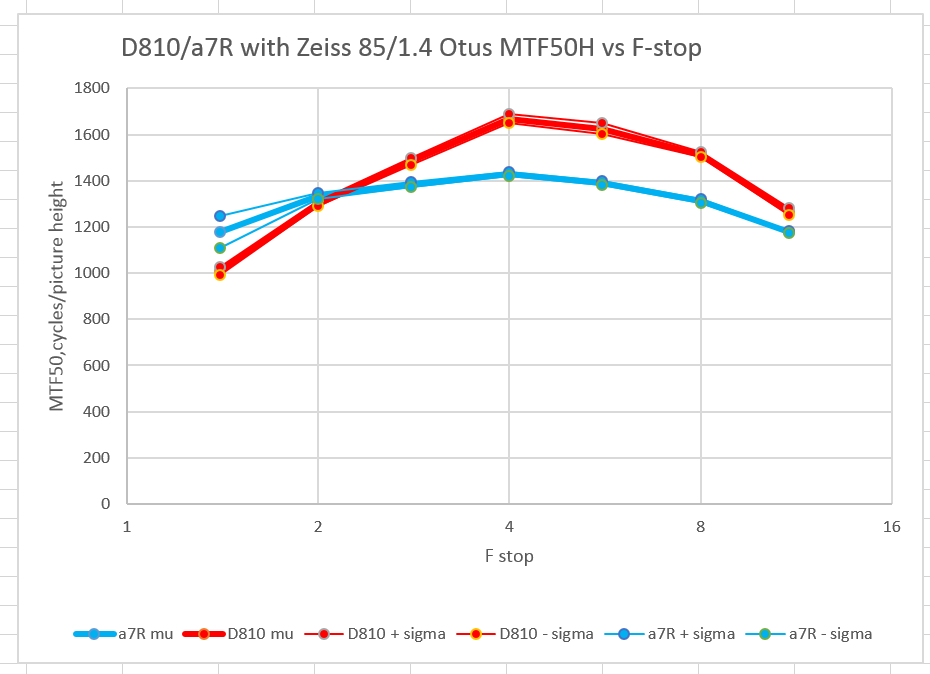

For horizontal edges:

That’s kind of weird. The Nikon falls down wide open, but comes on strong as you stop down.

I suspect that the slightly better Nikon results are due to the fact that the lens is designed for the sensor stack thickness of the Nikon cameras, and that the Sonys have a slightly different thickness. If that’s true, the effect at the corners should be greater.

Thanks for running the tests again, Jim. I’m glad you got to the bottom of this! And it goes to show how tricky it can be to run apples-to-apples comparisons.

A good friend of mine pointed out (before all of this) that a line isn’t always a line. And Jim’s new results show this to be the case. Jim, is there a percentage difference for the bottom set of data at f/4? Dare I say it looks close to 19%?

Thanks, Andre, for guessing the right culprit.

Good show, Jim. Wouldn’t a horizontal edge be more affected (as opposed to a vertical one) by what I assume is vertical vibration induced by shutter shock?

This is why I’m annoyed by all of the “fair comparisons” between cameras using ACR.

Based on what I see in DPReview’s test scene, ACR demosaicing for the Phase One IQ180 seems to use something similar to the LMMSE method found in various GPL’d converters (such as the GPL demosaic packs in LibRaw, and RawTherapee), which greatly reduces chroma moire (and also chroma noise, as a nice side effect), whereas most of the other cameras get a more basic demosaicing algorithm.

As a side note, there’s pretty much no reason anyone should consider demosaicing other than LMMSE or AMaZE, both of which are in LibRaw and RawTherapee. I don’t know how they compare on a slanted-edge test, but LMMSE really squelches color error, while AMaZE gives incredible sharpness and eliminates maze-style moire.

For the IQ backs, Phase’s inside knowledge in C1 processing could give them a fair advantage; they built it. I find that different software prioritises different aspects of the image, and that is visible even from my lowly P20.

Is it even possible to find a level playing field for these cameras to play on?

Maybe not, Chris. And the difficulties of getting repeatable results at 36 MP with lenses like the Otus are daunting for someone like me without a dedicated testing lab.

My suggestion is to work directly off the raw files, with no demosaicing at all (dcraw -D). One can then either white balance the four channels and use them as one full-resolution grayscale image, or use a tool like MTF Mapper to get slanted-edge MTF curves for the individual raw channels. Both methods are about equally accurate (the grayscale version is about the same as the average of the four channels) and should give readings of the linear spatial resolution information contained in just the raw data, with no processing. There is an easy example here, which works if white balance is properly set in-camera: http://www.strollswithmydog.com/how-to-get-mtf-curves-for-your-camera-and-lens/
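A rough sketch of the first option, white balancing the four raw channels into one grayscale image, might look like this in Python. It assumes the undemosaiced data from `dcraw -D` has already been loaded into a 2-D NumPy array and that the chosen region is neutral (gray). Unlike the linked example, which relies on in-camera white balance, the gains here are estimated from that neutral region. The function name, the `black_level` parameter, and the gain strategy are my assumptions.

```python
import numpy as np


def white_balance_mosaic(mosaic, neutral=None, black_level=0.0):
    """Equalize the four CFA positions of an undemosaiced raw image.

    mosaic      : 2-D array of raw values, one colour sample per pixel.
    neutral     : optional tuple of slices selecting a gray area; both
                  slices must start on an even index so the 2x2 colour
                  pattern stays aligned. Defaults to the whole image.
    black_level : value subtracted first (assumed equal for all channels).
    """
    data = np.asarray(mosaic, dtype=np.float64) - black_level
    region = data if neutral is None else data[neutral]

    # mean of each of the four positions in the repeating 2x2 cell
    means = {(r, c): region[r::2, c::2].mean()
             for r in (0, 1) for c in (0, 1)}
    target = max(means.values())  # scale the weaker channels up

    out = data.copy()
    for (r, c), m in means.items():
        out[r::2, c::2] *= target / m
    return out
```

Because each of the four positions in the 2x2 cell is scaled independently, the sketch does not need to know whether the sensor is RGGB, BGGR, or some other ordering. The result can be saved as a grayscale TIFF and fed to a slanted-edge tool.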